1. Introduction

1.1. Background

About 70% of the Earth's surface is covered by water, and approximately 90% of all transport is waterborne (Wu, 2020). However, as of the year 2012, global shipping emissions were approximately 938 million tonnes of CO2 and 961 million tonnes of CO2e combining CO2, CH4 and N2O, signifying around 2.2% of global anthropogenic Greenhouse Gases (GHGs) (Smith et al., 2014). By 2050, the maritime transport segment needs to reduce its total annual GHG emissionsby 50% compared to 2008 to limit the global temperature rise to no more than 2 °C above the pre-industrial level (Cames et al., 2015). In this context, waterborne transport's role becomes critical, revealing an urge to promote sustainable shipping.

Optimising maritime transport has a long history and has been an ongoing task for ship operators, designers, and builders. Since hundreds of years ago, naval architects started to seek better hull forms so the ships would feel less resistance when operating in water. Although those approaches are mainly empirical and based on simplified classic physics, they did establish the fundamental theories of naval architecture, significantly improving hull design and instigating several centuries of blossoming maritime transport. These improvements were then accompanied by the optimisation of marine engines, since the industrial revolution.

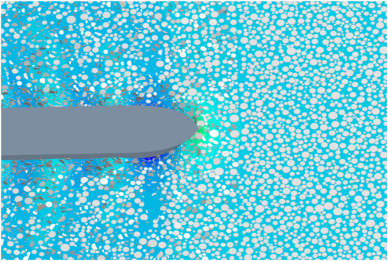

More recently, the development of high-fidelity Computational Fluid Dynamics(CFD) techniques and High-Performance Computing (HPC) units have allowed the simulation of very complex maritime scenarios such as vessels going in rough seas (Jasak, 2017; Dashtimanesh et al., 2020), vessels going in sea ice (Fig. 1) (Huang et al., 2020), water-entry processes (Huang et al., 2021a), and the flow control of hydrofoils (Pena et al., 2019). As a particular example, the work of Pena et al., 2020a, 2020b demonstrated the turbulent intensity in the flow around a full-scale cargo ship for the first time (Fig. 2), which is facilitating the development of an energy-saving device that has been shown to significantly reduce ship resistance (Pena, 2020). Another instance is that Ni et al. (2020)simulated the icebreaking process of a ship in level ice, which for the first time achieved a level of fidelity where the overall icebreaking process is coupled with the water underneath; such simulations are important for reducing the GHG, as the energy consumption of icebreakers is extremely high. These computational techniques have also been incorporated within ship design processes that have helped to find the optimal configuration without the necessity of conducting extensive experiments.

Fig. 1. Simulation of a ship advancing in a sea infested by ice floes (picture produced by Luofeng Huang using STAR-CCM+).

Fig. 1. Simulation of a ship advancing in a sea infested by ice floes (picture produced by Luofeng Huang using STAR-CCM+). Fig. 2. Simulation of the turbulent flow around a hull (picture produced by Blanca Pena using STAR-CCM+).

Fig. 2. Simulation of the turbulent flow around a hull (picture produced by Blanca Pena using STAR-CCM+).1.2. The development of machine learning in shipping

In the meantime, enhancement in satellite observation has allowed ships to plan their voyages based on weather observation and prediction, improving maritime sustainability and safety by choosing the most optimal route (Li et al., 2020a). During the last decade, big data in this field has been established based on geospatial data systems such as the Copernicus Marine Environment Monitoring Service (CMEMS) (von Schuckmann et al., 2018). Those systems integrate historical weather data and provide future projections to support voyage planning. On top of that, ship fuel consumption corresponding to specific weather conditions can also be recorded. Traditional manual methods may no longer handle such data; instead, ML is used to ascertain efficient integrated operations that can reduce GHG emissions. As symbolised in Fig. 3The concept of ML in the shipping industry relies on a data stream from and to the ships, which can be analysed directly through a ship bridge system or indirectly through separate computers (CIAOTECH Srl, 2020). This process can be further enhanced by incorporating data in real-time to inform dynamics and control, decision support, and performance optimisation (Anderlini et al., 2018a; Rehman et al., 2021). Apart from real-world data, CFD can be a cost-effective alternative to provide valuable data for trainomg ML and other optimising algorithms (Garnier et al., 2021; Pena and Huang, 2021). Therefore, Advanced Computing techniques, including the combination of CFD, algorithm optimisation and ML, have stepped into the shipping industry and are transforming it in a way that has never been seen before.

Fig. 3. An conceptual illustration of big-data-oriented shipping (CIAOTECH Srl, 2020).

Fig. 3. An conceptual illustration of big-data-oriented shipping (CIAOTECH Srl, 2020).The reason for shipping to need ML in the future is that ship performance is influenced by numerous factors, such as trim, draft, pitch angles, winds, waves, currents, biofouling, engine efficiency, alongside extensive hull geometric parameters. The links between these parameters are not straightforward. This makes it hard to use traditional regression techniques to perform holistic design, prediction, analysis and optimisation. The main advantage of ML is the ability to decode complex patterns, which makes it suitable for advanced shipping applications.

Compared with CFD and experiments in ship design, ML can be a complement. Industrial members (e.g. a classification organisation or a ship design company) conduct numerous projects per year which can provide quality CFD and measurement data to train ML models to have sufficient accuracy. It is envisioned that ML will have the independent ability to provide reliable assessment after sufficient training, and its capability can still be expanded through more and more projects over time. This means that a wide range of ship parameters will be included with confidence. The early design stages will see significant benefits by using ML, where vast configurations need to be tested that would be prohibitive to obtain using CFD or experiments. ML can provide rapid estimates with nonlinearities accounted for, overcoming inaccuracies in linear analytical methods that are currently used in early design stages.

For ship operation, ship route optimisation can be achieved through ML models by connecting the ship parameters with real-time climate data. The rapidity of ML will enable route optimisation in real time. Operational strategies will be improved as ML can inform these based on the engine and sea conditions. Moreover, ML models will enable continuous monitoring of voyages, providing the ability to report risks, structural fatigue and engine faults, thus improving maintenance and repair strategies over the lifecycle.

1.3. Scope and literature scan approach of this review

To facilitate the sustainable development of shipping, this article reviews how ML and its combination with other Advanced Computing have been applied in this field. Three main areas that have been found particularly relevant will be discussed in detail: ship design, operational performance, and voyage planning. The work aims to demonstrate how these techniques can be used to inform the enhancement of waterborne efficiency and eventually help achieve a zero-emission future.

The literature scan for this work was performed based on Web of Science using the words co-occurrence method, where the searching condition was “Machine Learning” occurring together with “Ship” in any paper. In total, 1050 papers were found and their distribution is shown in Fig. 4.

Fig. 4. Literature distribution of ML applications in ships, based on Web of Science (data accessed in September 2022).

Fig. 4. Literature distribution of ML applications in ships, based on Web of Science (data accessed in September 2022).Overall, it can be seen that this research field mostly started after 2015, with a majority of papers published after 2020. The keywords were then used to guide the applications of different ML methods in the three research categories (design, operational performance and voyage planning). At least one paper is detailedly reviewed for each method used in each research category. For papers using a similar approach, the selection standard is sufficient training data, which is important for the comparative purpose of this review; this will be further discussed in the following sections.

This paper is organised as follows: Section 2 covers the fundamentals of ML currently in use or with the potential to be used in the marine industry, as this is an emerging branch that is less well known than traditional methods. Section 3 reviews the marine industry's ML advancements with respect to ship design, operational performance, and route optimisation. Section 4 provides a discussion on ML's achievement in sustainable shipping, and points out the aspects that require special attention and future work. Section 5 summarises this review with its key points.

2. Machine learning fundamentals

As introduced by Kretschmann (2020), ML consists of different algorithms that learn dependencies through pattern recognition in data sets and use the identified patterns to make predictions (Nelli, 2018). The basis for solving a task is a dataset in which ML methods identify underlying relationships to give generalising rules used for completing a given task (Chollet, 2018). ML methods thus are particularly useful in determining correlations and patterns in complex data sets. In comparison to statistical methods, an advantage of ML is that it can represent both linear and nonlinear relationships without being bound by restrictive premises or assumptions of some statistical tests (Poh et al., 2018); in comparison with high-order methods such as CFD, ML can overcome the limitation of computational speed and is incorporable with real-time applications (Anderlini et al., 2020a). However, a prerequisite of any ML model is a sufficient and informative set of data to learn the inherent correlations from. In addition, a primary drawback of ML algorithms is a lack of physics-informed foundations, thus containing large uncertainty in the prediction – further discussion on this limitation will be given in Section 4.

Depending on how the learning task is achieved, ML algorithms can be classified into Supervised Learning, Unsupervised Learning, Semi-supervised Learning and Reinforcement Learning. A detailed tree diagram is given in Fig. 5and more details about each technique are covered in the following sub-sections.

Fig. 5. A tree diagram for the classification of Machine Learning, inspired by (Sarker, 2021).

Fig. 5. A tree diagram for the classification of Machine Learning, inspired by (Sarker, 2021).2.1. Supervised learning

Supervised learning consists of learning the mapping between input and output variables given sampled input-output pairs (Meijering, 2002), see Fig. 6. As labelled data is required for the process to achieve the desired goal, supervised learning is considered a task-driven approach. This is useful when a certain pattern of the data is already known, and the prediction will be more specific as undesired relationships can be filtered. There can be two types of outcomes: numerical and categorical. Numerical outputs are given as exact numbers, and categorical outputs are given as a classification, e.g. whether a ship's engine is faulty or not.

Fig. 6. Flowchart of supervised learning methods.

Fig. 6. Flowchart of supervised learning methods.Supervised learning methods date back to linear regression solutions proposed by Carl Friedrich Gauss (Meijering, 2002) and later logistic regression. Supervised learning algorithms can also be classified into two subcategories: parametric and non-parametric models. On the one hand, parametric models have a fixed number of parameters with classical methods, including linear regression, logistic regression, Least Absolute Shrinkage and Selection Operator(LASSO) (Park and Casella, 2008), Linear Discriminant Analysis (LDA) (Izenman, 2013) and ensembles of boosted (Chen and Guestrin, 2016) and bagged trees (Biau and Scornet, 2016). On the other hand, in a non-parametric model, the parameters are not given beforehand, and the ML model may identify influencial parameters during the training process. Some non-parametric models include Gaussian Process (GP), k-Nearest Neighbours (KNN) and Support Vector Machines (SVM) (Hearst et al., 1998). Parametric models are more flexible and typically present higher accuracy for small datasets, but their training cost becomes excessive as dataset size increases.

Since 2012 when AlexNet was introduced (Krizhevsky et al., 2012), classical machine learning algorithms have been superseded by deep learning methods based on Neural Networks (NN) (LeCun et al., 2015). Artificial neural networksare inspired by the biological brain and comprise multiple artificial neurons which receive a signal, process it and send it to neurons connected to it. The signal, which is represented by a number, is computed by some nonlinear functions and is assigned a weight whose value is adjusted as learning takes place (Yegnanarayana, 2009). Neurons are typically grouped into layers and the signals travel from the input layer to the output layer (a signal can pass layers multiple times). Networks with multiple hidden layers are called Deep Neural Networks (DNN) and the subject is known as deep learning. Stacking multiple layers with nonlinear activation functions enables different levels of abstraction to extract the hidden connections in the data to produce much higher prediction accuracy over classical machine learning algorithms. For instance, AlexNet provided a 9.4% improvement in prediction accuracy for image classification over the 2009 ImageNet dataset comprising 1.5M sample points and 1000 categories (Krizhevsky et al., 2012). In 2015, ResNet further improved accuracy by 12.8%–96.4% with 152 layers (He et al., 2016). Although deeper networks learn better representations, they are much more complex, e.g. AlexNet has 60,954,656 weights and 612,432,416 connections. Hence, practical and theoretical improvements have been made over the years to improve the computational performance of DNN to keep training costs within acceptable limits even for the largest datasets. For example, Amazon's reference dataset with 82M product reviews (i.e., samples) is a benchmark for natural language processing, which is a typical example of improving algorithms to handle a very large dataset with low training costs (He and McAuley, 2016). This has led to the introduction of different types of NN. The state-of-the-art methods and implementations can be found in (Zhang et al., 2021).

Typical current classification tasks involve image recognition, object detection, text digitalisation, video captioning, sentiment analysis, recommendation systems and threat detection. Deep learning is commonly used in regression tasks for market forecasting, logistics and operations planning. The last layers of the classification or regression task are typically accomplished by simple feedforward neural networks, which comprise layers with feedforward connections (Goodfellow et al., 2014). Computer vision tasks typically involve the use of Convolutional Neural Networks (CNN), with layers of incrementally decreasing size for all but the few last layers to pool resources and reduce the computational effort by sharing weights, as images can have a large number of pixels and three colour channels leading to a very large input space (Krizhevsky et al., 2012). Time series data, which is of particular interest for engineering applications, can be modelled with recurrent neural networks, where the output of the neurons is fed back into the network to maintain a memory effect. Long short-term memory is particularly popular as it solves problems associated with vanishing gradients (Hochreiter and Schmidhuber, 1997). However, recently, they have been replaced by attention-based methods, or transformers, for natural language processing whose training can be parallelised (Vaswani et al., 2017).

Supervised learning solutions typically present the highest prediction accuracy, but require correct data labelling, which can be extremely expensive for data-intensive applications.

2.2. Unsupervised learning

Unsupervised learning finds structures in non-labelled data with no human supervision required during the training, see Fig. 7. This fact makes unsupervised learning attractive in applications with a large amount of data or where data labels are simply not available (Barlow, 1989). The most known unsupervised learning techniques are clustering and dimensionality reduction.

Fig. 7. Flowchart of unsupervised learning methods.

Fig. 7. Flowchart of unsupervised learning methods.2.2.1. Clustering

Clustering or cluster analysis is a well-known unsupervised learning technique that can organise the data in clusters or groups by identifying similarities. This technique is particularly useful to identify underlying patterns which might not be visible or logical to humans. Clustering is mainly used for pattern recognition, market research, image analysis, information retrieval, robotics, or even crime analysis (Celebi and Aydin, 2016). The most known classical algorithms are k-Means Clustering (KMC) and KNN (Celebi and Aydin, 2016). KMC partitions data into k clusters; an observation belongs to the cluster with the nearest centroid, resulting in partitioning data space into Voronoi cells. On the other hand, KNN is a non-parametric model used as a classifier for clustering data; it looks at the points closest to the nearest centroid (Gareth et al., 2013). More options here can be the Expectation-Maximization (EM) method (Murray and Perera, 2021) and the DBSCAN method (Liu et al., 2021) which are increasingly active.

2.2.2. Dimensionality reduction

Dimensionality reduction is particularly useful in extracting the principal features in extremely large datasets with a complex input space. Principal Component Analysis (PCA) is a common classical method to identify the correlation between features and obtain lower-dimensional data while preserving as much of the data's variation as possible (Celebi and Aydin, 2016). Popular deep learning solutions for dimensionality reduction comprise autoencoders, which includes an encoder DNN presenting layers of decreasing side to extract the fundamental features in a latent space (Kingma and Welling, 2013). The latent space is then reconstructed into the original signals in a decoder that mirrors the encoder. Hence, the autoencoder is trained to reproduce the input signal in its output. Variational autoencoders, whose latent space is a probabilistic function, are particularly effective and represent the state of the art (Kingma and Welling, 2019). Autoencoders are extremely useful for anomaly detection and denoising the original signals.

2.3. Semi-supervised learning

Semi-supervised learning is an approach to machine learning that combines a small amount of labelled data with many unlabelled data used during training, see Fig. 8. Semi-supervised learning falls between unsupervised learning (with no labelled training data) and supervised learning (with only labelled training data). Unlabelled data, when used in conjunction with a small amount of labelled data, can produce considerable improvement in learning accuracy (Zhu and Goldberg, 2009). The acquisition of labelled data for a learning problem often requires a skilled human agent (e.g. transcribing an audio segment) or a physical experiment (e.g. determining the 3D structure of a protein or determining whether there is oil at a particular location). Thus, the cost associated with the labelling process may render large, fully labelled training sets, whereas the acquisition of unlabelled data is relatively inexpensive. In such situations, semi-supervised learning can be of great practical value. Semi-supervised learning is also of theoretical interest in machine learning as a model for human learning.

Fig. 8. Flowchart of semi-supervised learning methods.

Fig. 8. Flowchart of semi-supervised learning methods.Generative models are the most known semi-supervised learning approaches and include generative adversarial networks (Goodfellow et al., 2014) and variational autoencoders (Kingma and Welling, 2019). Generative approaches to statistical learning first seek to estimate the distribution of data points belonging to each class. The probability that a given point has a label is then proportional to Bayes’ rule. Semi-supervised learning with generative models can be viewed either as an extension of supervised learning (classification plus information) or as an extension of unsupervised learning (clustering plus some labels). Common applications include fault diagnostics to include unseen failure modes and editing of images, videos and text.

2.4. Reinforcement learning

Reinforcement Learning (RL) implies goal-directed interactions of a software agent with its environment, as shown in Fig. 9. Unlike in supervised learning, reinforcement learning paradigms do not need labelled input/output pairs to be presented, and it does not need sub-optimal actions to be explicitly corrected. Instead, the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) (Kaelbling et al., 1996).

Fig. 9. Flowchart of reinforcement learning methods.

Fig. 9. Flowchart of reinforcement learning methods.The RL environment can be formalised as a Markov Decision Process (MDP). The central elements of RL are the agent's states, an environment, a set of actions and a reward after the transition from the state to a new state. An RL agent interacts with its environment in discrete time steps. At each time, the agent receives the current state and reward. It then chooses an action from the set of available actions and then sends it to the environment. The environment moves to a new state and the reward associated with the transition is determined. The goal of a reinforcement learning agent is to learn a policy that maximises the expected cumulative reward.

Deep learning has revolutionised RL research by enabling the treatment of continuous state and action spaces as well as the incorporation of computer vision (Levine et al., 2020). Hence, deep reinforcement learning (DRL) is actively being investigated for decision making and robotics applications, including autonomous driving, humanoid robot locomotion, robot manipulation and computer games. Offline DRL, where the agent learns from pre-sampled data similarly to supervised learning, is at present a topic of high interest to mitigate the risks associated with exploration.

3. Current applications

3.1. Ship design

In terms of naval architecture, the design of a vessel constitutes an essential task to achieve superior hydrodynamic performance to minimise fuel consumption. Designing a ship relies on sophisticated experimental and computational techniques for hydrodynamic performance evaluation of multiple hull sizes and shapes. Traditionally, naval architects can perform regression analyses to predict a new ship's hydrodynamic performance based on existing hull forms. This approach can provide an approximate estimate of the new ship's performance, however, may become incapable at later stages of the design since such a regression approach normally only consider several primary parameters but does not consider advanced parameters such as highly-nonlinear hull surfaces. During a later optimisation process, which is a critical step in improving the performance of vessels, ship designers would have to rely on their personal experience to revise the hull plan. This largely depends on the designer's skills and makes it hard to find the optimal configuration.

Therefore, a strong impetus has aimed at turning a tedious ship design procedure into a much simpler process. Such efforts have been facilitated by the fast development of Artificial Intelligence (AI) and the availability of HPC, so that now an ML-assisted ship design process has become realistic. The first application of ML in this regard could be considered Holtrop and Mennen's empirical algorithms that present a statistical method to approximate the ship resistance based on the results of multiple model basin tests (Holtrop and Mennen, 1982; Holtrop, 1984). This method is only applicable to hull forms resembling an average ship described by the main hull dimensions and form coefficients used to build the regression. Because of the limitation of this method, it is essential to emphasise that the calculated resistance tends to deviate from the actual hull resistance significantly (Holtrop and Mennen, 1982; Holtrop, 1984). Therefore, this method is only recommended during the concept design stage.

Other pioneers in the area of assisted ship design were Ray et al. (1995). They for the first time presented a global optimum strategy in ship design. Specifically, separate optimisations in resistance, weight, freeboard, building cost, and stability were integrated together by developing a system handler. Classic naval architecture equations were used in the calculation, and constraints were added according to the sailing requirements of a 136-TEU containership. Nonetheless, the optimisation objectives share equivalent weights in the decision-making process, and a more intelligent decision-making strategy was pointed out by the authors as future work. Another limitation of this work was that only one candidate hull was considered due to the technology status of that time.

Yu and Wang (2018) revolutionised the ship design process by creating extensive hull geometries using a principal-component analysis approach. Extensive hull forms were evaluated for their hydrodynamic performance, and the results were then used to train a DNN to accurately establish the relation between different hull forms and their associated performances. Then, based on the fast, parallel DNN-based hull-form evaluation, an optimal hull form is searched for a given operation condition. Using this approach, the authors showed a novel application of ML in ship design and optimisation, which allows the creation of an extensive database and getting fast results through searching. Ao et al. (2021) advanced the state of the art by developing a DNN which uses a Fully Connected Neural Network technique to predict the total resistance of ship hulls in its initial design process based on control points of the CAD geometry. The flowchart of Ao et al.’s work is given in Fig. 10. The authors reported that the average error was lower than 4% when compared to CFD data. The high accuracy can be attributed to the fact that Ao et al. model relies on hundreds of control points on the CAD geometry as input whereas other models such as Yu and Wang (2018) only relied on principal parameters of the hull. Similarly, Abbas et al. (2022) developed a geometry-based DNN approach to link predict the wind resistance of ships, and the ML predicted results are highly close to CFD results. Nonetheless, in the three papers here, only resistance was considered. If this approach is coupled with other performance parameters such as stability and structural integrity, it has the potential to become a popular automatic ship design approach.

Fig. 10. Ship design and optimisation procedure of Ao et al. (2021).

Fig. 10. Ship design and optimisation procedure of Ao et al. (2021).