1. Introduction

Societies around the world rely on complex systems to deliver essential goods and services ranging from water, food, and energy to health, transportation, and communications. Global figures for these goods and services are staggering, and the demand for them is expected to grow in the future. For example, about 90% of the global population enjoyed access to at least basic drinking water services in 2017 [1], while nearly six billion people are expected to suffer from clean water scarcity by 2050 [2]. Developed countries have vast interconnected water infrastructure systems that deliver high-quality drinking water services to their population: For example, in England and Wales in the United Kingdom, there are more than 1000 water treatment works producing drinking water, with 346 000 km of water networks serving more than 26 million households [3], while in the Netherlands, drinking water networks stretch over 120 000 km and serve over eight million households [4]. The size, complexity, long life-cycle, and critical nature of the service provided by this infrastructure make the planning and management of these systems extremely difficult.

Over the last two decades, modern digital technology has evolved rapidly, and its applications have transformed society by improving the planning and management of complex systems in, for example, the banking, transportation, marketing, entertainment, and tourism sectors, to name but a few. By taking advantage of large-scale and widespread data acquisition, cloud computing with increasingly sophisticated forecasting capabilities, and global connectivity through the Internet and the Internet of Things (IoT), many novel applications have been developed and implemented. For example, the digital transformation of retail banking has led to huge changes in how people transact and organize their finances by using online and mobile tools. Similarly, digital technology has allowed organizations such as Uber to establish a new business model and, by doing so, disrupt the transportation industry. Various other organizations including Google, Amazon, Airbnb, Netflix, and Apple have also taken advantage of digital technology in a number of sectors. Two sectors, namely the aviation and car industries, are particularly interesting from the standpoint of digital technology, not only because safety is of paramount importance in these sectors, but also because they have made the most significant strides toward full automation. Although enormous effort and investment have gone into these systems in order to enable them to perform actions alone, most of the systems must still interact with humans who supervise the work, direct and oversee system performance, or collaborate with the systems [5].

However, the introduction of digital technology and the drive for increased automation have also contributed to several high-profile failures related to how these technological advances have been used. Examples include the case of Tesla Autopilot being unable to prevent several car collisions [6] and the case of the Boeing 737 MAX and its Maneuvering Characteristics Augmentation System (MCAS) software, which is suspected to have played a role in two aircraft crashes with significant loss of life [7]. By identifying what went wrong with the new technology in such cases, it should be possible to learn from these events and prevent future failures.

It is not surprising that, following digital technology adoption in various areas of human activity, the water sector has begun to benefit from digital transformation [9], [10]. Advances in the field of hydroinformatics and, most notably, the application of artificial intelligence (AI), have started to make their mark on the water sector and its management [9], [10], [11], [12], [13], [14]. However, digitalization progress in the water sector is lagging behind other areas due to several challenges, including legacy data collection and management systems, difficulties in establishing a clear business case for digital applications, cybersecurity issues, and the “human factor” [9].

Over the last two decades, “forensic engineering” has made substantial strides in applying engineering principles and analytic methods to the investigation of failures of, or other performance problems with, engineering systems [15], [16]. A forensic investigation aims to identify the root cause of a failure, and may thereby prevent similar failures in the future. Early examples of forensic engineering include investigations of failures that led to important changes in bridge design, construction, and inspection, such as the Tay Bridge disaster of 1879 [16] and the Tacoma Narrows Bridge collapse of 1940 [17].

The aim of this paper is twofold: ① to identify key digital technology advances that have found application in the water sector; and ② to apply forensic engineering principles to failures that have been experienced in fields that are further ahead on the journey toward digital transformation and automation, in cases where a digital component played a significant role. The objective is to learn from those mistakes and avoid repeating them in the water sector.

2. Advances in water sector digitalization

2.1. Smart irrigation

Accounting for 70% of water use worldwide, agricultural irrigation is the largest user of water and plays an important role in food security [18]. In many areas of the world, intensive flood irrigation and groundwater pumping for crop irrigation deplete aquifers beyond their natural recharge levels, thus affecting water security. Therefore, there is an urgent need to use digital technology to inform more efficient use of water resources and better decision-making. The adoption of digital technology will lead to smart irrigation systems that can provide the necessary amounts of water to crops by adjusting to local conditions, such as temperature, humidity, and soil moisture content.

One of the key digital advances in irrigation management is the use of remote-sensing data. Remote sensing refers to non-contact measurements of the radiation reflected or emitted from agricultural fields, and can be conducted by satellites, aircraft (both manned and unmanned systems), tractors, and hand-held sensors [19]. The proliferation of remote-sensing measurements leads to large quantities of data—the so-called big data—which creates a need for the capability to rapidly process and analyze massive amounts of highly variable data types in order to provide new insights [20]. Thanks to recent advances in remote sensing and AI, field-scale phenotypic information can be quantified accurately and integrated with big data to develop predictive and prescriptive management tools [21].

A common application of remote sensing is to estimate crop water requirements. These applications are based on predictive models, which compute crop water requirements by applying algorithms based on various types of data, energy balance, aerodynamics, and radiation physics [22]. The models use a combination of data types, including data from satellite images (e.g., surface temperature, surface albedo, and vegetation index), data from weather sensors (e.g., air temperature, wind speed, and humidity), and geographical data (e.g., digital elevation and land cover). One example of this approach is the FruitLook service, an online tool that uses satellite and geographical data to help fruit and wine farmers in the Western Cape of South Africa to optimize water use and improve productivity [23].

Almost half of the producers using the FruitLook service reported cutting their water use by at least 10%, while some reported a 30% reduction in water consumption [23]. With 700 regular users, including producers, consultants, and researchers who are benefitting from the data available on approximately nine million hectares of the Western Cape [24], the FruitLook system is actively used to improve the management of crop production.
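To make the satellite-derived "vegetation index" input more concrete, the sketch below computes the normalized difference vegetation index (NDVI), one widely used index of this kind, from near-infrared and red reflectance. The source does not state which index FruitLook or the cited models use, so the choice of NDVI, the function name, and the reflectance values are assumptions for illustration only.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index from NIR and red reflectance."""
    total = nir + red
    if total == 0:
        # Assumption: a pixel with no signal in either band is reported as 0.
        return 0.0
    return (nir - red) / total


# Hypothetical surface reflectance values (NIR, red) for two pixels.
pixels = {"dense canopy": (0.50, 0.08), "bare soil": (0.30, 0.25)}
ndvi_by_pixel = {name: ndvi(nir, red) for name, (nir, red) in pixels.items()}
```

Healthy vegetation reflects strongly in the near-infrared and absorbs red light, so the canopy pixel yields a much higher value than the bare-soil pixel. A crop water model would combine such an index with the temperature, albedo, and weather inputs listed above.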

2.2. Smart urban water

The share of the global population living in urban settlements is expected to increase to 70% of 9.7 billion by 2050 [2]. In addition to urbanization, population growth, climate change, and resource constraints create enormous challenges to urban infrastructure planning and management, including water and wastewater services. Urban water systems are generating an increasing volume of sensing data due to the proliferation of heterogeneous sensors and widespread data acquisition. Dealing with the inevitable data explosion requires data analytics and new AI methods to harness, explain, and exploit the structural and dynamic characteristics of the data in networked, interconnected, and inherently complex water systems [25].

Originally restricted to scientific circles [26], [27], [28], hydroinformatics and digital water tools have found their way into the world of water utilities [9]. It is beyond the scope of this paper to list all of these tools, but some mature technologies are introduced here, including the use of machine learning (ML) for anomaly detection in buried infrastructure, nature-inspired optimization tools for the planning and management of water infrastructure systems, and digital twins.

2.2.1. Machine learning for anomaly detection in water distribution systems

Reducing non-revenue water—that is, water that has been abstracted, treated, and pumped into distribution, but not delivered and billed to the customer (i.e., “lost water”)—is one of the key drivers for water utilities worldwide. However, given the size and complexity of water networks and the fact that they are mostly located below ground, it is extremely difficult to identify where these water losses occur. As part of a comprehensive event management system, the UK water company United Utilities has invested in an event detection tool to provide near-real-time, actionable alerts for events such as leaks, bursts, pressure or flow anomalies, and sensor faults or telemetry problems [29].

The anomaly detection system combines several self-learning AI and ML techniques along with statistical data analysis tools to automatically process the pressure and flow sensor data collected in the network every 15 min [30]. The data is then used by an artificial neural network (ANN) to forecast near-future values, which are analyzed and compared with new observations in order to detect any significant deviations. Statistical process control techniques are also used for the analysis of pressure and flow deviations from the predicted signals. The gathered evidence is then fed through a Bayesian network to analyze the evidence for multiple event occurrence [30]. This process provides an estimate of the likelihood of event occurrence and feeds into the detection alarm mechanisms. The system uses historical events to improve the detection of future events through a set of self-learning methodologies. Most importantly, the event detection system does not require the use of a hydraulic model of the water network.
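The forecast-compare-flag loop described above can be sketched in a few lines. This is a simplified illustration and not the system reported in Refs. [29], [30]: a seasonal-mean forecast stands in for the ANN, a fixed k-sigma control limit stands in for the statistical process control step, and the Bayesian evidence combination is omitted. All names, parameter values, and the test signal are hypothetical.

```python
from statistics import mean, stdev


def seasonal_forecast(history, period):
    """Forecast the next reading as the mean of past readings from the same slot in the cycle."""
    slot = len(history) % period
    return mean(history[slot::period])


def detect_deviations(readings, period, train_periods=4, k_sigma=3.0):
    """Return the indices of readings whose forecast residual breaches the control limits."""
    calibration_end = period * train_periods
    residuals, alerts = [], []
    for i in range(period, len(readings)):
        residual = readings[i] - seasonal_forecast(readings[:i], period)
        if i < calibration_end:
            residuals.append(residual)  # build up the "normal" residual sample
            continue
        centre, spread = mean(residuals), stdev(residuals)
        if abs(residual - centre) > k_sigma * spread:
            alerts.append(i)
        else:
            residuals.append(residual)  # only normal readings update the limits
    return alerts


# Hypothetical pressure signal: a repeating 4-step pattern with small noise
# and a sudden jump injected at index 21.
pattern = [10.0, 12.0, 15.0, 11.0]
noise = [0.1, -0.1, 0.05, -0.05, 0.0]
readings = [pattern[i % 4] + noise[i % 5] for i in range(24)]
readings[21] += 5.0
alerts = detect_deviations(readings, period=4)
```

With 15 min data, a daily cycle would correspond to `period=96`. Like the system described above, this approach relies only on the sensor signal itself and does not need a hydraulic model of the network.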

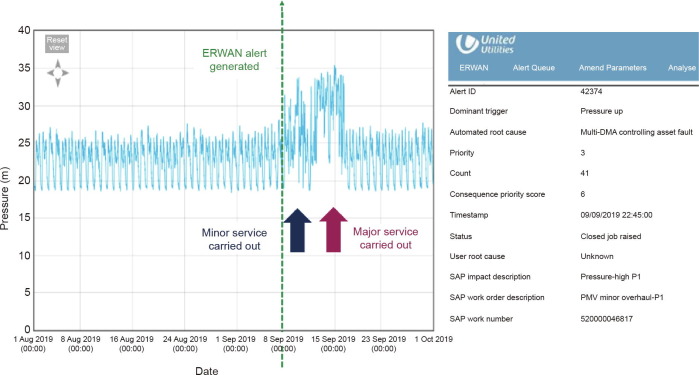

Fig. 1 shows how the output of the event detection system has been integrated into the interface of the Event Recognition in the Water Network (ERWAN) system at United Utilities. In the case shown here, the anomaly has been identified and two interventions have been arranged (minor and major service), which have prevented more serious failures in the network resulting from a significant increase in pressure. The ERWAN system has been used operationally across United Utilities’ networks since 2015 and has proven to be robust and scalable. It processes data from over 7500 pressure and flow sensors every 15 min and detects events such as pipe bursts and leaks in a timely and reliable manner with high true and low false alarm rates [29].

Fig. 1. An ERWAN system alert indicating a sudden pressure increase, likely due to a faulty pressure-reducing valve [29]. DMA: district metered areas.

2.2.2. Image processing and machine learning for fault classification in wastewater systems

Wastewater infrastructure must be regularly inspected in order to plan for maintenance or replacement. As such inspection is normally expensive and time-consuming, only a relatively small proportion of a wastewater system is inspected using closed-circuit television (CCTV). Myrans et al. [31] presented a methodology that can automatically identify a wide range of fault types in sewers using image processing and ML applied to raw CCTV footage. The procedure applies a random forest ML technique to identify the fault type in sewer systems. This innovative methodology has been validated and demonstrated on the CCTV footage collected by a UK water company. The results show a peak accuracy of 74% when applied to a real-world sewer system.

2.2.3. Cellular automata systems for flood management applications

Surface-water flooding occurs when rainfall overcomes drainage systems, which cannot fully convey water during intense precipitation. Modern mapping technologies, such as synthetic aperture radar, aerial digital photogrammetry, and light detection and ranging (LIDAR), offer significant improvements in data availability and accuracy for new, much-needed flood modeling applications in urban areas [32]. However, although the widespread availability of high-resolution digital elevation models now makes flood modeling over large urban areas possible, common simulation tools cannot handle it efficiently and effectively. In particular, computational efficiency is an issue when models are used in flood risk assessment or uncertainty and mitigation analyses, for which repeated simulation runs are required [33]. Fast flood modeling for large-scale problems has been achieved using the two-dimensional cellular automata model reported in Ref. [34]. By combining cellular automata with the massively parallel computational power of graphics processing units (GPUs), the authors achieved an order of magnitude increase in processing speed when applying the new model to benchmark and real-world case studies.
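The idea behind a cellular automata flood model can be illustrated with a toy example: each grid cell looks only at its four neighbours and passes water to those with a lower water surface, and all cells are updated simultaneously. The sketch below is a deliberately simplified assumption, not the transition rule of the model in Ref. [34]; the `relax` factor, the grid, and the function name are hypothetical, and the code runs sequentially rather than on a GPU.

```python
def ca_step(elev, depth, relax=0.25):
    """One synchronous update: every wet cell sends water to lower neighbours."""
    rows, cols = len(elev), len(elev[0])
    delta = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if depth[r][c] <= 0.0:
                continue
            level = elev[r][c] + depth[r][c]
            # Positive water-level drops to the four von Neumann neighbours.
            drops = {}
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    drop = level - (elev[nr][nc] + depth[nr][nc])
                    if drop > 0.0:
                        drops[(nr, nc)] = drop
            if not drops:
                continue
            # Never move more water than the cell currently holds.
            outflow = min(depth[r][c], relax * max(drops.values()))
            total_drop = sum(drops.values())
            for (nr, nc), drop in drops.items():
                share = outflow * drop / total_drop
                delta[nr][nc] += share
                delta[r][c] -= share
    return [[depth[r][c] + delta[r][c] for c in range(cols)] for r in range(rows)]


# Hypothetical 3 x 3 terrain: a pit in the centre, water released at one corner.
elevation = [[2.0, 2.0, 2.0], [2.0, 0.0, 2.0], [2.0, 2.0, 2.0]]
depth = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
for _ in range(200):
    depth = ca_step(elevation, depth)
total_volume = sum(map(sum, depth))
```

Because each cell's update depends only on the previous state of its immediate neighbours, all cells can be processed independently within a step, which is what makes this class of model well suited to massively parallel hardware. The grid edges act as closed boundaries here, so the total water volume is conserved.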

2.2.4. Nature-inspired optimization

The use of nature-inspired algorithms in the water sector has been well documented [28], [29]. These optimization algorithms have found application in various fields, from the design of rehabilitation planning [35] to the optimal system operation of urban water infrastructure [36]. The advent of nature-inspired computing in the late 1980s and early 1990s, with the initial successes of evolutionary computing algorithms, swarm intelligence, and ANN, resulted in more complex urban water infrastructure problems being tackled. Contributions in the field have progressed from an economic-driven single-objective framework to multi-objective models that do not result in a single solution, but rather in a set of tradeoff solutions, typically referred to as non-dominated or Pareto-optimal solutions [36].
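The notion of a non-dominated (Pareto-optimal) set can be made concrete with a short sketch. It assumes that every objective is to be minimized; the candidate solutions and their objective values are hypothetical, and the filter shown here is only the final selection step, not a nature-inspired search algorithm in itself.

```python
def dominates(a, b):
    """True if `a` is no worse than `b` in every objective and strictly better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(solutions):
    """Keep only the solutions that no other solution dominates."""
    return [s for s in solutions if not any(dominates(o, s) for o in solutions)]


# Hypothetical (cost, supply deficit) pairs for six candidate designs.
candidates = [(100, 8.0), (120, 5.0), (150, 5.5), (200, 2.0), (90, 9.5), (130, 8.5)]
front = pareto_front(candidates)
```

Here the designs (150, 5.5) and (130, 8.5) are dropped because another design is both cheaper and has a smaller deficit. The remaining four designs form the tradeoff set from which a decision-maker would choose.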

An interesting application of nature-inspired algorithms to water network management is to determine the optimal location of pressure sensors and flow meters for leak detection and localization. A practical application of this approach to a real network in the Netherlands has been reported by Ref. [37]. The multi-objective optimization explored the tradeoff between the minimization of the number of sensors to be installed and the maximization of detection coverage. The network in question was selected as a pilot to determine the optimal number and location of up to 20 pressure sensors to complement the existing 10 sensors, in order to maximize the likelihood of leakage detection and the extent of the network covered by these sensors. For each additional sensor, the results obtained showed the optimal location and coverage in terms of customer connections, the percentage of leaks detected, and the pipe length of the network covered by the sensors. At the extreme of all 20 new sensors being installed, a comparison with the original 10 sensors showed that the coverage in terms of customer connections would grow from 11 411 (26.5% coverage) to 22 967 (53.2%), the percentage of leaks that could be detected would improve from 26.2% to 48.5%, and the pipe coverage in terms of pipe length would increase from 236.75 km (25.1%) to 415.55 km (44%). These findings are in line with the outcomes of the design challenge titled “Battle of the Water Sensor Networks (BWSN),” which demonstrated the advantages of using multi-objective optimization for sensor placement in water distribution networks [38].

2.2.5. Digital twins

Digital twins can be used as decision-support tools for operational water system management, and are increasingly finding applications in the water sector. A digital twin is a virtual digital copy of a real system that is continuously updated with data to mimic the system’s past, present, and future behavior [39]. Application of the digital twin concept to a water supply system serving a population of 1.6 million in the city of Valencia, Spain, showed how a digital twin could be used to simulate various network operating conditions. The challenges of creating a digital twin of a network consisting of 113 000 pipes and numerous other elements (e.g., eight reservoirs, 28 tanks, 47 pumps, 259 regulating valves, 48 500 manual valves, 4600 hydrants, 118 000 service connections, 97 flowmeters, and 470 pressure gauges) are considerable [39]. Due to the size of this large and complex system, the authors had to create a strategic model of about 10 000 pipes (about 10% of the original size) in order to create a computationally efficient digital twin. The outcome was encouraging, as with only 600 sensor measurements (of pressure, flow, and levels), it was possible to gain a real-time understanding of the behavior of the water supply system in a 10 000-node strategic model.

Digital twin developments open up a new avenue for a rich, immersive, and playful modeling experience that can engage various stakeholders through serious gaming [40] and augmented, virtual, or mixed augmented/virtual reality [8]. Through these technologies, different stakeholders (e.g., operational and planning staff, users, and regulators) can learn about the complex behavior of water systems, experiment safely using a digital twin, understand different and often conflicting points of view, and develop strategies for finding more sustainable solutions for complex systems.

2.2.6. Robotics

Robots with sensing and AI capabilities are increasingly used in manufacturing and in hazardous situations where human life can be endangered, such as search-and-rescue missions and military operations. Robots can also perform some human tasks faster and much more consistently and accurately than humans, which has raised the prospect of job losses in various industries, and particularly in manufacturing. In the water sector, robotic devices have found application in water quality modeling via autonomous underwater and surface-water vehicles [41]. Such robots can provide better spatial coverage of water quality data in large areas, at various depths, and in real time. Another important research and application area for robotic devices is the assessment of the structural condition of underground water assets. Due to their age, pipes are prone to faults (e.g., leaks, breaks, blockages, and collapses) but are difficult to inspect. Pipe condition can normally be inspected by nondestructive means using CCTV cameras or by destructive methods, such as removing a short pipe section for inspection. However, these are costly procedures that can cause service interruptions, and only a small percentage of the network can be inspected using them. Robots can provide a systematic condition assessment of underground pipes to support asset management planning [42]. Increasingly, the use of robotic devices for the inspection of buried pipelines, such as autonomous and pervasive “swarm” robots, has captured the imagination of both the research community and water asset managers [43]. It is only a matter of time before the first commercial implementation of such devices occurs.

3. Forensic analysis of failures involving digital solutions and automation in transportation

3.1. Autonomous cars

Since the first challenge by the Defense Advanced Research Projects Agency (DARPA) in 2004, there has been a great deal of interest in research and industry in autonomous or “self-driving” cars. Nowadays, it is more accurate to call the software controlling such vehicles driver-assistance systems (DASs), as they help drivers by acting autonomously or alerting them to potential problems and avoiding collisions, but cannot be considered to be fully automated. Tesla is the leading producer of plug-in electric cars. The software in Tesla cars—the so-called Tesla Autopilot—can match speed with traffic conditions, keep within a lane, change lanes, transition from one road to another, exit the road when the destination is near, self-park when near a parking spot, and be summoned to and from the user’s garage [44].

However, the rapid development of self-driving cars has also resulted in a few high-profile collisions involving cars trialed by Tesla, Google, and Uber, which are the most prominent companies trialing DASs. Some of those collisions resulted in fatal outcomes. A number of the collisions occurred due to the DAS being unable to recognize a stationary obstacle on the road (e.g., when a Tesla car collided with a parked fire truck), to understand and give the right of way to other vehicles, or to detect and avoid pedestrians. Notably, in almost all of the accidents, the DAS was engaged and the driver was not paying attention to the road. Another factor that might be contributing to the risk of collision is the limitations of the technology used to feed data into DASs. The standard combination of sensors includes LIDAR systems (laser-based ranging systems that can create detailed maps of roads), radio detection and ranging (RADAR) systems (which detect distant objects and their velocities), and high-resolution cameras that acquire visual information (e.g., traffic signs or whether a traffic light is red or green). Tesla cars, for example, rely on cameras and RADAR to provide environmental information to the DAS, but do not use a LIDAR system. This may or may not have contributed to the collisions experienced by the Tesla cars. Finally, because theirs is not a fully autonomous self-driving system, Tesla warns drivers that they are ultimately responsible for the vehicle’s behavior on the road.

3.2. Airplane autopilot system

With more than 50 years of service and over 15 000 planes sold, the Boeing 737 is the best-selling aircraft in the world. Its upgrade, the 737 MAX, which has larger, more fuel-efficient engines and updated avionics, has a longer range and a lower operating cost. The upgraded aircraft was expected to have enough in common with previous models to not need additional lengthy certification, and it was anticipated that pilots would be able to operate it without the simulator training required for new aircraft. Since the aircraft first entered service in 2017, the 737 MAX has become Boeing’s fastest-selling airliner of all time, with 5000 orders from over 100 airlines worldwide [45].

However, two fatal crashes of the 737 MAX in 2018 and 2019, within five months of each other, which killed a combined 346 people, led to questions about the safety of the 737 MAX and, subsequently, to the worldwide grounding of the aircraft in March 2019 [44]. In both crashes, the aircraft was gaining altitude shortly after takeoff, while the pilots tried to maintain the upward angle in order to gain altitude at the required rate [46]. A key factor that led to the crashes was the set of aircraft design choices, which included the use of larger engines that were positioned further forward and higher up on the wing than on the predecessor aircraft. The new engine size and position changed the aerodynamics of the plane and created the possibility that the aircraft nose could pitch upward in some situations, such as during low-speed flight after takeoff, when the plane was being flown manually [45]. Such an upward pitch increased the risk of the aircraft stalling. The two accidents happened shortly after takeoff, while the aircraft was gaining altitude, as the onboard software (MCAS) repeatedly engaged and forced the aircraft to nosedive [46]. This finding pointed to a potential flaw in MCAS, which was designed to activate automatically and stabilize the aircraft by nudging its nose back down. The situation was complicated by the fact that MCAS was designed to use information from only one of the two angle-of-attack sensors, which made it vulnerable to erroneous sensor readings. A further contributing factor in both crashes was that the pilots were not fully aware of MCAS’s existence and of how the system functioned, and may not have been told about it at all.
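The vulnerability of single-sensor logic to an erroneous reading can be illustrated with a generic sketch that contrasts it with a simple two-sensor cross-check. This is not the MCAS implementation or its subsequent redesign; the function names, the activation limit, and the disagreement tolerance are hypothetical values chosen only to show the principle.

```python
def single_sensor_activation(aoa_deg, limit_deg):
    """Activate whenever the one monitored sensor reports an angle above the limit."""
    return aoa_deg > limit_deg


def cross_checked_activation(left_deg, right_deg, limit_deg, max_disagreement_deg):
    """Activate only if both sensors broadly agree and their mean exceeds the limit."""
    if abs(left_deg - right_deg) > max_disagreement_deg:
        # The readings conflict, so at least one is suspect: inhibit the
        # automatic action rather than act on possibly erroneous data.
        return False
    return (left_deg + right_deg) / 2.0 > limit_deg


# Hypothetical settings and readings (degrees); the left sensor is faulty.
LIMIT_DEG = 15.0
MAX_DISAGREEMENT_DEG = 5.0
faulty_left, healthy_right = 40.0, 5.0

acts_on_fault = single_sensor_activation(faulty_left, LIMIT_DEG)
inhibited = not cross_checked_activation(
    faulty_left, healthy_right, LIMIT_DEG, MAX_DISAGREEMENT_DEG
)
```

With only two sensors, a cross-check can reveal that the readings disagree but cannot tell which one is wrong, so the conservative response in this sketch is to withhold the automatic action and leave control with the human operator. The same principle applies to any automated water system that acts on a single pressure, flow, or level signal.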