1. Introduction

Sensing means detecting objects and responding to physical changes in them. For example, when someone moves through a room in a smart home, a sensor detects the change in infrared radiation and activates the alarm system. A sensor can interact with its environment after collecting and processing information, and that information can be stored on the device or shared with other devices.

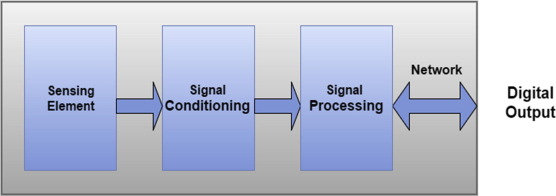

Distributing sensors in a network offers the same advantages as a traditional computer network: information sharing, higher performance, availability, reliability, and protection against system failure. Using more than one sensor to detect an object increases the signal-to-noise ratio (SNR), that is, the level of the desired signal relative to the background noise, and improves the chance of a line of sight. Distributed sensors can be wired or wireless [1]. Fig. 1 illustrates the components of a smart sensor.

Fig. 1. Smart sensor configuration.

A wireless sensor network (WSN) is a set of sensors, or nodes, connected to each other through wireless links. Their small size, low cost, and low power consumption, together with continuous progress in wireless communication, have driven a massive increase in wireless sensor applications for monitoring patients, measuring changes in environmental phenomena, detecting enemies, and guarding homes. Wireless sensor networks are applied in a variety of fields where conditions are complicated, such as transportation and industrial applications, precision agriculture and animal tracking, environmental monitoring, security and surveillance, health care, smart buildings, and energy control systems. Despite this progress, wireless sensor networks still face many challenges, such as power consumption, data storage, data aggregation, time synchronization, radio interference, and localization [1].

In most applications, sensors detect or monitor objects and send information to a central node or to a neighbor node. If the position of the sensed object is unknown, the information is meaningless. Deploying GPS in each node is expensive and power consuming. GPS also relies on a direct connection from the sensor to the satellite, which makes it a poor choice for indoor or underground deployments [2]. Determining the position of nodes in a WSN is therefore a must for many applications. Tracking objects first requires identifying the coordinates of the sensor nodes. Routing protocols that rely on localization instead of route discovery save energy [1], [2], [3]. Moreover, knowing the position of nodes can help prevent attacks on the network and improve security. Node localization also helps in measuring the range of network coverage, and addressing nodes and building routing tables in a sensor network depend on locating nodes. Node localization is used for coverage, routing, and target tracking; for monitoring environmental phenomena such as temperature, pressure, and humidity; for animal habitats, agriculture, and underwater environments; for health care, such as monitoring patients and medical staff; for traffic; and for disaster areas such as minefields [2].

2. Problem formulation

Wireless sensors are randomly deployed in the environment to be monitored or tracked. The position of a sensor may or may not be known. To obtain the required information about the target environment, the location of each node in the network must be identified precisely. The localization process, shown in Fig. 2, is divided into two phases. In the first, known as the ranging or estimation phase, each node estimates its distance from a neighbor node based on the time of arrival or the strength of the signal. The second phase uses the estimated distances to compute the position of the node through a set of equations or optimization algorithms.

Fig. 2. Localization process schema.

Assume a randomly deployed network that consists of a set B of sensors with predefined positions (beacons), expressed in two-dimensional coordinates, and a set N of sensors with unknown locations. The distance between an unknown node and a beacon node can be calculated with the Euclidean equation shown in Eq. (1).

$$d_{ij} = \sqrt{(x_i - x_j)^2 + (y_i - y_j)^2} \tag{1}$$

where

- $i \in B$
- $j \in N$
- $x_i, y_i$ are the coordinates of beacon node $i$.
- $x_j, y_j$ are the coordinates of unknown node $j$.

Because of many factors in the surrounding environment, there will be a difference between the calculated locations and the real locations. The estimation error can be calculated as shown in Eq. (2).

$$e = \frac{1}{n}\sum_{k=1}^{n}\left(\hat{d}_k - d_k\right)^2 \tag{2}$$

where

- $\hat{d}_k$ is the estimated distance.
- $d_k$ is the real distance.
- $n$ is the number of network nodes.

The objective of any localization algorithm is to minimize the error between calculated and real position [2], [3], [4].
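The two phases and the error measure can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method of any cited work: ranging is simulated with noise-free Euclidean distances as in Eq. (1), the position phase uses plain three-beacon trilateration (one possible choice of "equations"), and the error is assumed to be the mean squared difference between estimated and real distances. All function names are hypothetical.

```python
import math

def euclidean_distance(beacon, node):
    """Distance between beacon i and node j, as in Eq. (1)."""
    (xi, yi), (xj, yj) = beacon, node
    return math.hypot(xi - xj, yi - yj)

def trilaterate(beacons, distances):
    """Position phase: solve for (x, y) from three beacons and three ranges.

    Subtracting the first circle equation from the other two leaves a
    2x2 linear system, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = beacons
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("beacons are collinear; position is not unique")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det

def mean_squared_range_error(estimated, real):
    """Assumed error measure: mean of squared (estimated - real) distances."""
    if not real or len(estimated) != len(real):
        raise ValueError("need two non-empty lists of equal length")
    return sum((e - r) ** 2 for e, r in zip(estimated, real)) / len(real)

beacons = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
true_position = (3.0, 4.0)
# Ranging phase, simulated here without measurement noise.
ranges = [euclidean_distance(b, true_position) for b in beacons]
# Position phase.
estimate = trilaterate(beacons, ranges)
```

With noisy ranges the three circles no longer meet in one point, which is why the later sections turn to optimization methods that minimize the error instead of solving the equations exactly.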

3. Classification of measurement techniques

Localization can be classified according to many aspects. The computation may be centralized in one node or distributed across nodes. Sensors may be static or mobile. Locations may be calculated in absolute coordinates or relative to reference nodes in the network. Algorithms may adopt two- or three-dimensional coordinates. The most common classification divides the distance-estimation phase into range-based and range-free techniques [5], [6], [7], [8], [9], [10], [11], [12], [13], [14]. Based on the previous description, a localization taxonomy is introduced in this paper, as shown in Fig. 3.

Fig. 3. Localization taxonomy (measurement techniques).

Range-free and range-based algorithms are the best-known algorithms for node localization. They cover a wide range of characteristics: Received Signal Strength Indicator is the cheapest technique, and Centroid consumes the least power. This section gives an overview of these two types of measurement.

3.1. Range based algorithms

Range-based algorithms use point-to-point distance or angle to calculate node position. However, they require additional hardware in the estimation phase of localization. Three range-based techniques are described below.

3.1.1. Angle-of-arrival

The angle-of-arrival (AOA) technique measures the difference in direction of a single radio wave received by several antennas. AOA suffers from multipath and shadowing, and a small measurement error may cause a large localization error. Acceptable accuracy requires large antenna arrays, which means additional hardware and power consumption. As a result, the method has received little attention from researchers and is not feasible in real applications [3], [4], [5], [6].

3.1.2. Time difference of arrival

In Time Difference of Arrival (TDOA), the distance between sender and receiver nodes is calculated from the difference between the arrival times of a signal at two receivers and the known propagation speed in the medium. Accuracy is affected by temperature and humidity as well as by synchronization error. TDOA gives good accuracy when sender and receiver nodes are well calibrated before sending. It is less suitable for low-power sensor network devices [4], [7], [8].

3.1.3. Received Signal Strength Indicator

Received Signal Strength Indicator (RSSI) measurements rely on the strength of the received signal to estimate the distance between nodes. RSSI is a cheap technique that needs little hardware; however, its estimation performance is degraded by multipath propagation of radio signals caused by reflection, refraction, and diffraction [3].
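The text does not name a propagation model for turning signal strength into distance. One common choice, assumed here purely for illustration, is the log-distance path-loss model, in which the distance grows exponentially with the drop in received power relative to a reference reading. The function name and the default parameter values (reference RSSI of -40 dBm at 1 m, path-loss exponent of 2) are hypothetical and would have to be calibrated for a real deployment.

```python
def rssi_to_distance(rssi_dbm, ref_rssi_dbm=-40.0,
                     path_loss_exponent=2.0, ref_distance_m=1.0):
    """Estimate distance in meters from an RSSI reading.

    Assumes the log-distance path-loss model:
        rssi = ref_rssi - 10 * n * log10(d / d0)
    solved for d. All default values are illustrative assumptions.
    """
    if path_loss_exponent <= 0:
        raise ValueError("path-loss exponent must be positive")
    exponent = (ref_rssi_dbm - rssi_dbm) / (10.0 * path_loss_exponent)
    return ref_distance_m * 10.0 ** exponent

# A reading 20 dB below the reference maps to ten times the reference
# distance when the exponent is 2.
distance = rssi_to_distance(-60.0)
```

Because multipath effects shift the RSSI by several dB, and the model is exponential in that value, small signal fluctuations translate into large distance errors, which matches the weakness noted above.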

3.2. Range free algorithms

Range-free algorithms need no distance calculation; they use only network information, such as the number of hops, in the estimation phase of localization. The following sections present different range-free schemes.

3.2.1. Centroid scheme

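The center of a geometric shape is the mean of all the points that form the shape. Many algorithms use the coordinates of anchor nodes and the center of the shape they form to estimate the location of unknown nodes [3], [4], [5].

$$(x_{est}, y_{est}) = \left(\frac{x_1 + \dots + x_N}{N}, \frac{y_1 + \dots + y_N}{N}\right) \tag{3}$$

where $N$ is the number of sensors and $(x_{est}, y_{est})$ are the coordinates of the unknown node.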

The centroid scheme is considered the least accurate range-free algorithm; however, its performance remains independent of node density. It also has the smallest communication overhead and is easy to implement [5].
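The centroid estimate of Eq. (3) amounts to averaging the coordinates of the anchors a node can hear. A minimal sketch, with a hypothetical function name and made-up anchor coordinates:

```python
def centroid_localization(anchors_in_range):
    """Estimate a node position as the mean of the anchor coordinates it hears."""
    n = len(anchors_in_range)
    if n == 0:
        raise ValueError("at least one anchor is required")
    x_est = sum(x for x, _ in anchors_in_range) / n
    y_est = sum(y for _, y in anchors_in_range) / n
    return x_est, y_est

# Four anchors on the corners of a square place the node at its center.
position = centroid_localization([(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)])
```

The estimate ignores how far each anchor is, so a node close to one corner is still placed at the center; this is the source of the low accuracy mentioned above.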

3.2.1.1. Weighted centroid localization

Jan Blumenthal et al. [14] proposed a mathematical model for improving and optimizing node localization. First, beacons send a message containing their position to all nodes in the network. Each node then estimates its position by centroid determination. Weighted Centroid Localization (WCL) uses weights to improve the calculation, where the weight is a function of the distance and of the characteristics of the sensor node's receiver. WCL is a simple algorithm that needs no additional hardware, but it depends on the communication range of the nodes, and achieving the optimal range is unrealistic.
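A weighted centroid can be sketched as follows. The exact weight function is not given in the text; the inverse-distance weight with a tunable exponent used here is an assumption chosen for illustration, and the function and parameter names are hypothetical.

```python
def weighted_centroid(beacons, distances, degree=1.0):
    """Weighted centroid of beacon positions.

    Assumed weight: w_i = 1 / d_i ** degree, so closer beacons pull the
    estimate toward themselves. `degree` is an illustrative tuning knob.
    """
    if not beacons or len(beacons) != len(distances):
        raise ValueError("need one distance per beacon")
    if any(d <= 0 for d in distances):
        raise ValueError("distances must be positive")
    weights = [1.0 / (d ** degree) for d in distances]
    total = sum(weights)
    x = sum(w * bx for w, (bx, _) in zip(weights, beacons)) / total
    y = sum(w * by for w, (_, by) in zip(weights, beacons)) / total
    return x, y

# The beacon at distance 1 weighs three times as much as the one at distance 3.
estimate = weighted_centroid([(0.0, 0.0), (10.0, 0.0)], [1.0, 3.0])
```

With equal distances all weights coincide and the result reduces to the plain centroid.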

3.2.2. Approximate point-in-triangulation test

The approximate point-in-triangulation test (APIT) is an area-based, range-free localization scheme. Position estimation in this algorithm depends on dividing the network into triangular regions between anchor nodes. An unknown node chooses three anchor nodes from which it has received a message and tests whether it is inside or outside the triangle they form. This process is repeated with different sets of three anchors until all possible anchor triangles are formed or the desired accuracy is achieved; the centroid of the intersection of all triangles containing the node is then calculated [15]. APIT outperforms the other range-free algorithms when applied to an irregular sensing shape with random node placement and when low communication overhead is desired. However, it requires a large number of anchors [5].
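The inside/outside decision can be illustrated with the exact geometric point-in-triangle test. A real node does not know its own coordinates, so APIT has to approximate this decision from information exchanged with neighbors; the sketch below shows only the underlying geometric test on a known point, using the signs of three cross products. The function names are hypothetical.

```python
def _cross(o, a, b):
    """Z-component of the cross product of vectors o->a and o->b."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def point_in_triangle(p, a, b, c):
    """Return True if point p lies inside or on the triangle (a, b, c).

    p is inside when it is on the same side of all three edges, that is,
    when the three cross products do not have mixed signs.
    """
    d1 = _cross(a, b, p)
    d2 = _cross(b, c, p)
    d3 = _cross(c, a, p)
    has_negative = d1 < 0 or d2 < 0 or d3 < 0
    has_positive = d1 > 0 or d2 > 0 or d3 > 0
    return not (has_negative and has_positive)

anchors = ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0))
inside = point_in_triangle((1.0, 1.0), *anchors)
outside = point_in_triangle((5.0, 5.0), *anchors)
```

Repeating the test over many anchor triples and intersecting the triangles that pass narrows the area in which the node can lie.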

3.2.3. Distance vector hop scheme

According to [16], distance vector-hop (DV-Hop) resembles a traditional distance-vector routing protocol: it counts the number of hops to determine the path from node to node. In the first step of the algorithm, anchor nodes broadcast a message with an initialized hop count to their neighbor nodes; each node that receives the message increments the counter. Messages with a higher counter are usually ignored. At the end of this step, each node in the network holds the minimum number of hops to each anchor node, and each anchor node can estimate an average size for one hop. Nodes receive the hop size and multiply it by the hop count to derive the physical distance to the anchor.

$$HopSize_i = \frac{\sum_{j \neq i}\sqrt{(x_i - x_j)^2 + (y_i - y_j)^2}}{\sum_{j \neq i} h_{ij}} \tag{4}$$

where $(x_i, y_i)$ and $(x_j, y_j)$ are the coordinates of anchor $i$ and anchor $j$, and $h_{ij}$ is the number of hops between anchor $i$ and anchor $j$.

DV-Hop is a simple and inexpensive localization algorithm. However, because of its low accuracy, improving its efficiency remains an active research topic [17], [18], [19].
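The hop-size step can be sketched as below, assuming the usual DV-Hop definition in which an anchor divides the sum of its straight-line distances to the other anchors by the sum of the corresponding hop counts. The data layout (dictionaries keyed by anchor id) and the function names are hypothetical.

```python
import math

def hop_size(anchor_id, anchors, hops):
    """Average length of one hop as seen by one anchor.

    anchors: dict mapping anchor id -> (x, y)
    hops:    dict mapping (i, j) -> minimum hop count between anchors i and j
    """
    xi, yi = anchors[anchor_id]
    total_distance = 0.0
    total_hops = 0
    for j, (xj, yj) in anchors.items():
        if j == anchor_id:
            continue
        total_distance += math.hypot(xi - xj, yi - yj)
        total_hops += hops[(anchor_id, j)]
    if total_hops == 0:
        raise ValueError("no hop counts to other anchors")
    return total_distance / total_hops

def estimate_distance(hop_size_value, hop_count):
    """Distance from an unknown node to an anchor: hop size times hop count."""
    return hop_size_value * hop_count

anchors = {"A": (0.0, 0.0), "B": (30.0, 0.0), "C": (0.0, 40.0)}
hops = {("A", "B"): 3, ("A", "C"): 4}
size_a = hop_size("A", anchors, hops)            # (30 + 40) / (3 + 4)
distance_to_a = estimate_distance(size_a, 2)     # node two hops from A
```

The estimate is only as good as the assumption that every hop covers the same distance, which fails on curved or sparse paths and explains the low accuracy noted above.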

3.2.4. Amorphous

The Amorphous algorithm is similar to DV-Hop; however, it takes network density into account in the calculation and then estimates the position based on triangulation centroid determination [13], [14].

4. Testbeds

Applying localization algorithms to real wireless networks requires many sensors, which can be costly. Simulation tools and mathematical models have been good, cheap alternatives for researchers. However, their lack of accuracy and researchers' desire to apply their protocols in realistic or semi-realistic environments make testbeds another alternative. Many universities offer realistic deployments consisting of a number of sensors distributed in different locations and open to researchers, who can apply their protocols and techniques to obtain better results under real conditions. In general, testbeds provide functionality such as forming a network of a large number of wireless sensors distributed in different locations, allowing users remote access to manage nodes, allowing users to submit an algorithm and run it on selected nodes, and providing readable results.

For example, SensLAB is a large-scale wireless sensor network project that enables researchers to test their applications freely. IoT-LAB testbeds are located at six different sites across France and give access to 2728 wireless sensor nodes [20].

WISEBED is a joint project of nine European universities that provides a heterogeneous, large-scale wireless sensor network for research purposes. Its variety of hardware, software, algorithms, and data forms a good environment for researchers to test and develop their algorithms under different quality conditions and in dynamic locations [21].

MoteLab is a public, web-based, open-source project implemented with Apache and PHP and developed by the Electrical and Computer Engineering department of Harvard University. Authorized users can upload their executable files and run them on the motes (nodes) they have previously selected [22], [23].

CitySense is a network of sensors distributed over buildings and streetlights in Cambridge, Massachusetts, USA. It consists of embedded Linux PCs with 802.11a/b/g radios [24].

Collected datasets: an alternative to real-world experiments is to use data collected from real nodes, such as Intel Lab Data [25] and CRAWDAD [26].

5. Key performance indicators of localization techniques

The localization problem has attracted considerable research in recent years, and different algorithms have been applied to solve it. To validate a new algorithm, researchers need to evaluate its performance against the state of the art. Moreover, evaluating different algorithms according to standard criteria helps researchers and users choose the algorithm that meets the needs and constraints of the environment where the sensors are deployed. The metrics discussed below help to distinguish between these algorithms and to identify the best one for each application [6], [7], [8], [9], [10], [11], [12], [13].

5.1. Accuracy

The main purpose of localization algorithms is to determine the position of nodes. The closer the estimated locations are to the real locations, the more successful and effective the algorithm is considered. This is known as localization accuracy.

The accuracy of an algorithm is defined as in Eq. (2). However, accuracy should also take the number of nodes into account, so the average localization error is used to estimate the accuracy level of the applied algorithm. It is calculated as follows:

$$Err_{avg} = \frac{1}{N}\sum_{i=1}^{N}\sqrt{(x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2} \tag{5}$$

where $(x_i, y_i)$ are the real coordinates of node $i$, $(\hat{x}_i, \hat{y}_i)$ are its estimated coordinates, and $N$ is the number of unknown nodes.
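As a small worked example, the average localization error can be computed as the mean Euclidean distance between real and estimated positions. This sketch assumes the unnormalized form of the metric (some works divide by the communication range); the function name and the sample coordinates are hypothetical.

```python
import math

def average_localization_error(real, estimated):
    """Mean Euclidean distance between real and estimated node positions."""
    if not real or len(real) != len(estimated):
        raise ValueError("need two non-empty lists of equal length")
    total = sum(math.hypot(x - xe, y - ye)
                for (x, y), (xe, ye) in zip(real, estimated))
    return total / len(real)

real_positions = [(0.0, 0.0), (10.0, 10.0)]
estimated_positions = [(3.0, 4.0), (10.0, 10.0)]
# First node is off by 5, second is exact, so the average is 2.5.
error = average_localization_error(real_positions, estimated_positions)
```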

5.2. Scalability and autonomy

Scalability describes the ability to expand the network and increase the number of nodes, which has become a permanent requirement and is considered an important characteristic when evaluating any system. Autonomy is related to scalability: it evaluates the ability of such a continuously growing system to operate automatically, without human intervention and without dependence on a central point.

5.3. Cost

Cost may be evaluated in many respects, such as the time spent in installation and localization, money, hardware, energy, communication overhead, the number of required beacons, or computational effort. The main target of any algorithm is to minimize cost and maximize accuracy. In real applications, researchers and users are constrained by the tradeoff between cost and accuracy, as the following examples show:

Anchor-to-node ratio: increasing the number of beacons in the network improves localization accuracy; however, using GPS to predefine beacon positions is expensive and consumes energy.

Communication overhead: communication is known to be the most power-consuming activity in the network. Minimizing communication overhead saves energy; however, scaling the network affects the overall communication overhead.

Convergence time: the time taken to localize each node in the network. It is affected by the network size and by the network's ability to expand.

5.4. Coverage

Many algorithms depend on the number of neighbor nodes and on network coverage to estimate the position of a specific node. Network density and the ability of nodes to connect to each other also influence how algorithms compare. To evaluate a localization algorithm with respect to coverage, it should be tested with different numbers of beacons, different network sizes, and different communication ranges.

5.5. Power consumption

Power consumption is one of the general challenges of WSNs, and much research addresses how to manage it. Managing power usage increases network lifetime, and power usage is a measure of the efficiency of a localization technique. The average setup energy is used as a metric to estimate the power consumed in the localization process, and it can be calculated as in Eq. (6).

$$E_{avg} = \frac{1}{N}\sum_{i=1}^{N}\left(E_i^{init} - E_i^{rem}\right) \tag{6}$$

where $E_i^{init}$ is the initial available energy on node $i$, $E_i^{rem}$ is the remaining energy on node $i$ after running the algorithm, and $N$ is the number of unknown nodes.
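The metric of Eq. (6) is a plain average of per-node energy drops. A short sketch, assuming the energy values are given in a common unit such as joules; the function name and sample numbers are hypothetical.

```python
def average_setup_energy(initial, remaining):
    """Average energy spent per node during localization setup.

    initial[i]   : energy available on node i before the algorithm runs
    remaining[i] : energy left on node i after the algorithm has run
    """
    if not initial or len(initial) != len(remaining):
        raise ValueError("need two non-empty lists of equal length")
    return sum(e0 - er for e0, er in zip(initial, remaining)) / len(initial)

# Two nodes spend 10 and 20 units, so the average is 15.
energy = average_setup_energy([100.0, 100.0], [90.0, 80.0])
```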

Based on these evaluation metrics, Table 1 summarizes a comparison between Range Based (RB) and Range Free (RF) localization techniques.

Table 1. Comparisons between Range Based (RB) and Range Free (RF) localization techniques.

| Algorithm type | Algorithm | Accuracy | Scalability | Cost | Power consumption |
|---|---|---|---|---|---|
| RB | AOA | High | Difficult | High | High |
| RB | TDOA | High | Difficult | High | High |
| RB | RSSI | High | Difficult | Cheapest | High |
| RF | Centroid | Low | Easy | Low | Low |
| RF | APIT | Good | Easy | Low | High |
| RF | DV-Hop | Low | Easy | Low | High |
| RF | Amorphous | Low | Easy | Low | High |

6. Optimization based on artificial intelligence

The lack of accuracy in distance measurements has motivated most researchers to propose intelligent methods for localization. Searching for the optimum solution or the best path is the distinguishing feature of the Artificial Intelligence (AI) algorithms that have been proposed for accurate localization of nodes in the network.

6.1. Swarm intelligence

Swarm intelligence (SI) is a form of evolutionary computation, which is itself a form of stochastic optimization search. Most studies [27], [28], [29] define SI as the collective behavior of decentralized, self-organized systems. It simulates the behavior of bird flocks, fish schools, ant colonies, or bacterial growth. The most important approaches are ant colony optimization (ACO), which is based on ant colonies, and particle swarm optimization (PSO), which is based on bird flocking. SI works in two phases, coarse and fine. In the coarse phase, unknown nodes roughly estimate their position over a number of iterations based on the anchor nodes; in the fine phase, these estimates are optimized and improved. The steps of estimating distance and then positioning nodes are repeated until a minimum fitness value is reached and a better position for the node is found.

6.1.1. Particle Swarm Optimization

Particle Swarm Optimization (PSO) is a population-based, stochastic optimization algorithm that iterates toward an optimum solution. It assumes a population of particles that move through the search space looking for the best solution. Applying PSO to localization maps the solution space of the optimizer to the physical space in which the positions of unknown nodes are sought. The objective function is formed from information gathered from anchor nodes and from distance measurements. In each iteration, updating the velocity and position of each particle improves the solution, which converges to the best position for the unknown node.

Dama et al. [28] described localization based on a single stage and proposed a multi-stage localization algorithm based on PSO to overcome the problem of localizing unknown nodes that have few neighboring anchor nodes. Unknown nodes localized in one stage serve as anchor nodes for the unknown nodes of the following stages. Solving the localization problem with PSO by taking only the space distance constraint as the fitness function and adopting the global optimum as the final location is not realistic: in practice, because of ranging errors, the space distance constraint is considered but the geometric topology constraint is not. To achieve both goals, Ziwen et al. [29] proposed a multi-objective PSO algorithm with two objective functions, one the traditional optimization of real versus measured position and the other for the network topology. Another improvement to PSO [30] uses the Min-Max method to determine the bounds of the area surrounding each unknown node. These bounds are then used in the PSO objective function and improve the convergence of the swarm toward the solution of the localization problem. Combining the two methods exploits the low computational cost of the Min-Max method, reduces its error ratio by using PSO to optimize the results, and requires fewer anchor nodes.
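A basic single-objective PSO for localizing one node can be sketched as follows. It is a generic textbook PSO, not the algorithm of [28], [29], or [30]: the fitness is assumed to be the mean squared gap between the particle-to-anchor distances and the measured ranges, the search is confined to a rectangular `bounds` box (which could come from a Min-Max step), and the inertia and acceleration values are common illustrative defaults. All names are hypothetical.

```python
import math
import random

def range_fitness(pos, anchors, distances):
    """Mean squared gap between distances from `pos` and the measured ranges."""
    x, y = pos
    return sum((math.hypot(x - ax, y - ay) - d) ** 2
               for (ax, ay), d in zip(anchors, distances)) / len(anchors)

def pso_localize(anchors, distances, bounds, n_particles=30, n_iter=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Return (best_position, best_fitness) found by a basic global-best PSO."""
    rng = random.Random(seed)
    lows = (bounds[0][0], bounds[1][0])
    highs = (bounds[0][1], bounds[1][1])
    pos = [[rng.uniform(lows[k], highs[k]) for k in range(2)]
           for _ in range(n_particles)]
    vel = [[0.0, 0.0] for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_fit = [range_fitness(p, anchors, distances) for p in pos]
    g = min(range(n_particles), key=pbest_fit.__getitem__)
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]
    for _ in range(n_iter):
        for i in range(n_particles):
            for k in range(2):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull to own best + pull to swarm best.
                vel[i][k] = (w * vel[i][k]
                             + c1 * r1 * (pbest[i][k] - pos[i][k])
                             + c2 * r2 * (gbest[k] - pos[i][k]))
                # Move, then clamp the particle to the search box.
                pos[i][k] = min(max(pos[i][k] + vel[i][k], lows[k]), highs[k])
            fit = range_fitness(pos[i], anchors, distances)
            if fit < pbest_fit[i]:
                pbest[i], pbest_fit[i] = pos[i][:], fit
                if fit < gbest_fit:
                    gbest, gbest_fit = pos[i][:], fit
    return tuple(gbest), gbest_fit

anchors = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0), (0.0, 100.0)]
true_position = (30.0, 70.0)
ranges = [math.hypot(true_position[0] - ax, true_position[1] - ay)
          for ax, ay in anchors]
estimate, fitness = pso_localize(anchors, ranges, ((0.0, 100.0), (0.0, 100.0)))
```

A tighter `bounds` box shrinks the region the swarm has to explore, which is the intuition behind combining Min-Max with PSO.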

6.2. Genetic algorithms

The concept of “soft computing” is associated with fuzzy and complex systems with uncertain parameters. One of the best-known branches of soft computing is evolutionary computation, a set of methodologies and algorithms that use trial and error to find an optimum solution to complex problems. Genetic algorithms (GA) are the most frequently encountered type of evolutionary algorithm. They are search heuristics inspired by biological evolution that simulate survival of the fittest among individuals over successive generations. A GA first defines a population, or search space, and randomly selects the first generation of candidate solutions. It then applies a fitness function to measure the quality of each solution and uses crossover and mutation operators to create a new generation, which serves as the parents of the next iteration. The process repeats until the fittest solution to the problem is found.

Bo Peng et al. [31] introduced GA to solve the localization problem. After estimating distances from the minimum hop count and hop size as in the original DV-Hop, they defined a feasible region for each unknown node, generated an initial population in that region, evaluated the fitness function, and applied GA operators to improve accuracy. They used an arithmetic crossover operator that forms a linear combination of two chromosomes. The mutation operator performs a random move to a neighbor within the feasible region of the population. The simulation results showed that their method improved location accuracy compared with the original DV-Hop algorithm and with the GA-based method proposed in [32], without any additional hardware support. Improvements to DV-Hop are still under research: in [33], localization error was decreased by improving the Shuffled Frog Leaping Algorithm (SFLA) approach used in [34], keeping SFLA in the second step of localization and replacing the PSO used in the third step with a hybrid GA-PSO.
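The two operators described above can be sketched in a small GA loop. This is a generic illustration and not the implementation of [31]: the fitness (sum of squared range residuals), truncation selection, elitism, mutation step, and all parameter values and names are assumptions made for the example.

```python
import math
import random

def fitness(ind, anchors, distances):
    """Assumed fitness: sum of squared range residuals (lower is better)."""
    x, y = ind
    return sum((math.hypot(x - ax, y - ay) - d) ** 2
               for (ax, ay), d in zip(anchors, distances))

def arithmetic_crossover(p1, p2, rng):
    """Child is a random linear combination of the two parent chromosomes."""
    a = rng.random()
    return (a * p1[0] + (1 - a) * p2[0], a * p1[1] + (1 - a) * p2[1])

def mutate(ind, region, step, rng):
    """Random move to a nearby point, clamped to the feasible region."""
    (xmin, xmax), (ymin, ymax) = region
    x = min(max(ind[0] + rng.uniform(-step, step), xmin), xmax)
    y = min(max(ind[1] + rng.uniform(-step, step), ymin), ymax)
    return (x, y)

def ga_localize(anchors, distances, region, pop_size=40, generations=100,
                mutation_rate=0.2, step=2.0, seed=0):
    rng = random.Random(seed)
    (xmin, xmax), (ymin, ymax) = region
    pop = [(rng.uniform(xmin, xmax), rng.uniform(ymin, ymax))
           for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda p: fitness(p, anchors, distances))
        parents = pop[:pop_size // 2]      # keep the fitter half as parents
        children = [pop[0]]                # elitism: carry over the best
        while len(children) < pop_size:
            p1, p2 = rng.sample(parents, 2)
            child = arithmetic_crossover(p1, p2, rng)
            if rng.random() < mutation_rate:
                child = mutate(child, region, step, rng)
            children.append(child)
        pop = children
    return min(pop, key=lambda p: fitness(p, anchors, distances))

anchors = [(0.0, 0.0), (100.0, 0.0), (0.0, 100.0)]
ranges = [math.hypot(30.0 - ax, 70.0 - ay) for ax, ay in anchors]
region = ((0.0, 100.0), (0.0, 100.0))
best = ga_localize(anchors, ranges, region)
```

Arithmetic crossover keeps every child between its parents, so the search contracts toward good regions, while mutation reintroduces small random moves that stop the population from collapsing too early.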

6.3. Fuzzy logic

Fuzzy logic (FL) is a multi-valued logic in which a statement is not restricted to being absolutely true or false but may hold to a degree. It is suited to uncertain or approximate reasoning: in FL, everything is a matter of degree, or membership. FL simulates the ability of the human mind to reason, receiving a huge amount of information while discarding most of it and concentrating on the information most relevant to the desired task [35], [36], [37], [38]. Because it is cheap, fast, flexible, easy to understand, and able to match any set of input-output data, fuzzy-logic-based algorithms were recently proposed in [39] to reduce the localization errors of traditional Received Signal Strength (RSS) algorithms, as described in Fig. 4.

Fatma Kiraz et al. [40] explored the fuzzy ring overlapping range free (FRORF) localization technique, which overcame the uncertainty issues of RSS but suffered from non-convexity (multiple stable local minima). They developed a methodology that builds on the FRORF framework and generates convex fuzzy sets based on the radical axis notion, which resulted in significantly better performance and accuracy than FRORF.

Noura Baccar et al. [41], in their review of Fuzzy Logic Type 1 (FLT1) for optimizing RSSI localization, stated that FLT1 achieved a localization accuracy of 95% at zone level but not the same accuracy at room level. They proposed applying Fuzzy Logic Type 2 (FLT2), which showed high accuracy in problems where exact membership degrees are difficult to determine. In the first stage of their method, inaccurate RSS measurements are optimized by an interval type-2 fuzzy logic algorithm and the linguistic rules are built. In the online phase, RSSI values are fuzzified using type 2, aggregated through the inference engine, and then type-reduced to fuzzy type 1. Finally, defuzzification is performed to estimate the locations of the nodes. The type-2 algorithm showed better accuracy than type 1 at both room and zone levels, with lower uncertainty.

Fig. 4. Fuzzy logic algorithm proposed by [39].

6.4. Neural networks

Neural Networks (NN) mimic biological nervous systems and are represented as a network of interconnected nodes, called “neurons”, with activation functions. An NN is usually used to process a large number of unknown inputs. It operates in two phases, learning and then testing. Errors in the system can be corrected by feeding the output of one phase back into the next [42].

Ali Shareef et al. [43] proposed an NN-based technique to estimate the position of a mobile node. They placed three beacon nodes in a triangle and used RSS to measure the distance between the mobile node and each beacon node on the floor. Weights were obtained after training different classes of NN: Multi-Layer Perceptron (MLP), Radial Basis Function (RBF), and Recurrent Neural Network (RNN). The authors compared two traditional variants of the Kalman filter with the three NN algorithms and concluded that NNs are generally more accurate than the Kalman methods. Although MLP was less accurate than RBF, it was considered the best choice when computational and memory requirements are taken into account.

Nazish et al. [44] developed an MLP neural network trained with Bayesian Regularization and Gradient Descent algorithms on two inputs, RSSI and Link Quality Indicator (LQI), to estimate the position of mobile nodes. Their model uses LQI, which correlates well with distance, as a second input to compensate for poor RSSI measurements and improve the position estimate. They overcame the problems of convergence and stability by offline training and achieved a position accuracy of 1.33 m. However, variation in RSSI measurements in indoor environments can affect the accuracy of algorithms that depend on offline training. Moreover, training the MLP takes considerable time and places a load on the sensors' processors.

Shaifur et al. [45] proposed a two-stage model based on the Generalized Regression Neural Network (GRNN) and weighted centroid localization to estimate node positions. In the first stage, RSSI measurements from beacon nodes are used to train two GRNNs, one for X coordinates and the other for Y coordinates, which yield approximate node positions. In the second stage, WCL determines the final position using the neighboring beacon nodes. To avoid the inaccuracy of the offline training applied in the previous algorithms, the GRNNs are trained in real time. The authors also used GRNN instead of MLP so that online training consumes less time and less sensor power.

Li et al. [46] formed a wireless network by connecting smartphones with phones that lack GPS through Bluetooth. They formulated a constraint equation that bounds the maximum distance between two phones by the Bluetooth signal strength. A second equation assumes maximum entropy in how the phones are distributed in space. Solving the two equations with a Recurrent Neural Network (RNN) yields the location of each phone. Their model reduces the number of beacons required by previous methods and also reduces the communication burden, which makes the network scalable in both indoor and outdoor environments.

Ashish Payal et al. [47] showed that localization of unknown nodes can be optimized by tuning and optimizing hardware circuits, evaluating the performance of thirteen training algorithms for feed-forward artificial neural networks (FFANNs). They concluded that the Bayesian regularization and Levenberg-Marquardt algorithms gave the most accurate results.

6.5. Deep learning

Deep Learning (DL) is a promising machine learning technique concerned with representing and training on data. It is already used in many fields, such as computer vision, speech recognition, and bioinformatics, and many recent studies apply it to node localization.

Zhang et al. [48] built a positioning system based on a Deep Neural Network, as described in Fig. 5. They developed a four-layer deep NN that extracts features from RSS measurements and builds a fingerprint database in the offline stage, then gives a coarse estimate of the position in the online stage. The results are refined using a Hidden Markov Model (HMM) based on sequential information. Combining offline and online stages solved the problem of RSSI variation caused by signal fluctuation over time and by obstacles. The ability to extract reliable features from a massive fingerprint database gives the DL approach an advantage over shallow learning algorithms, which are limited in their modeling and representational power when handling massive amounts of training data.

Fig. 5. Deep neural network model.

Phoemphon et al. [49] integrated Fuzzy Logic with an extreme learning machine (ELM) to achieve high accuracy and to overcome the node density and signal coverage problems that affect the efficiency of all localization algorithms. In their model, the signal is normalized during the fuzzy process and the error approximation is adjusted by applying the deep learning concept. The model is promising: it achieved higher accuracy than the other soft computing algorithms and exploited the advantage of each algorithm in its own regime, since FL is more accurate at low density and network coverage while ELM is more accurate in the opposite case. However, the authors believe that more analysis under realistic conditions is needed as future work.

7. Future research directions

The survey above suggests the following directions for future work. Regarding accuracy, the error rate of traditional methods is high and needs to be reduced. Minimizing power consumption requires algorithms designed to work efficiently and save energy in wireless sensor networks.

Complex techniques may require additional hardware, which increases cost. Many methods need heavy processing to determine the reference nodes, which also means high cost. Most algorithms are applied in 2-D space, so implementing algorithms in 3-D space remains a good topic for future research.

In most realistic WSN applications, sensors are randomly scattered in the target environment, such as a volcano, so the network is not uniformly distributed, which causes a notable deviation between the real distance and the calculated Euclidean distance. Locating nodes in irregularly shaped networks, such as C-shaped, S-shaped, L-shaped, and O-shaped networks, with an acceptable level of accuracy is therefore a challenge for localization algorithms.

Because of the network coverage area and the movement of nodes, it is hard to implement robust methods for positioning mobile nodes. Node mobility improves connectivity; however, guaranteeing coverage of mobile nodes at all times is an issue for localization algorithms.

Modeling the obstacles that exist in the real field is a stumbling block to finding the best coverage paths and needs more research.

Another open issue in localization is security: wireless nodes may be attacked to obtain position messages or to intercept information sent from one node to another, so security should be considered in proposed algorithms.

8. Conclusion

Wireless sensor networks have been an attractive research area in recent years and are expected to remain so because of their wide applications in daily life. Localization is one of the challenges researchers face in extending the use of wireless sensors, owing to the high cost and indoor limitations of the Global Positioning System (GPS). Over the years, many algorithms have been applied to replace GPS, relying either on point-to-point distance (range-based algorithms) or on network information (range-free algorithms). Both have reasonable cost but still suffer from network coverage problems and need better power management. The lack of accuracy of traditional algorithms and the massive development in optimization techniques have motivated researchers to seek an accurate solution to the localization problem using soft computing techniques. This paper gives an overview of wireless sensor network applications and challenges and focuses on the localization problem. It classifies the algorithms used to solve the problem and identifies their open issues, which represent promising as well as challenging areas for future work. It also introduces recent soft computing techniques used to improve accuracy, the main issue in localization. Localization remains a promising area to research, develop, and optimize.