1. Introduction

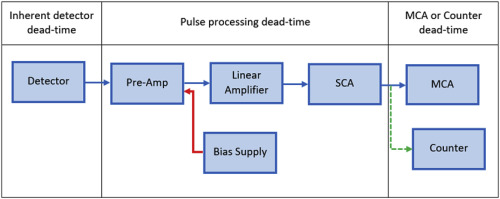

Pulse counting is a random process and is unavoidably affected by losses. In nearly all detector systems, a minimum amount of time must separate two events for them to be recorded as two separate, independent events. In some cases the limiting time is determined by processes in the detector itself, but in most cases the limit arises from the associated electronics. The minimum time of separation for proper detection is usually called the deadtime (or resolving time) of the counting system [1]. The total deadtime of a detection system is the aggregate of the intrinsic detector deadtime (e.g., the drift time in a gas detector), the analog front-end losses (e.g., the shaping time of a spectroscopy amplifier), and the data acquisition deadtime (e.g., the conversion time of the analog-to-digital converter (ADC), or the readout and storage times). Thus, correction is needed at three levels: first, for the internal losses inherent in the detector itself; second, for the losses generated by the system circuitry; and lastly, for the multichannel analyzer, i.e., the analog-to-digital conversion and storage deadtime. It is possible to correct for counting losses only if both the nature of the originating process and the effects of deadtime are clearly understood. A typical pulse counting system is shown in Fig. 1, which also gives an overview of the deadtime associated with the various units. In most detectors, a small pulse lasting only a fraction of a microsecond is generated, which is not strong enough to be processed directly. To preserve the information carried by these individual pulses, they are first processed by a preamplifier, which adds a relatively long tail (tens of microseconds) to the original pulse.
It is important to point out that all the information pertaining to the timing and the amplitude of the original pulse is contained in the leading edge of the tailed pulse, which is then carried to an amplifier where it is amplified and shaped. The charge collection time of the detector determines the rise time of the tailed pulse produced by the preamplifier. The amplifier's shaping of the pulse plays a critical role in preserving the spectroscopic and timing (or count rate) information. A compromise between preserving the rate and the pulse height information is generally needed for any high count rate application.

Fig. 1. Sources of deadtime in a typical detection system.

To avoid ballistic deficit (the reduction in pulse amplitude that occurs when the slow component of the ions does not contribute to the pulse in the pulse shaping stage), the shaping time constant of the amplified pulse must be significantly larger than the rise time of the tailed pulse; otherwise the amplitude information of the shaped pulse will be compromised. Ballistic deficit is not a major concern as long as the charge collection time (and consequently the preamplifier's pulse rise time) is constant for all pulses. However, in most cases the charge collection time depends on the location of the initial interaction of the radiation within the detector. This leads to variable ballistic deficit and energy resolution degradation. On the other hand, if the pulse shaping time is too long, the amplified pulse will carry either a positive tail or a negative undershoot. Both of these lead to pile-up and energy resolution degradation. The other situation where pile-up becomes an issue is when pulses with a flat top arrive too close to each other, producing a combined pulse of summed amplitude. This situation is referred to as peak pile-up (Fig. 2). The basic difference between deadtime and pile-up is that in pile-up a summed pulse is produced when two pulses combine, leading to energy resolution degradation as well as count rate loss, whereas in the case of deadtime the second pulse is lost without any energy degradation of the first pulse. In the literature these two problems are often not presented precisely, which leaves readers confused.

Fig. 2. Pulse pile-up leading to addition of high energy wings to the spectroscopy peak due to pulse superposition.

In most high count rate situations both deadtime and pile-up problems are possible. Evans [2] pointed out that every detection instrument used for counting exhibits a characteristic time constant resembling a recovery time. He noted that after recording one pulse, the counter is unresponsive to successive pulses until a time interval equal to or greater than its deadtime, τ, has elapsed. He found that if the interval between two true events is shorter than the resolving time τ, only the first event is recorded. Strictly speaking, therefore, Evans was referring to the resolving time, and his discussion did not precisely represent the deadtime. Thus the detection system causes a loss of counts and distorts the energy resolution. Evans very elegantly explains that radiation counter systems do not actually count the nuclear events but the intervals between such events. This is a unique way of looking at the counting process in a detection system.

Fig. 1 shows a typical counting system in which each unit can add some deadtime and so contribute to the overall deadtime of the system, leading to count loss. Identifying the contribution of each pulse processing unit to the overall deadtime helps in recognizing the bottlenecks of the counting system and in devising measures to correct the count losses accordingly. The relative contribution of each component can vary significantly depending on the component design and operating conditions.

The detector is the first unit in the counting system. As discussed in Section 2, a wide variation in detector deadtime is observed depending on the choice of detector. For Geiger-Müller (GM) counters, the detector deadtime contribution is perhaps the most significant within the entire counting system. Detector pulses are only a fraction of a microsecond wide. However, at extremely high count rates (exceeding a million counts per second) these pulses may ride on each other and lead to the pile-up problem. In most cases, however, deadtime is the primary concern arising from a detector. In some cases the detector is able to produce pulses at a much faster rate than the subsequent instrumentation can process, and in such cases the circuitry and pulse processing instrumentation determine the overall deadtime of the system.

The next unit in the counting system is the preamplifier, which is used to provide optimized coupling and electronic matching between the detector and the amplifier. Its main purpose, however, is to maximize the signal-to-noise ratio. The pulses from a preamplifier are long tail pulses with a very short rise time and a fall time of tens of microseconds. These tails are reshaped in the shaping stage of the pulse processing, that is, in the amplifier; hence the problem of pile-up introduced in the preamplifier is removed. However, at very high count rates some piled-up pulses may reach the preamplifier saturation limit. This situation results in the degradation of energy resolution.

The amplifier, which is the next component in pulse processing, is perhaps the most important component in the counting system. Its primary function is to shape the tail pulse coming from the preamplifier and further amplify it, as required. The tail pulses are converted to linear amplified pulses within the range expected by the subsequent units in the counting system, usually between 0 and 10 V. As discussed earlier, the shape of the amplified pulse plays a critical role in minimizing pile-up and ballistic deficit, and thus in preserving the energy resolution as well as the count rate information. In addition to pile-up, deadtime is also associated with the amplified pulse; it is of the same order as the width of the shaped pulse, that is, only a few microseconds. It is important to point out that a compromise must invariably be made between preserving the energy resolution of the pulses and preserving the count rate information under high count rate conditions.

The SCA (Single Channel Analyzer), when used, is not a major contributor to the deadtime of the counting system. A total of 1–2 μs of deadtime is added by its operation. The counter is the other unit in the counting chain, and modern counters have an even smaller deadtime associated with them. For example, the ORTEC 994 counter [3] has a reported deadtime for paired pulses of 10–40 ns. Hence the deadtime contribution from the counter is not significant.

The MCA (Multi Channel Analyzer) is the next major contributor to the deadtime of the counting system. Depending on their design, MCAs can produce pile-up and/or deadtime problems. The main contributor to MCA deadtime is the ADC (Analog to Digital Converter). The deadtime of a Wilkinson type ADC is linearly dependent on the pulse height. Most modern MCAs can automatically correct for this deadtime by counting for a longer clock (real) time until the preset live time has been accumulated. It is, however, important to point out that the automatic correction at this level is limited to the deadtime associated with the MCA itself. MCA electronics is not capable of de-convoluting two or more piled-up pulses or of correcting for the deadtime originating in the detector.
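The live-time correction described above can be illustrated with a minimal sketch. The function name and the numerical values are hypothetical, and the sketch assumes that the MCA reports both the real (clock) time and the live time of the acquisition. It corrects only for the MCA deadtime, not for losses in the detector or for pile-up.

```python
def live_time_corrected_rate(counts, real_time_s, live_time_s):
    """Return (count rate per live second, fractional MCA deadtime).

    Hypothetical helper: assumes the MCA reports real and live time.
    """
    if not 0.0 < live_time_s <= real_time_s:
        raise ValueError("live time must be positive and not exceed real time")
    dead_fraction = 1.0 - live_time_s / real_time_s
    return counts / live_time_s, dead_fraction


# Illustrative numbers only: 90,000 counts in 100 s of real time,
# of which the MCA was live for 90 s.
rate, dead_fraction = live_time_corrected_rate(90_000, 100.0, 90.0)
print(f"corrected rate = {rate:.1f} counts/s, MCA deadtime = {dead_fraction:.1%}")
```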

Muller [4] has compiled a very comprehensive bibliography on the deadtime of radiation measurement systems, covering almost all significant contributions to the area from 1913 to 1981. He listed the articles in this area in chronological order of appearance and also according to subject area. The present work differs in its approach, as the main goal of this paper is to gather some of the major findings on deadtime and pile-up behavior, including common modeling methods and measurement techniques, for the interested user. This article is by no means an exhaustive bibliography of all contributions in the area of detector deadtime and pile-up associated with measurement systems. Rather, the authors have tried to present the most prevalent and useful methods in this area.

In the next section, the physical phenomenon responsible for deadtime in various detectors is discussed, followed by major mathematical models for describing system deadtime in Section 3. Section 4 reviews the common methods for measuring the system's deadtime followed by a section devoted to deadtime and pile-up measurement of electronic instrumentation.

2. Physical phenomenon of deadtime

Detector design, geometry and material can significantly affect deadtime and pulse pile-up. In addition, the operating conditions, including the high voltage applied to a gas filled or semiconductor detector and the operating temperature and pressure, play a significant role in detector deadtime [5], [6]. This section discusses the physical nature of deadtime and pile-up in the major radiation detector types. It is important for end-users to understand the physics of the phenomenon in order to reduce the effect of deadtime effectively and to correct for it.

2.1. Gas filled detectors (Geiger Muller and proportional counters)

In a gas filled detector, when an electron-ion pair is produced by radiation (say, in a G-M tube), the electrons are accelerated toward the anode, creating a cascade of secondary ionization known as a Townsend avalanche [1]. In a G-M counter this avalanche propagates along the anode wire at a rate of approximately 2–4 cm/μs [7] and eventually envelops the entire anode. Collection of all the negative charge results in the formation of the initial pulse, which lasts for a few microseconds. However, the exact duration of the pulse depends on the geometric dimensions of the counter, the location of the initial ionization, and the operating voltage, temperature and gas pressure [5]. One would also expect the inherent nature of the fill gas (work function) to affect the charge collection time. A G-M counter does not provide any spectroscopic information; therefore one is not concerned about pile-up, that is, another event taking place during the charge collection time and degrading the pulse height resolution. In theory, all pulses from a G-M counter are of the same amplitude irrespective of the energy of the radiation initiating them.

Although electrons are collected at the anode rather quickly, positive ions linger around the anode because of their low mobility before being collected at the cathode. The presence of this positive charge severely distorts the electric field. Any subsequent event during the time when the electric field is distorted will either produce no pulse at all or produce a pulse with reduced amplitude, which may or may not be detected by the subsequent counting system. Therefore G-M counters are prone to deadtime count losses. The duration of the deadtime again depends on the detector geometry, the fill gas properties and the operating conditions of pressure, temperature and, most importantly, the applied voltage [5].

Fig. 3 illustrates the deadtime, resolving time and recovery time of a G-M tube. These three terms are unfortunately used interchangeably, causing some confusion for readers. As discussed earlier, the positive ions slowly drift toward the cathode; consequently the space charge becomes dilute. There is a minimum electric field necessary to collect the negative charge and produce any pulse in the tube. By strict definition, deadtime is the time required for the electric field to recover to a level such that a second pulse of any size can be produced. Just after the deadtime, the electric field gradually recovers; during this time the amplitude of a second pulse is reduced by the presence of the lingering positive charge.

Fig. 3. (a) Typical gas-filled detector behavior with distinct regions of operation (b) Deadtime representation of a Geiger Mueller Counter.

There is a minimum amplitude that the second pulse needs in order to pass the discrimination threshold and be recorded. The time needed between the two pulses to produce a second pulse of this minimum recordable amplitude is called the resolving time of the detection system. Since the true deadtime is impossible to measure, the resolving time is often referred to as the deadtime of the G-M counter. Finally, after complete recombination of the gas in the Geiger tube, a full amplitude pulse can be produced. The minimum time required to produce a full amplitude pulse is called the recovery time of the detection system. Typically, the deadtime for a GM detector is on the order of hundreds of microseconds [1].

In proportional counters the avalanche is local, i.e., it does not engulf the entire anode wire. The production of the initial ion pairs and their subsequent multiplication is proportional to the initial energy deposited in the fill gas. Therefore the energy spectroscopy information of the interaction is preserved. However, if a second event takes place within the charge collection time of the first interaction, the second pulse will pile up on the first pulse, producing a summed pulse and degrading the energy resolution. Likewise, if the second event takes place before all positive ions are neutralized, the amplitude of the second pulse will be reduced, again leading to a degradation of the energy resolution. If the time gap between the two pulses is too small, the second pulse is lost entirely. Therefore, for a proportional counter the problem is more one of pile-up than of deadtime, but depending on the time gap between the two events both pile-up and deadtime losses are possible.

2.2. Scintillation detectors

There are two major categories of scintillators: inorganic and organic. In an inorganic scintillator, radiation perturbs the energy state of the crystal lattice and elevates an electron from the valence band into the conduction band, or into activator sites when an impurity is added to the crystal by design (which is mostly the case). The subsequent return of the electron from the excited state to the valence band produces light (photon) emission (Fig. 4). A detailed discussion of the scintillation process is beyond the scope of this review, but interested readers are referred to the literature [8], [9].

Fig. 4. Energy band structure for Scintillator crystal.

There is a finite time associated with excitation and de-excitation of the perturbed sites, and in many cases the decay time is composed of more than one component. There is also a wide range of decay times. For example, NaI(Tl), the most commonly used scintillator material, has a decay time of approximately 230 ns, whereas some fast inorganic materials, such as BaF2 with a decay time of less than a nanosecond, are also available. The presence of a secondary de-excitation path (e.g., phosphorescence) can further complicate the phenomenon, producing light yield at much longer decay times. Researchers [10], [11] have reported large variability in the performance of inorganic scintillators. In scintillators, if a second interaction occurs within the decay time of the first interaction, the light emission from the second interaction adds to the light emission of the first event and can potentially produce a summed peak. Therefore, the problem lands in the realm of pulse pile-up. However, because the decay times are comparatively short, this becomes a problem only at significantly high count rates. In organic scintillators the excitation is that of a single molecule (or, for noble gas scintillators such as Xe and Kr, a single atom), and the electron is promoted to a higher energy level. De-excitation of these electrons produces the scintillation photons that are responsible for pulse formation. Most organic scintillators have even shorter decay constants, in the range of nanoseconds (e.g., anthracene solvent has a decay time constant of only 3.68 ns [12]), and are well suited for high intensity measurements. For scintillators in general, the problem of deadtime/pile-up is not as important as for G-M counters. For scintillation detectors, material characteristics play the most critical role in detector performance. Minute amounts of impurities can drastically alter detector performance, including pile-up.
One must bear in mind that the presence of activators or waveshifters can drastically alter the deadtime behavior of any scintillation detector. Furthermore, additional deadtime or pile-up considerations are warranted in matching an appropriate photomultiplier tube (PMT) or photodiode. The light-to-pulse conversion process (by PMT or photodiode) can also add a few nanoseconds of deadtime [13]. Proper choices of PMT electronics and operating conditions are required to optimize the system for high count rate applications.

2.3. Semiconductor detectors

Semiconductor detector operation is based on the collection of charge carried by electrons and holes, which are produced by radiation interaction. A major advantage of semiconductors is their superior energy resolution, because only a few eV of deposited radiation energy are required to produce a pair of charge carriers (an electron and a hole), compared with approximately 30 eV of deposited radiation energy to produce an ion pair in a gas filled detector. This high charge carrier production, coupled with a density more than a thousand times higher than that of a gas filled detector, results in the favorable characteristics of semiconductor detectors. Unlike gas filled detectors, where only electrons contribute to the signal, in semiconductor detectors the mobility of holes is comparable to that of electrons, and hence both charge carriers contribute to pulse formation. The mobility of the electrons and holes depends on the material characteristics, the strength of the applied electric field and the operating conditions (temperature). For most cases the charge carrier mobility is on the order of $10^3$–$10^4$ cm$^2$/(V·s) [14], [15]. Therefore, for a typical semiconductor detector the charge collection time is just a fraction of a microsecond [1].
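The step from the quoted mobility range to a sub-microsecond collection time can be made explicit with a rough estimate. The sketch below assumes a uniform electric field across a planar detector and a drift velocity equal to mobility times field (no velocity saturation); the thickness and bias values are hypothetical and chosen only for illustration.

```python
def collection_time_s(thickness_cm, bias_v, mobility_cm2_per_vs):
    """Rough drift time across a planar detector.

    Assumes a uniform field E = V/d and drift velocity v = mu * E,
    so that t = d / v. Velocity saturation is ignored.
    """
    field_v_per_cm = bias_v / thickness_cm
    drift_velocity_cm_per_s = mobility_cm2_per_vs * field_v_per_cm
    return thickness_cm / drift_velocity_cm_per_s


# Hypothetical detector: 1 mm thick, 100 V bias.
for mobility in (1e3, 1e4):
    t = collection_time_s(thickness_cm=0.1, bias_v=100.0,
                          mobility_cm2_per_vs=mobility)
    print(f"mobility = {mobility:.0e} cm^2/(V s): t = {t * 1e9:.0f} ns")
```

For these illustrative values the estimate falls between roughly 10 and 100 ns, consistent with a collection time of a fraction of a microsecond.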

If a second event takes place before all the charge from the first event is collected, the charge carriers produced by the second event will be added to the pulse produced by the initial event, hence leading to the problem of pile-up. Since both the charge carriers are contributing to the formation of pulses there is no deadtime in the strict sense, and only pile-up is observed.

The shape of the pulse is dependent on the location of the initial interaction where electron-hole formation takes place, and the mobility of each charge carrier in the material at the operating voltage. Charge carrier mobility is also a strong function of detector temperature. Voltage applied to the p-n junction causes the depletion layer to grow and hence increases the active volume of the detector (Fig. 5). Therefore, detector geometry, operating voltage, and temperature all play important roles in pile-up time for a semiconductor detector.

Fig. 5. A p-n junction with reverse bias as a semiconductor detector.

3. Deadtime models

Over the last sixty years many researchers have proposed models to correct for deadtime. These models rest on the assumption that a Poisson distribution exists at the input of a detector. In one of the earliest papers on this topic, Levert and Sheen [16] demonstrated that the frequency distribution of discharges counted by a Geiger-Muller counter is not necessarily a Poisson distribution. Rather, it depends on the resolving time, which may be comparable to the observation interval.

3.1. Idealized deadtime model

Feller [17] and Evans [2] developed the two basic types of idealized deadtime models, i.e., type I (the nonparalyzable model) and type II (the paralyzable model), respectively. The paralyzable detection system is unable to provide a second output pulse unless there is a time interval of at least the resolving time τ between two successive true events. If a second event occurs before this time, the resolving time is extended by τ. Thus, the system experiences continued paralysis until an interval of at least τ lapses without a radiation event. This interval permits relaxation of the apparatus. Based on the interval distribution of radiation events, the fraction of intervals that are longer than τ is given as $e^{-n\tau}$, where n is the average number of true events per unit time. The product of this fraction and the true count rate gives the observed count rate m:

$$m = n e^{-n\tau} \tag{1}$$

The nonparalyzable, or type I, detector system is non-extending and is not affected by events that occur during its recovery time (deadtime), τ. Thus the apparatus is dead for a fixed time τ after each recorded event. If the observed counting rate is m, then the fraction of time during which the apparatus is dead is $m\tau$, and the fraction of time during which the apparatus is sensitive is $1 - m\tau$. Thus, the fraction of the true number of events that can be recorded is given as

$$\frac{m}{n} = 1 - m\tau \tag{2}$$

or

$$m = \frac{n}{1 + n\tau} \tag{3}$$
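The two idealized behaviors described above can be checked with a small event-by-event simulation. The following is a minimal sketch, assuming Poisson arrivals (exponentially distributed intervals) and a single fixed deadtime; the function name and the numerical values are hypothetical. The simulated rates are compared with the closed-form expressions $n e^{-n\tau}$ (paralyzable) and $n/(1+n\tau)$ (nonparalyzable).

```python
import math
import random


def simulate_counts(true_rate, deadtime, duration, seed=0):
    """Return (nonparalyzable rate, paralyzable rate) for Poisson arrivals."""
    rng = random.Random(seed)
    t = 0.0
    np_dead_until = 0.0  # end of the current nonparalyzable dead period
    p_dead_until = 0.0   # end of the current paralyzable dead period
    np_counts = 0
    p_counts = 0
    while True:
        t += rng.expovariate(true_rate)  # next true event
        if t > duration:
            break
        # Nonparalyzable: only recorded events start a new dead period.
        if t >= np_dead_until:
            np_counts += 1
            np_dead_until = t + deadtime
        # Paralyzable: every true event, recorded or not, extends the dead period.
        if t >= p_dead_until:
            p_counts += 1
        p_dead_until = t + deadtime
    return np_counts / duration, p_counts / duration


n, tau = 1000.0, 200e-6  # illustrative true rate (1/s) and deadtime (s)
np_rate, p_rate = simulate_counts(n, tau, duration=200.0)
print(f"nonparalyzable: simulated {np_rate:.1f}, model {n / (1 + n * tau):.1f}")
print(f"paralyzable:    simulated {p_rate:.1f}, model {n * math.exp(-n * tau):.1f}")
```

The simulated values agree with the models only within counting statistics, so a longer simulated duration narrows the difference.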

For low count rates, both these models give virtually the same result, but their behavior is very different at higher rates (Fig. 6). The count loss predicted by the paralyzable model is much higher than that predicted by the nonparalyzable model. At extremely high count rates a paralyzable system records no counts at all, because the deadtime simply keeps extending.
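The divergence of the two models at high rates can be reproduced numerically. The sketch below assumes the standard closed forms of the idealized models, $m = n/(1+n\tau)$ for the nonparalyzable case and $m = n e^{-n\tau}$ for the paralyzable case; the function names and the deadtime value are illustrative. It also inverts the nonparalyzable expression to recover the true rate from an observed rate.

```python
import math


def observed_nonparalyzable(n, tau):
    """Observed rate for a type I (nonparalyzable) system."""
    return n / (1.0 + n * tau)


def observed_paralyzable(n, tau):
    """Observed rate for a type II (paralyzable) system."""
    return n * math.exp(-n * tau)


def true_from_nonparalyzable(m, tau):
    """Invert the nonparalyzable model: n = m / (1 - m * tau)."""
    if m * tau >= 1.0:
        raise ValueError("observed rate cannot reach 1/tau in this model")
    return m / (1.0 - m * tau)


tau = 100e-6  # illustrative deadtime of 100 microseconds
for n in (1e2, 1e3, 1e4, 1e5, 1e6):
    m_np = observed_nonparalyzable(n, tau)
    m_p = observed_paralyzable(n, tau)
    print(f"n = {n:9.0f}  nonparalyzable = {m_np:8.1f}  paralyzable = {m_p:8.1f}")
```

In these expressions the nonparalyzable rate saturates at $1/\tau$, whereas the paralyzable rate peaks at $n = 1/\tau$ and then falls toward zero. Because the paralyzable curve is double valued, inverting it requires knowing on which side of the peak the measurement lies.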

Fig. 6. Paralyzable and nonparalyzable models of deadtime.

Feller [17], while proposing the idealized deadtime models, pointed out that actual counter behavior lies somewhere between the two idealized cases. This can easily be shown by a Taylor expansion of the paralyzable expression: truncating after the first-order term yields the nonparalyzable expression, while retaining higher order terms makes the result approach that of the paralyzable model, as shown in Fig. 7. The first attempt to develop a generalized deadtime model was reported by Albert and Nelson [18]. Their generalized approach is based on associating a probability θ with the detector becoming paralyzed. The value of θ can vary from 0 to 1. Thus, in the generalized deadtime model, only a fraction θ of all incoming events is capable of triggering an extension of the deadtime. For the extreme case θ = 1, the model approaches type II (paralyzable). For the other extreme, θ = 0, the model becomes type I (nonparalyzable).
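One way to see the Taylor expansion argument numerically is sketched below. It assumes the expansion is organized by writing the paralyzable model as $m = n / e^{n\tau}$ and truncating the series for $e^{n\tau}$ at a chosen order; this organization and the function name are assumptions made for illustration. Truncation at first order gives $n/(1+n\tau)$, the nonparalyzable expression, and higher orders converge to the paralyzable result.

```python
import math


def truncated_model(n, tau, order):
    """Paralyzable model with exp(n*tau) replaced by its Taylor series
    truncated after the term of the given order."""
    x = n * tau
    series = sum(x ** k / math.factorial(k) for k in range(order + 1))
    return n / series


n, tau = 1e4, 100e-6  # illustrative values giving n * tau = 1
for order in (1, 2, 3, 5, 10):
    print(f"order {order:2d}: m = {truncated_model(n, tau, order):.1f}")
print(f"paralyzable limit: m = {n * math.exp(-n * tau):.1f}")
```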

Fig. 7. Plot to show Taylor expansion of Paralyzable model.

3.2. Hybrid deadtime model

The major contribution to the generalized approach for deadtime came from Takacs [19], who was the first to obtain the Laplace transform of the interval density for generalized deadtime. Muller, in a series of reports and publications [20], [21], further simplified the generalized model given by Takacs. The output (observed) count rate (m) for generalized deadtime can be expressed as:

$$m = \frac{n\theta}{e^{\theta n \tau} + \theta - 1} \tag{4}$$

where τ is the generalized deadtime and θ is the probability of paralysis. Another representation of this development was presented by Lee and Gardner [22], who made use of two independent deadtimes. The hybrid model proposed by them:

$$m = \frac{n e^{-n\tau_P}}{1 + n\tau_N} \tag{5}$$

makes use of the paralyzable deadtime $\tau_P$ and the nonparalyzable deadtime $\tau_N$. Using least squares fitting of data obtained from a decaying $^{56}$Mn source, they obtained the two required deadtimes for a GM counter. The order of the two deadtimes was alluded to in their discussion but not identified precisely. It appears that for the G-M counter they placed a nonparalyzable deadtime before the paralyzable deadtime. They did not offer any justification for this order. One would expect a significant change in the deadtime behavior if the order of the two deadtimes were reversed. The hybrid model is a good application of the generalization originally proposed by Albert and Nelson [18]. Lee and Gardner did not offer any practical method to determine the two deadtimes. Most recently, Hou and Gardner proposed an improved version of the original two-deadtime model by further dividing the paralyzing and non-paralyzing deadtimes each into three components [23]. The approach seems to produce improved results, but it does add to the empirical nature of the solution. With the newly proposed solution (their case 2) the user would need to know four deadtimes. This approach is similar to including additional terms from the Taylor expansion of the expression.

Patil and Usman [24] presented a graphical technique to obtain the two parameters of a generalized deadtime model using data from a fast decaying source. They offered a simple modification to the hybrid model [22], simplifying it back to a form similar to the original Takacs equation (Eq. (4)):

$$m = \frac{n e^{-n\tau f}}{1 + n\tau(1 - f)} \tag{6}$$

where τ is the deadtime and f is the paralysis factor.
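The generalized and hybrid models can be compared numerically against their idealized limits. The sketch below assumes the commonly quoted forms of these models: $m = n\theta/(e^{\theta n\tau} + \theta - 1)$ for the Takacs generalized model, $m = n e^{-n\tau_P}/(1 + n\tau_N)$ for the two-deadtime hybrid model, and $m = n e^{-n\tau f}/(1 + n\tau(1-f))$ for the paralysis factor form. The function names and numerical values are hypothetical.

```python
import math


def takacs_generalized(n, tau, theta):
    """Generalized deadtime model; theta is the probability of paralysis."""
    if theta == 0.0:
        return n / (1.0 + n * tau)  # nonparalyzable limit
    # exp(x) + theta - 1 is written as expm1(x) + theta for small-theta accuracy.
    return n * theta / (math.expm1(theta * n * tau) + theta)


def hybrid_two_deadtimes(n, tau_p, tau_n):
    """Hybrid model with paralyzable (tau_p) and nonparalyzable (tau_n) deadtimes."""
    return n * math.exp(-n * tau_p) / (1.0 + n * tau_n)


def hybrid_paralysis_factor(n, tau, f):
    """Hybrid model with one deadtime tau split by a paralysis factor f."""
    return hybrid_two_deadtimes(n, tau * f, tau * (1.0 - f))


n, tau = 1e4, 100e-6  # illustrative values giving n * tau = 1
for fraction in (0.0, 0.25, 0.5, 0.75, 1.0):
    m_t = takacs_generalized(n, tau, fraction)
    m_h = hybrid_paralysis_factor(n, tau, fraction)
    print(f"theta = f = {fraction:4.2f}: generalized {m_t:7.1f}, hybrid {m_h:7.1f}")
```

Both forms reduce to the nonparalyzable result at 0 and to the paralyzable result at 1, but they differ for intermediate values, which is in line with the inherent difference between paralysis factor based models and the two-deadtime model noted in the literature.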

In their paper, Patil and Usman [24] referred to the probability of paralyzing as the paralysis factor f. Measurements were made to obtain the paralysis factor and the deadtime for an HPGe detector. Using the graphical technique they found a deadtime of 5–10 μs and a paralysis factor approaching unity. Yousaf and co-workers compared the behavior of the traditional deadtime models with the recently proposed hybrid deadtime models [25]. They clearly demonstrated the inherent difference between the paralysis factor based models and the two-deadtime model. They concluded that the use of a single deadtime model for a given detector under all operating conditions is not advisable, let alone the use of one model for all detectors under all operating conditions. Therefore, one must carefully examine the applicability of a deadtime model for the given operating conditions. Their conclusion highlighted the need for additional work in the area of deadtime modeling and count rate correction.

Hasegawa and co-workers [26] proposed a technique for measuring higher count rates based on the system clock. They recognized that in some parts of the data acquisition system processing is performed on a fixed system clock, and that a latching or buffering system is used to retain event information temporarily in order to synchronize the output event with the system clock. This latching capability allows the system to measure more counts than the standard nonparalyzable model predicts. Their system can record one event per system cycle irrespective of the arrival time of the true events; unless there are no true events in a cycle, one event is recorded per system cycle. In this manner, the system is able to record more events than a nonparalyzable system. Based on a Poisson distribution of the input count rate, the observed count rate of the on-clock nonparalyzable count loss model, with τ taken as the duration of one system clock cycle, is expressed by

$$m = \frac{1}{\tau}\left(1 - e^{-n\tau}\right) \tag{7}$$

For a fast system clock there can be significant improvement in the counting efficiency by relying on the system clock.
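The gain from on-clock counting can be illustrated with a short comparison. The sketch assumes that one event is recorded in every clock cycle of length τ that contains at least one true event, so that the observed rate is $(1 - e^{-n\tau})/\tau$, and it compares this with the standard nonparalyzable expression for the same τ. The function names and values are illustrative.

```python
import math


def observed_on_clock(n, tau):
    """One count per clock cycle of length tau containing at least one event."""
    return -math.expm1(-n * tau) / tau  # (1 - exp(-n * tau)) / tau


def observed_nonparalyzable(n, tau):
    """Standard nonparalyzable model with deadtime tau."""
    return n / (1.0 + n * tau)


tau = 100e-6  # illustrative clock cycle / deadtime in seconds
for n in (1e3, 1e4, 1e5):
    m_clock = observed_on_clock(n, tau)
    m_np = observed_nonparalyzable(n, tau)
    print(f"n = {n:8.0f}: on-clock {m_clock:7.1f}, nonparalyzable {m_np:7.1f}")
```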

4. Detector system deadtime measurement and correction methods

One of the simplest methods of estimating the overall deadtime of a counting system is the two-source method, originally developed by Moon [27] and later incorporated in the work of other researchers [28]. The two-source method is based on observing the counting rate from two sources individually and in combination. Because the counting losses are nonlinear, the observed rate of the combined sources will be less than the sum of the rates observed when the two sources are counted individually, and the deadtime can be calculated from the difference.

The advantage of the two-source method is that it uses observed data to predict the deadtime. Because the two-source method is essentially based on observation of the difference between two nearly equal, large numbers, careful measurements are required to get reliable values for the deadtime.

A well-defined, repeatable geometry is necessary to measure deadtime using the two-source method, which might be difficult to achieve in some situations. A dummy source is often used to replicate the exact geometry when counting the sources individually. Likewise, if the background is not negligible, the algebraic expression for the deadtime is a little more involved. It is also important to point out that, to achieve good measurements, counting statistics must be taken into account in the experiment. In some cases scattering from the surroundings may also influence the measurements.
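For the simplest case, the two-source calculation can be sketched as follows. The sketch assumes the nonparalyzable model and negligible background, so that the true rates satisfy $n_1 + n_2 = n_{12}$ with $n = m/(1 - m\tau)$ for each measurement. Substituting and simplifying gives the quadratic $m_1 m_2 m_{12}\tau^2 - 2 m_1 m_2 \tau + (m_1 + m_2 - m_{12}) = 0$, of which the smaller root is the physical one. The function name and the synthetic rates are hypothetical.

```python
import math


def two_source_deadtime(m1, m2, m12):
    """Nonparalyzable deadtime from observed rates of source 1, source 2,
    and both sources together, assuming zero background."""
    z = m12 * (m1 + m2 - m12) / (m1 * m2)
    if not 0.0 <= z <= 1.0:
        raise ValueError("rates are inconsistent with the nonparalyzable model")
    return (1.0 - math.sqrt(1.0 - z)) / m12


# Synthetic check: generate observed rates from known true rates and deadtime.
tau_true = 100e-6
n1, n2 = 5000.0, 7000.0
m1 = n1 / (1.0 + n1 * tau_true)
m2 = n2 / (1.0 + n2 * tau_true)
m12 = (n1 + n2) / (1.0 + (n1 + n2) * tau_true)

tau_est = two_source_deadtime(m1, m2, m12)
print(f"recovered deadtime = {tau_est * 1e6:.2f} microseconds")
```

Because the result depends on the small difference $m_1 + m_2 - m_{12}$, statistical uncertainties in the three measured rates propagate strongly into the estimated deadtime.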

The decaying source method is another commonly used technique for measuring the overall deadtime of a detection system [1]. This technique, which requires a short-lived radioisotope, is based on the known behavior of a decaying source, where the true count rate varies as:

$$n = n_0 e^{-\lambda t} + n_b \tag{8}$$

where $n_b$ is the background count rate, $n_0$ is the true rate at the beginning of the measurement and λ is the decay constant of the particular isotope. Assuming negligible background and substituting (8) in the expression for the nonparalyzable model, one obtains:

$$m e^{\lambda t} = -n_0 \tau m + n_0 \tag{9}$$

If m is plotted as the abscissa and $m e^{\lambda t}$ as the ordinate, the slope of the resulting straight line is $-n_0\tau$. The initial true rate $n_0$ (often unknown) can be obtained from the intercept of the straight line with the y-axis (Fig. 8). Finally, the deadtime is calculated from the ratio of the slope ($-n_0\tau$) to the intercept ($n_0$). A similar procedure can be carried out for the paralyzable model, where the abscissa is taken to be $e^{-\lambda t}$ and the ordinate is taken to be $\lambda t + \ln m$. In this case, the slope is again $-n_0\tau$ and the intercept is $\ln n_0$. One can use this information to estimate the deadtime.
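The graphical procedure can be sketched as a straight-line fit. The example below assumes negligible background and uses the linearized forms $m e^{\lambda t} = -n_0\tau m + n_0$ (nonparalyzable) and $\lambda t + \ln m = -n_0\tau e^{-\lambda t} + \ln n_0$ (paralyzable). The half-life, initial rate and deadtime are hypothetical values used only to generate noise-free synthetic data.

```python
import math


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    count = len(xs)
    mean_x = sum(xs) / count
    mean_y = sum(ys) / count
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_nonparalyzable(times, rates, lam):
    """Return (tau, n0) from a plot of m * exp(lam * t) against m."""
    ys = [m * math.exp(lam * t) for t, m in zip(times, rates)]
    slope, intercept = fit_line(list(rates), ys)
    return -slope / intercept, intercept  # slope = -n0 * tau, intercept = n0


def fit_paralyzable(times, rates, lam):
    """Return (tau, n0) from a plot of lam * t + ln(m) against exp(-lam * t)."""
    xs = [math.exp(-lam * t) for t in times]
    ys = [lam * t + math.log(m) for t, m in zip(times, rates)]
    slope, intercept = fit_line(xs, ys)
    n0 = math.exp(intercept)  # intercept = ln(n0)
    return -slope / n0, n0


# Noise-free synthetic data (hypothetical isotope and detector parameters).
lam = math.log(2.0) / 9000.0  # decay constant for a 9000 s half-life
n0_true, tau_true = 20000.0, 50e-6
times = [2000.0 * i for i in range(20)]
true_rates = [n0_true * math.exp(-lam * t) for t in times]
m_np = [n / (1.0 + n * tau_true) for n in true_rates]
m_p = [n * math.exp(-n * tau_true) for n in true_rates]

tau_np, n0_np = fit_nonparalyzable(times, m_np, lam)
tau_p, n0_p = fit_paralyzable(times, m_p, lam)
print(f"nonparalyzable fit: tau = {tau_np * 1e6:.2f} us, n0 = {n0_np:.0f}")
print(f"paralyzable fit:    tau = {tau_p * 1e6:.2f} us, n0 = {n0_p:.0f}")
```

With real data, departure of the plotted points from a straight line indicates that the chosen idealized model does not describe the system.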

Fig. 8. Decaying source method for (a) nonparalyzable and (b) paralyzable model.

The decaying source method has the advantage of not only measuring the value of the deadtime but also testing the validity of the idealized paralyzable and nonparalyzable models. However, care must be taken in selecting a suitable isotope. The isotope used for this technique must be pure, with a single half-life that is neither too long nor too short, so that the entire counting rate range can be measured in a reasonable time. Moreover, the half-life of the decaying isotope must be known with good accuracy. A disadvantage of the decaying source method is that the deadtime determination takes a long time. Yi and coworkers recently applied the decaying source method to the calibration of dose rate meters [29] and reported good success.

A variation of the decaying source method is to measure a constant source at various distances from the detector. However, the distance between the source and the detector must be measured accurately: because of the $1/r^2$ dependence of the observed count rate, a given relative error in the distance produces roughly twice that relative error in the count rate. For low intensity measurements the distance is usually short; consequently the geometric variability (the point source, point detector assumption is no longer valid) may also affect the overall quality of the results. Scattering from the surroundings, or even from the air between the source and the detector, can also complicate the measurements. This is particularly true for high energy sources, where the scattering interactions can be complex.
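The sensitivity of this approach to distance errors can be illustrated with a short calculation. The sketch assumes an ideal point source and point detector with no scattering or attenuation, so that the true rate scales as $1/r^2$; the reference rate and distances are hypothetical.

```python
def rate_at_distance(rate_ref, r_ref, r):
    """True rate at distance r, scaled from a reference rate at r_ref (1/r^2 law)."""
    return rate_ref * (r_ref / r) ** 2


rate_ref, r_ref = 1000.0, 10.0  # illustrative: 1000 counts/s at 10 cm
nominal = rate_at_distance(rate_ref, r_ref, 20.0)
shifted = rate_at_distance(rate_ref, r_ref, 20.0 * 1.01)  # distance 1% too large
relative_error = shifted / nominal - 1.0
print(f"rate at 20 cm: {nominal:.1f} counts/s")
print(f"1% distance error changes the rate by {relative_error:.2%}")
```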

Patil and Usman [24] contributed to the effort by proposing a modified decaying source method to measure the two detector parameters, i.e., the deadtime and the paralysis factor of the detector system. The detection system consisted of the radiation detector, preamplifier, amplifier and multichannel scaler. An HPGe detector was tested using short-lived isotopes ($^{56}$Mn and $^{52}$V). A multichannel scaler with zero deadtime was used to collect the decay statistics. The plot below (Fig. 9) shows the characteristic rise and fall behavior as the source decays away.