Keywords

1. Introduction

As defined by the National Science Foundation (NSF), cyberlearning is “the use of networked computing and communications technologies to support learning” [1]. Grounded in current knowledge about how people learn, cyberlearning research can be defined as the study of how new technologies can be used to advance learning and to enable learning experiences that were never possible before. Of course, it is impossible to study cyberlearning without using the technology itself [2]. The best way researchers have found to investigate potential advances is to design learning experiences and study them [3, 4, 5, 6, 7, 8]. This is the motivation for our work with SEP-CyLE.

The cyberlearning community report “The State of Cyberlearning and the Future of Learning with Technology” [2] concludes that the major differences between today's cyberlearning research and earlier work in the field lie in the usability, availability, and scalability of the technologies involved. This report was organized by CIRCL (the Center for Innovative Research in Cyberlearning) and co-authored by 22 members of the U.S. cyberlearning community, including both computer scientists and learning scientists.

The ISO 9241 report [9] defines usability as “the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use”, where effectiveness is the accuracy and completeness with which users achieve specified goals; efficiency is the resources expended in relation to the accuracy and completeness of the goals achieved; and satisfaction is the comfort and acceptability of the work system to its users and other people affected by its use [10]. While usability is essential to the success of any product design, the design's utility is also a major consideration in evaluating its quality. Usability and utility are closely related; however, they are not identical. According to Nielsen [11], utility is concerned with usefulness, whereas usability includes not only utility but also effectiveness, efficiency, and satisfaction.
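The three ISO 9241 components above are often operationalized with simple task-level measures. The Python sketch below is illustrative only: it assumes a hypothetical `TaskAttempt` record (a completion flag plus time on task) and uses completion rate for effectiveness, goals achieved per second for time-based efficiency, and a rescaled mean Likert rating for satisfaction. These are common choices, not measures prescribed by ISO 9241 or used in this article.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class TaskAttempt:
    """Hypothetical record of one participant's attempt at one task."""
    completed: bool   # whether the specified goal was achieved
    seconds: float    # time spent on the attempt (resource expended)


def effectiveness(attempts: List[TaskAttempt]) -> float:
    """Completion rate: fraction of attempts that achieved the goal."""
    return sum(a.completed for a in attempts) / len(attempts)


def time_based_efficiency(attempts: List[TaskAttempt]) -> float:
    """Mean goals achieved per second; failed attempts contribute 0."""
    return mean((1.0 if a.completed else 0.0) / a.seconds for a in attempts)


def satisfaction(ratings: List[int], scale_max: int = 5) -> float:
    """Mean Likert rating (1..scale_max) rescaled to the range [0, 1]."""
    return (mean(ratings) - 1) / (scale_max - 1)


attempts = [TaskAttempt(True, 40), TaskAttempt(False, 90),
            TaskAttempt(True, 60), TaskAttempt(True, 50)]
print(effectiveness(attempts))                   # 0.75
print(round(time_based_efficiency(attempts), 4))
print(satisfaction([4, 5, 3, 4]))                # 0.75
```

Rescaling satisfaction to [0, 1] keeps all three measures on comparable scales; any other aggregation (for example, per-task rather than pooled rates) would be an equally valid choice.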

To this end, this article focuses on usability and utility evaluations of cyberlearning environments used in computer science education. The cyberlearning environment selected for this research is a frequently used environment called SEP-CyLE [12], which is currently used at several institutions in the USA by researchers and learners [3, 4, 5, 7, 8, 13, 14, 15]. SEP-CyLE (Software Engineering and Programming Cyberlearning Environment) is an external web-based learning tool that helps instructors integrate software development concepts into their programming and software engineering courses. We use SEP-CyLE in this research because it provides a variety of collaborative learning settings with learning materials covering both programming and software testing concepts and tools. SEP-CyLE includes learning objects and tutorials on a variety of computer science and software engineering topics; in this experiment, we chose learning objects related to software testing. Our research aim is to investigate the utility of SEP-CyLE and to evaluate the usability of its user interface based on actual user experience.

1.1. Contributions

This article extends the previous work on integrating software testing concepts and tools into CS and SE courses to improve software testing knowledge of CS/SE students [4, 6, 7, 8, 16, 17]. This article builds upon that work by making the following contributions:

1. A new UI/UX evaluation framework that we consider more appropriate for evaluating cyberlearning designs. The framework extends current practice by focusing not only on cognitive aspects but also on usefulness considerations that may influence cyberlearning usability. The framework uses four user studies: pre/posttests, heuristic evaluation, cognitive walkthrough, and a UX survey.

2. Using network analysis to measure the design accuracy of a cyberlearning system from the usability and utility perspectives, and to identify students' interaction patterns with cyberlearning designs in STEM courses.

3. Usability and utility recommendations and comments for the future design of cyberlearning educational environments in CS and SE courses.

1.2. Problem definition

The number of users (students and instructors) of cyberlearning environments continues to grow [2, 18]. For example, the substantial growth in the use of SEP-CyLE in recent years is evidence of the increasing importance of employing cyberlearning tools in the learning process. Moreover, as of summer 2017, the NSF had made approximately 280 cyberlearning research grant awards [2, 18, 19]. This is further evidence of the emerging need to integrate technological advances that allow more personalized learning experiences for those not served well by current educational practices. However, the usability of cyberlearning environments and their effectiveness remain open research questions [20].

Evaluating the usability and utility of any given design is not an easy task [21, 22]. Usefulness and effectiveness present significant challenges in evaluating a cyberlearning environment. Factors such as the rapid growth in cyberlearning technologies, the difficulty of identifying cyberlearning users, and the context of the tasks provided by the cyberlearning environment impose additional difficulties. As Nielsen observed [21], if a system cannot fulfill the user's needs (i.e., usefulness), then it does not matter that the system is easy to use (i.e., effectiveness). Similarly, if the user interface is too difficult to use, then it does not matter whether the system can do what the user requires, since the user cannot make it happen.

While there are many methods for inspecting a design's usability, one of the most valuable is testing with usability techniques such as the think-aloud protocol [23]. What these methods have in common is that evaluators inspect a UI with the goal of finding usability problems in the system design. As Nielsen noted, thinking aloud may be one of the most valuable usability engineering methods [24]. Nielsen also suggested that the same user research methods that improve usability can be used to study a system's utility [11].

Our goal in the UI/UX evaluation of the SEP-CyLE tool is to help decide whether SEP-CyLE fulfills the requirements of a well-designed cyberlearning environment for fundamental programming and software engineering courses by covering all important features of usability and utility. To accomplish this, we begin by understanding the key elements of a successful cyberlearning design and then build an evaluation framework that uses these elements to better understand the purpose of learning and how users learn. We hope that this understanding will be helpful in the future design of new cyberlearning technologies for these purposes and in their integration into cyberlearning environments, making computing education more meaningful and effective for a broad audience.

1.3. Article organization

The rest of the article is organized as follows. Section 2 introduces background information on cyberlearning environments and SEP-CyLE. Section 3 describes the proposed cyberlearning evaluation framework. Section 4 discusses the evaluation design. Section 5 presents the evaluation results. Section 6 discusses our takeaways and highlights from the data. We identify threats to validity in Section 7, and conclude and outline future work in Section 8.

2. Background and related work

This section provides the necessary background on cyberlearning and the SEP-CyLE cyberlearning environment. Complete details of SEP-CyLE's design and usage are described elsewhere [3]; here, we provide only an overview of the tool, its current design, and the main features available to its two types of users, i.e., instructors and students.

2.1. Cyberlearning

The cyberlearning definition produced by the NSF in 2008 [1], quoted in Section 1, focused on networked technologies that support communication between users. Earlier, in 2005, Zia [25], during a presentation on game-based learning at the National Academy of Sciences, defined cyberlearning as “Education + Cyber-infrastructure”. In 2013, Montfort [26] defined cyberlearning as any form of learning that is facilitated by technology in a way that changes the learner's access to and interaction with information. Other works have considered cyberlearning a modern twist on e-learning [27, 28]. While e-learning focuses on transmitting information via a digital platform, cyberlearning uses a digital platform to establish a comprehensive, technology-based learning experience in which students derive their own understanding, and thus their learning [29].

Essentially, the primary goal of both cyberlearning and e-learning is to provide learning experiences via a technology-based platform. The major difference lies in how such learning experiences are provided. For example, cyberlearning can use advanced electronic technologies to support learners' activities effectively and efficiently. Collaborative tools, gamification, and virtual environments are all examples of cyberlearning technologies that are transforming education. These technologies are effective when they deliver appropriate learning content and services that fulfill user needs in a usable manner [20]. Thus, cyberlearning extends e-learning by providing effective learning initiatives. Clearly, cyberlearning has several alternative definitions in the literature, each emphasizing different aspects.

Usability is a necessary condition for online learning success. According to several experts in the field [21, 30], “usability is often the most neglected aspect of websites, yet in many respects it is the most important”. If a cyberlearning environment is difficult to use and fails to state what it offers and what users can do, users simply leave; they will not spend much time trying to figure out an interface. To improve usability, the most basic and useful method is usability testing, which has three major components: representative users, representative tasks, and observation of users [11].

Usability assessments in the literature have been conducted using different methods and for different purposes, and there is no universal or standard technique on which usability evaluators agree [20]. Instead, different evaluation techniques support different purposes. For example, evaluators in other work have used analytical evaluation techniques (e.g., heuristic evaluation [21], cognitive walkthrough [31], and keystroke-level analysis [32]) to identify usability problems during the design process, and empirical evaluation techniques (e.g., formal usability testing and questionnaires) to obtain actual measures of efficiency, effectiveness, and user satisfaction [30].

2.2. SEP-CyLE cyberlearning environment

SEP-CyLE was originally developed by Clarke et al. [3] at Florida International University as a cyberlearning environment called the Web-Based Repository of Testing Tutorials (WReSTT) [6, 16]. Its major goal was to improve the testing knowledge of CS/SE students by providing a set of learning objects (LOs) and tutorials that satisfy the learning objectives. These LOs and their corresponding tutorials are ordered by difficulty level. WReSTT subsequently evolved into a collaborative learning environment (now called SEP-CyLE) that includes social networking features, such as awarding virtual points for student social interaction about testing. These points are called virtual points because an instructor may choose not to count them toward students' grades, using them only for the motivation that collaborative learning and gamification may provide.

SEP-CyLE, the current version of WReSTT used in this article, is a configurable learning and engagement cyberlearning environment that contains a growing amount of digital learning content in many STEM areas. Currently, the learning content focuses primarily on software engineering and programming courses. Unlike other e-learning management systems, SEP-CyLE uses embedded learning and engagement strategies (ELESs) to involve students more deeply in the learning process. These ELESs are considered cyberlearning technologies and currently include collaborative learning, gamification, problem-based learning, and social interaction. The learning content in SEP-CyLE is packaged as LOs and tutorials. LOs are chunks of multimedia learning content that should take learners between five and fifteen minutes to complete and contain both a practice (formative) assessment and a summative assessment. The collaborative learning features allow students to upload a user profile, gain virtual points after completing a learning object, post comments on the discussion board, and monitor the activities of peers.

The choice of learning objects used in a given course depends on the level of the course, the course learning outcomes, and instructor preferences. A variety of learning objects and tutorials are available in SEP-CyLE. For example, instructors can use SEP-CyLE in both undergraduate and graduate courses by assigning students learning content at appropriate difficulty levels. Note that all the students involved in the experiment described in this article were undergraduates.

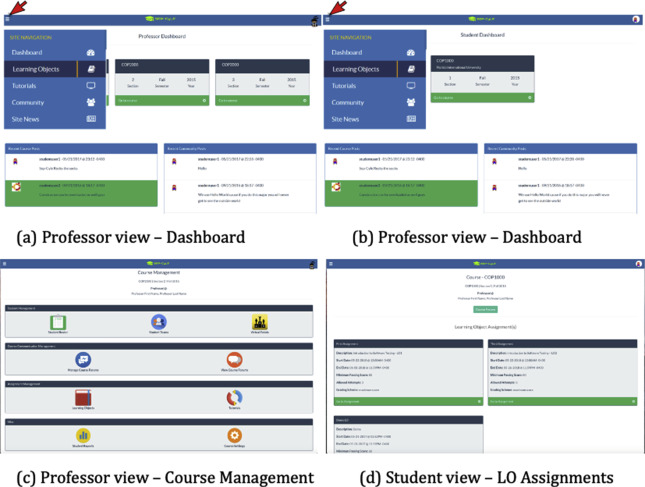

2.2.1. Student and instructor views

SEP-CyLE provides two views, one for instructors and one for students, as shown in Figure 1. As Figure 1 (a) shows, SEP-CyLE allows an instructor to create a course module, enroll students in the course, and provide students with access credentials for SEP-CyLE. After creating a course, the instructor can use the course management page, shown in Figure 1 (c), to 1) upload the class roster; 2) create unique login credentials for the students; 3) assign students to virtual teams; 4) describe the rubric for allocating virtual points for different student activities; 5) create student reports; and 6) browse and assign learning objects and testing tool tutorials.

Figure 1. SEP-CyLE's Professor view and Student view.

As shown in Figure 1 (b), students can create a user profile by uploading a profile picture (and gain virtual points), browse the testing tutorials, complete assigned learning objects by scoring at least 80% on the assigned quizzes (and gain virtual points), watch tutorial videos on different testing tools (e.g., JUnit, JDepend, EMMA, CPPU, Cobertura), interact with other students in the class through testing-based discussions (and gain virtual points for relevant discussions), and monitor the activity stream for the whole class. These features are illustrated in Figure 1 (d).
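The point rules described above can be summarized in a small sketch. Only the 80% passing threshold comes from the text; the point values in `RUBRIC`, the activity names, and the `award` and `leaderboard` helpers are hypothetical, since SEP-CyLE lets each instructor define the rubric for allocating virtual points.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

PASS_THRESHOLD = 0.80  # a learning object counts as completed at >= 80% on its quiz

# Hypothetical instructor rubric: virtual points per activity type.
RUBRIC = {"profile_picture": 5, "learning_object": 20, "discussion_post": 2}


def award(points: Dict[str, int], student: str, activity: str,
          quiz_score: Optional[float] = None) -> bool:
    """Add virtual points for an activity; return True if points were awarded."""
    if activity == "learning_object" and (quiz_score is None or quiz_score < PASS_THRESHOLD):
        return False  # no points until the assigned quiz is passed
    points[student] += RUBRIC[activity]
    return True


def leaderboard(points: Dict[str, int]) -> List[Tuple[str, int]]:
    """Rank students by points (descending), breaking ties by name."""
    return sorted(points.items(), key=lambda kv: (-kv[1], kv[0]))


points: Dict[str, int] = defaultdict(int)
award(points, "ana", "profile_picture")
award(points, "ana", "learning_object", quiz_score=0.85)
award(points, "ben", "learning_object", quiz_score=0.70)  # below threshold: no points
award(points, "ben", "discussion_post")
print(leaderboard(points))  # [('ana', 25), ('ben', 2)]
```

Keeping the rubric as data rather than code mirrors the idea that the instructor, not the system, decides how much each activity is worth.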

2.2.2. SEP-CyLE UX survey

The SEP-CyLE survey we used had a total of 25 questions. These questions aimed to elicit students' reflections and are divided into three categories: Table 1 shows the 14 questions related to the overall reaction to SEP-CyLE, Table 2 shows the 6 questions related to testing concepts, and Table 3 shows the 5 questions related to collaborative learning.

Table 1. Overall reaction to SEP-CyLE.


Table 2. Testing related questions.


Table 3. Collaborative learning-related questions.


3. Proposed cyberlearning evaluation framework

This section presents our work on evaluating SEP-CyLE's usability and validating its effectiveness. We present our proposed framework for evaluating cyberlearning environments in general and the SEP-CyLE environment specifically.

We conduct our evaluation through four user studies: a pre/posttest instrument, a heuristic evaluation, cognitive walkthroughs with a think-aloud protocol, and SEP-CyLE's UX survey. We chose not to rely on heuristic evaluation alone, since it can find usability problems without suggesting how to solve them [21]. The heuristic evaluation serves as a starting point for troubleshooting, while the think-aloud protocol allows us to identify potential SEP-CyLE improvements and gain insights into how users interact with cyberlearning environments, thus benefiting the field as a whole.

3.1. Research objectives and questions

The objective of our evaluation is twofold. First, we want to measure SEP-CyLE's utility, that is, whether it satisfies user needs, by studying how SEP-CyLE's supporting materials affect students' acquisition of software testing knowledge. Second, we want to measure SEP-CyLE's usability and ease of use. Together, these objectives lead to the two primary research questions that this study addresses:

- RQ1: Does SEP-CyLE meet utility requirements? This question helps us measure the impact of using SEP-CyLE on the software testing knowledge gained by students.

- RQ2: Does SEP-CyLE meet usability requirements? This question helps us measure SEP-CyLE's ease of use and engaging features.

As shown in our proposed evaluation framework in Figure 2, a pre/posttest instrument, students' final scores, and the SEP-CyLE UX survey were used to address the first research question (RQ1). We asked users to perform a heuristic evaluation and a think-aloud protocol to address the second research question (RQ2). Finally, we applied graph-theoretic analysis to the heuristic evaluation questions to gain insight into students' perceptions of these questions.
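This section does not detail how the graph is built from the heuristic evaluation questions. One plausible construction, sketched below purely as an assumption, treats each question as a node and links two questions when respondents' Likert ratings for them are strongly correlated (Pearson r at or above a chosen threshold); a question's degree then indicates how closely students' perceptions of it track the other questions. The 0.5 threshold and the sample ratings are hypothetical.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Set, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0


def question_graph(ratings: Dict[str, List[int]],
                   threshold: float = 0.5) -> Set[Tuple[str, str]]:
    """Undirected edges between questions whose ratings correlate >= threshold."""
    return {(q1, q2) for q1, q2 in combinations(sorted(ratings), 2)
            if pearson(ratings[q1], ratings[q2]) >= threshold}


def degrees(ratings: Dict[str, List[int]],
            edges: Set[Tuple[str, str]]) -> Dict[str, int]:
    """Number of neighbours of each question node."""
    deg = {q: 0 for q in ratings}
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg


# Hypothetical ratings: one list per question, one entry per respondent (same order).
ratings = {"Q1": [5, 4, 4, 2, 3], "Q2": [5, 4, 3, 2, 3], "Q7": [1, 2, 5, 4, 3]}
edges = question_graph(ratings)
print(edges)                    # {('Q1', 'Q2')}
print(degrees(ratings, edges))  # {'Q1': 1, 'Q2': 1, 'Q7': 0}
```

A rank correlation such as Spearman's may suit ordinal Likert data better; Pearson is used here only to keep the sketch short.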

Figure 2. Concepts and attributes of our UI/UX evaluation framework for a cyberlearning environment (SEP-CyLE is used as a case study).

3.2. Evaluation framework design

Assessing the UI/UX design quality of a cyberlearning environment first requires understanding the key elements of any cyberlearning design. The definition of cyberlearning varies, and this disagreement reflects underlying differences in understanding the purpose of learning and how people learn. Consequently, evaluating cyberlearning tools remains problematic for researchers [26]. We rely on the following definitions of cyberlearning, usability, and utility. Cyberlearning: “the use of networked computing and communications technologies to support learning” [1]. Usability: “the effectiveness, efficiency, and satisfaction with which specified users achieve specified goals in particular environments”. Utility of an object: “how practical and useful it is” [11]. We drew on the literature from several fields to develop a framework for evaluating the UI/UX of the SEP-CyLE cyberlearning environment, and of cyberlearning in general. Our framework identifies key concepts of cyberlearning and the important attributes associated with each concept. As shown in Figure 2, technology, for example, is one of the five important concepts in designing and evaluating a cyberlearning environment; we therefore chose the technologies implemented in SEP-CyLE to conduct our evaluation. The evaluation model has five primary concepts, as follows:

- Context: to evaluate the utility of a cyberlearning environment, the context in which it is used is important. For example, SEP-CyLE was designed to support students' learning of the software development process, specifically software testing concepts, techniques, and tools.

- Users: a cyberlearning environment is valuable to the extent that there are users who value its technologies and services, which gives it a purpose for existing. In SEP-CyLE, students and instructors are the two user classes. In our work, both types of users are involved in the evaluation process; however, students are the major source of evaluation data.

- Technology: in our evaluation process, we try to understand how users learn with technology and how technology can facilitate this learning. The evaluation data are collected in a way that relates to the specific technologies used in the cyberlearning environment, such as collaborative learning and gamification. The collaborative learning component in SEP-CyLE is based mainly on the involvement, cooperation, and teamwork factors defined by Smith et al. [33].

SEP-CyLE achieves these factors by rewarding students with virtual points, requiring collaborative participation between team members, and providing social engagement opportunities. For more details about the collaborative learning strategies provided by SEP-CyLE, please refer to [5].

The other cyberlearning technology used in SEP-CyLE and measured by our evaluation is gamification. The game mechanics implemented in SEP-CyLE [5] are participant points, participation levels, and leaderboards. These mechanics were adapted from the work of Li et al. [34].

- Utility: to evaluate the usefulness of the technology used, we analyzed the collected data related to the technology itself. The analysis considers user satisfaction and user needs with respect to that technology in the specified context. For example, we used the SEP-CyLE UX survey to measure user satisfaction with the collaborative learning component and its effectiveness in the learning process. Another measure of SEP-CyLE's usefulness for learning achievement is the mapping of each student's pre/posttest score to that student's final course grade.

- Usability: we measure how easy a particular technology is to use by a specific class of users in a specified context to advance learning. For example, we used heuristic evaluation and cognitive walkthroughs to measure the subjective and objective values of collaborative learning and problem-based (task-based) learning, respectively.

These concepts point to the activities and the processes in the evaluation model as shown in Figure 2. The concepts are interrelated, and we believe that for a cyberlearning environment to be successful it should address all of them.

3.3. Subjects and courses

Within the context of an interactive educational environment, it is essential to elicit feedback from real users [35]. Additionally, when analytical evaluations are used, it is recommended that they be performed by experts in heuristic evaluation and cognitive walkthroughs [21], since this type of evaluation requires knowledge and experience to apply effectively. In our study, we chose students as subjects because students are SEP-CyLE's actual users. Because we did not have access to professional heuristic evaluation and cognitive walkthrough evaluators, we carried out these assessments with students in a UI/UX course who had been trained in these techniques as part of the course content.

As shown in Table 4, the course that supplied the subjects and assessors in this study is a 200-level (sophomore-level) undergraduate software engineering course on UI/UX design. Students from two sections of this course in Fall 2017 conducted these assessments. To reduce bias, participants in the first section, Section A, were asked to perform cognitive walkthroughs, and participants in the second section, Section B, were asked to complete both the heuristic survey and the SEP-CyLE UX survey. Students in Section B studied usability principles during the UI/UX course before completing the heuristic evaluation and the UX survey at the end of the course. They reviewed the SEP-CyLE website's interfaces and compared them against the accepted usability principles they had learned in the course.

Table 4. Participating subjects.

| Class | Enrollment | Pre/Posttest Part.ᵃ | Pre/Posttest %ᵇ | Cognitive Walkthrough Part.ᵃ | Cognitive Walkthrough %ᵇ | Heuristic & SEP-CyLE Surveys Part.ᵃ | Heuristic & SEP-CyLE Surveys %ᵇ |
|---|---|---|---|---|---|---|---|
| 212A∗ | 37 | 27 | 73.0 | 20 | 54.1 | -- | -- |
| 212B∗∗ | 32 | 24 | 75.0 | -- | -- | 25 | 78.1 |

- 51 participants in the pre/posttest study.
- 45 participants in the cognitive walkthrough, heuristic evaluation, and SEP-CyLE's UX survey.
- ∗ Section A is considered the control group from the pre/posttest perspective.
- ∗∗ Section B is considered the treatment group from the pre/posttest perspective.
- ᵃ Part. = Participation.
- ᵇ % = Percentage.
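The percentages in Table 4 are participation counts divided by section enrollment. The short check below recomputes them, and the two totals in the table notes, from the table's own counts; the dictionary layout is just a convenient encoding.

```python
ENROLLMENT = {"212A": 37, "212B": 32}

# Participation counts from Table 4 (a missing entry means the section did not take part).
PARTICIPATION = {
    "pre/posttest": {"212A": 27, "212B": 24},
    "cognitive walkthrough": {"212A": 20},
    "heuristic & SEP-CyLE surveys": {"212B": 25},
}


def rates(participation, enrollment):
    """Participation percentage per study and section, rounded to one decimal."""
    return {
        study: {sec: round(100 * n / enrollment[sec], 1) for sec, n in counts.items()}
        for study, counts in participation.items()
    }


table = rates(PARTICIPATION, ENROLLMENT)
print(table)
pre_post_total = sum(PARTICIPATION["pre/posttest"].values())             # 51
usability_total = (sum(PARTICIPATION["cognitive walkthrough"].values())
                   + sum(PARTICIPATION["heuristic & SEP-CyLE surveys"].values()))  # 45
```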

In addition, the pre/posttest instrument was used to assess SEP-CyLE's utility, that is, the software testing knowledge students gained after their exposure to SEP-CyLE. The pretest was administered to students in both sections in week 1, and the posttest was administered to both sections at the end of the same semester, in week 14. Students in both sections were exposed to SEP-CyLE; the only difference is that students in Section A were not explicitly assigned or instructed to learn any software testing concepts or complete any software testing assignments using SEP-CyLE.
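One common way to compare knowledge gain between the control group (Section A) and the treatment group (Section B) is Hake's class-average normalized gain, ⟨g⟩ = (mean post - mean pre) / (max - mean pre). The sketch below is illustrative: the article does not state that it uses this metric, and the maximum score and the student scores are hypothetical.

```python
from statistics import mean
from typing import Sequence


def normalized_gain(pre: Sequence[float], post: Sequence[float], max_score: float) -> float:
    """Class-average normalized gain: (mean post - mean pre) / (max - mean pre)."""
    pre_mean, post_mean = mean(pre), mean(post)
    if pre_mean >= max_score:
        raise ValueError("pretest mean is already at the maximum; gain is undefined")
    return (post_mean - pre_mean) / (max_score - pre_mean)


MAX_SCORE = 8  # hypothetical: one point per pre/posttest question

# Hypothetical scores for a few students in each section.
control_g = normalized_gain(pre=[3, 4, 5], post=[4, 5, 5], max_score=MAX_SCORE)
treatment_g = normalized_gain(pre=[3, 4, 5], post=[6, 7, 6], max_score=MAX_SCORE)
print(f"control <g> = {control_g:.3f}, treatment <g> = {treatment_g:.3f}")
```

Normalizing by the room left for improvement makes groups with different pretest means easier to compare than raw score differences would.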

The number of participants was chosen based on recommendations in the literature, summarized in Nielsen's work [21, 36]. Based on a number of case studies, Nielsen outlines the number of participants needed as follows:

- Usability testing using cognitive walkthroughs: at least 5 users are needed to find almost as many usability problems as would be found with more participants.

- Heuristic evaluation: at least 20 users are needed to obtain statistically significant numbers in the final results.
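The five-user guideline comes from Nielsen and Landauer's problem-discovery model, in which the share of usability problems found by n test users is 1 - (1 - L)^n, where L is the probability that a single user exposes a given problem. The sketch below assumes L = 0.31, the average Nielsen reported across projects; the real value varies from study to study, and the model does not cover the 20-user guideline above.

```python
def share_found(n_users: int, p_single: float = 0.31) -> float:
    """Expected fraction of usability problems found by n_users (Nielsen-Landauer model)."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability")
    return 1.0 - (1.0 - p_single) ** n_users


for n in (1, 3, 5, 10, 15):
    print(n, round(share_found(n), 3))
# With p_single = 0.31, five users are expected to reveal about 84% of the problems.
```

The curve flattens quickly, which is why adding users beyond five yields diminishing returns for problem discovery.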

As shown in Table 4, a total of 51 students participated in the pre/posttest study. Twenty students from Section A participated in the cognitive walkthrough evaluation, and 25 students from Section B participated in the heuristic evaluation and the SEP-CyLE UX survey. There were no substantive differences in demographics or course preparation between the subjects in the two sections. While both sections were exposed to the same lectures, other course content, and SEP-CyLE, the subjects in Section B (treatment group) were explicitly instructed to study the software testing learning objects provided by SEP-CyLE. In contrast, students in Section A (control group) used SEP-CyLE only as the cyberlearning environment for their cognitive walkthrough usability inspection, without any explicit instructions to study the software testing LOs, tutorials, or quizzes it provides.

4. Experimental design

The following subsections describe the experimental design for evaluating SEP-CyLE's utility, usability, and ease of use within the proposed cyberlearning evaluation framework.

4.1. Utility evaluation

4.1.1. Pre/posttest instrument design

The pre/posttest instrument and the SEP-CyLE survey we used are both available for download at https://stem-cyle.cis.fiu.edu/ under the Publications tab. The pre/posttest was designed to identify students' knowledge of software testing before and after exposure to the learning objects in SEP-CyLE (only Section B was explicitly assigned these learning objects). The eight questions in the pre/posttest focused on the topics listed below:

- Q1: The objective of software testing.

- Q2: Identification of different testing techniques.

- Q3 and Q4: Use of testing tools related to unit testing, functional testing, and code coverage.

- Q5 and Q6: Familiarity with other online testing resources.

- Q7 and Q8: Importance of using testing tools in programming assignments.

4.1.2. SEP-CyLE UX survey design

To create our SEP-CyLE survey, we adapted and modified the original survey created by Clarke et al. [5], which they used to evaluate the two previous versions of SEP-CyLE: WReSTT v1 and WReSTT v2. The WReSTT survey originally consisted of 30 questions divided into five groups, as follows:

- Group 1 (1 question): focused on the use of testing resources other than WReSTT.

- Group 2 (14 questions): focused on the overall reaction to the WReSTT website. These questions were first created by Albert et al. [37] in 2013 and then adapted by Clarke et al. [5] to compare the overall reaction to both implementations of WReSTT (the initial version of SEP-CyLE). Here, we adapted the same questions and modified them to evaluate SEP-CyLE.

- Group 3 (6 questions): evaluated the impact of using WReSTT on learning software testing concepts and tools.

- Group 4 (5 questions): evaluated the impact of using the collaborative learning components in WReSTT. We modified these questions to evaluate SEP-CyLE's collaborative learning component.

- Group 5 (4 questions): open-ended questions providing feedback on the different versions of WReSTT.

In our SEP-CyLE survey, we used only Groups 2, 3, and 4, for a total of 25 questions.
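Scoring the 25-item survey by group is straightforward once the items are numbered. The sketch below assumes, hypothetically, that items 1-14 are the overall-reaction questions (Table 1), items 15-20 the testing questions (Table 2), and items 21-25 the collaborative learning questions (Table 3), each rated on a 1-5 Likert scale; the article does not specify the numbering or the aggregation.

```python
from statistics import mean
from typing import Dict, List

# Assumed item numbering for the 25 retained questions (Groups 2, 3, and 4).
GROUPS = {
    "overall reaction": range(1, 15),         # 14 items
    "testing": range(15, 21),                 # 6 items
    "collaborative learning": range(21, 26),  # 5 items
}
assert sum(len(items) for items in GROUPS.values()) == 25


def group_means(responses: List[Dict[int, int]]) -> Dict[str, float]:
    """Mean Likert rating per group, pooling all answered items of all respondents."""
    return {
        name: mean(resp[i] for resp in responses for i in items if i in resp)
        for name, items in GROUPS.items()
    }


# Two hypothetical respondents: one rates every item 4; the other rates every
# item 5 but skipped item 21.
r1 = {i: 4 for i in range(1, 26)}
r2 = {i: 5 for i in range(1, 26) if i != 21}
print(group_means([r1, r2]))
```

Skipped items are simply left out of the pooled mean rather than imputed, which is one of several reasonable policies for missing survey answers.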

4.2. Usability evaluation

4.2.1. Cognitive walkthrough design

Throughout the evaluation, participants were asked to verbalize their responses to all questions. Before beginning the cognitive walkthrough, we asked each participant to develop a strategy they expected to use to achieve the given goal and to explain that strategy verbally to the evaluator. The evaluator then asked three questions before the participant took any action on each screen. The three questions were:

1. What is your immediate goal?

2. What are you looking for in order to accomplish that goal?

3. What action are you going to take?

Participants could answer the first question in terms of the task they were assigned, but were more likely to respond with an intermediate goal, such as “find the learning object” or “find a software testing tutorial”. In answering the second question, participants named the type of control they were looking for, such as a button or a menu selection. The third question was answered once participants had decided what they intended to interact with, such as clicking a button or typing input.

After these questions were answered, the participant completed the action and the evaluator asked the following two questions:

1. What happened on the screen?

2. Is your goal complete?

Participants answered the first question based on what they perceived on the screen. Their response might be “nothing”, for example when they attempted an invalid action; more typical responses were “the screen changed” or “an error message appears”. Participants usually answered the second question with “yes” or “no”. These steps were repeated until participants completed the primary tasks we provided for the evaluation. The evaluator noted any usability problems observed during the cognitive walkthrough. Additionally, we used video recordings capturing both audio and on-screen actions to enable more detailed analysis and comparison after each cognitive walkthrough.

We gave the users the following software testing related tasks to be completed during the cognitive walkthroughs:

1. Identify names of the learning object (LO) assignments available

2. Navigate through one LO assignment

3. Identify the name of that assignment

4. Identify the number of pages provided in the content of this assignment

5. Take the quiz assigned by this learning assignment

6. Identify the number of questions provided in this quiz

7. Identify the final score you have after completing this quiz

8. Identify the number of tutorials provided by this LO

9. Navigate through one tutorial

10. Identify the name of this tutorial

11. Identify the number of videos provided with the tutorial

12. How many external tutorial links are available

For Tasks 4, 6, 8, 11, and 12, participants were expected to respond with a number answering “how many”. Tasks 1, 3, and 10 required participants to respond with the names of assignments or tutorials, and Task 7 required them to report their final quiz score. The evaluator did not ask participants to justify their responses, although participants were free to explain their actions unprompted.

4.2.2. Heuristic evaluation design

To reduce bias, we asked a different group of participants from those who completed the cognitive walkthrough to complete this evaluation. Our heuristic evaluation survey, distributed digitally, asked users to answer a series of standard questions about the usability of SEP-CyLE on a Likert scale [38, 39]. The usability questions focused on three primary areas: visibility, affordance, and feedback. The survey also included secondary questions to gain further insight into navigation, language, errors, and user support. The questions were drawn from Nielsen's work [21] and are grouped into the categories listed below:

Visibility of system status

1. It is always clear what is happening on the site?
2. Whenever something is loading, a progress bar or indicator is visible?
3. It is easy to identify what the controls are used for?

Match between system and the real world

4. The system uses plain English?
5. The website follows a logical order?
6. Similar subjects/items are grouped?

User control and freedom

7. The user is able to return to the main page from every page?
8. The user is able to undo and redo any actions they may take?
9. Are there needless dialog prompts when the user is trying to leave a page?

Consistency and standards

10. The same words are used consistently for any actions the user may make?
11. The system follows usual website standards?

Error prevention

12. The system is error free?
13. There are no broken links or images on the site?
14. Errors are handled correctly, if they occur?

Flexibility and efficiency of use

15. Users may tailor their experience so they can see information relevant to them easily?

Help users recognize, diagnose, and recover from errors

16. Errors are in plain text?
17. The problem that caused an error is given to the user?
18. Suggestions for how to deal with an error are provided?

Help and documentation

19. Help documentation is provided to the user?
20. Live support is available to the user?
21. User can email for assistance?

Respondents answered these questions on a five-point Likert scale (1–5), ranging from strongly disagree (1) to strongly agree (5) [38]. Encoding the responses numerically in this way allowed an exploratory quantitative analysis of the data.
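One way to prepare such responses for quantitative analysis is sketched below. It is a minimal Python example, assuming the survey export stores each answer as a text label, that maps the five labels to the scores 1 to 5 and summarizes each question by its mean and median. The label strings, the `encode` and `summarize_items` functions, and the sample responses are hypothetical illustrations, not the study's data or tooling.

```python
from statistics import mean, median

# Five-point Likert scale: strongly disagree (1) to strongly agree (5).
# The exact label strings are an assumption about the survey export.
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def encode(label: str) -> int:
    """Convert a Likert label (case and surrounding spaces ignored) to its score."""
    return LIKERT[label.strip().lower()]


def summarize_items(responses: dict[str, list[str]]) -> dict[str, tuple[float, float]]:
    """Return (mean, median) of the numeric scores for each question."""
    summary = {}
    for question, labels in responses.items():
        scores = [encode(label) for label in labels]
        summary[question] = (mean(scores), median(scores))
    return summary


if __name__ == "__main__":
    # Hypothetical answers from four participants to two of the questions.
    sample = {
        "Q1": ["agree", "strongly agree", "neutral", "agree"],
        "Q13": ["disagree", "neutral", "agree", "agree"],
    }
    for question, (avg, mid) in summarize_items(sample).items():
        print(f"{question}: mean={avg:.2f}, median={mid}")
```

Because Likert scores are ordinal, the median is usually the safer summary; the mean is shown only because it is a common exploratory statistic.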

4.2.3. Network analysis design

Social Network Analysis (SNA) has emerged as a set of techniques for analyzing social structures that depend heavily on relational data, across a broad range of applications. Many real-time data mining applications involve sets of records or entities in which the associations among attributes are the primary focus. Graph-theoretic analysis of complex networks has become important for understanding, modeling, and gaining insight into the structural and functional aspects of the graph (network) representations of such associations. By modeling a set of entities, their attributes, and the relationships among these entities as a network, it is possible to extract useful, non-trivial information about the relationships within transactional data. Han and Kamber [40] studied association rule mining, one of the most important data mining techniques, which aims to extract important correlations, frequent patterns, and associations among sets of entities in transaction databases. Measuring the similarity among transactions provides a wealth of information for identifying communities of related transactions that exhibit common behavior in specific applications [41].
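To make the two standard measures behind association rule mining concrete, the sketch below computes support and confidence for a single candidate rule A ⇒ B over a toy list of transactions. The `support` and `confidence` helpers and the transactions are hypothetical; the code illustrates the textbook definitions of these measures rather than any procedure used in this study.

```python
from typing import FrozenSet, List, Set

Transaction = Set[str]


def support(itemset: FrozenSet[str], transactions: List[Transaction]) -> float:
    """Fraction of transactions that contain every item in `itemset`."""
    hits = sum(1 for t in transactions if itemset <= t)
    return hits / len(transactions)


def confidence(antecedent: FrozenSet[str], consequent: FrozenSet[str],
               transactions: List[Transaction]) -> float:
    """Estimate P(consequent | antecedent) as support(A ∪ B) / support(A)."""
    base = support(antecedent, transactions)
    if base == 0:
        return 0.0  # the antecedent never occurs, so the rule has no evidence
    return support(antecedent | consequent, transactions) / base


# Hypothetical transactions: items a learner interacted with in one session.
transactions = [
    {"tutorial", "quiz", "video"},
    {"tutorial", "quiz"},
    {"tutorial", "video"},
    {"quiz"},
]
A, B = frozenset({"tutorial"}), frozenset({"quiz"})
print(support(A | B, transactions))  # 0.5
print(confidence(A, B, transactions))
```

Frequent-itemset algorithms such as Apriori exist to avoid enumerating every candidate itemset; for a single rule, the direct count above is enough.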

We apply graph-theoretic analysis to the collected relational data to quantify the degree of relationship between pairs of variables and thereby selectively measure the accuracy of the system under study. The 21 heuristic evaluation survey questions on the usability of SEP-CyLE represent the entities in the relational data used for further analysis. For graph construction, we use the Pearson correlation coefficient, which measures the linear relationship between two variables, as the measure of correlation strength [42]. Given two variables $X$ and $Y$ with $n$ paired observations $(x_i, y_i)$, the coefficient $r_{XY}$ is the ratio of their covariance to the product of their standard deviations, as in Eq. (1):

$$r_{XY} = \frac{n\sum_{i=1}^{n} x_i y_i - \sum_{i=1}^{n} x_i \sum_{i=1}^{n} y_i}{\sqrt{n\sum_{i=1}^{n} x_i^{2} - \left(\sum_{i=1}^{n} x_i\right)^{2}}\;\sqrt{n\sum_{i=1}^{n} y_i^{2} - \left(\sum_{i=1}^{n} y_i\right)^{2}}} \tag{1}$$

The resulting values of $r_{XY}$ lie in the range -1 to +1 inclusive and indicate the degree of correlation between $X$ and $Y$. The results and discussion of the graph-based analyses are presented in Section 5.2.3.
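A minimal sketch of the correlation-graph construction described above follows. It assumes the responses are stored as one list of Likert scores per survey question (each list indexed by respondent) and connects two questions when the absolute value of their Pearson coefficient reaches a cutoff. The `pearson` and `correlation_graph` functions, the `threshold` parameter, and the sample data are hypothetical; the paper does not state which cutoff, if any, was used.

```python
import math
from itertools import combinations


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson r via the computational form with sums over n paired observations."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    sx, sy = sum(x), sum(y)
    sxy = sum(a * b for a, b in zip(x, y))
    sxx = sum(a * a for a in x)
    syy = sum(b * b for b in y)
    vx = n * sxx - sx ** 2  # n^2 times the population variance of x
    vy = n * syy - sy ** 2  # n^2 times the population variance of y
    if vx <= 0 or vy <= 0:
        raise ValueError("r is undefined when a variable has zero variance")
    return (n * sxy - sx * sy) / math.sqrt(vx * vy)


def correlation_graph(items: dict[str, list[float]],
                      threshold: float) -> dict[tuple[str, str], float]:
    """Weighted edge list: question pairs whose |r| meets the hypothetical threshold."""
    edges = {}
    for (q1, x), (q2, y) in combinations(items.items(), 2):
        r = pearson(x, y)
        if abs(r) >= threshold:
            edges[(q1, q2)] = r  # keep the sign so negative correlations stay visible
    return edges


if __name__ == "__main__":
    # Hypothetical scores from five respondents for three questions.
    responses = {
        "Q1": [4, 5, 3, 4, 2],
        "Q2": [4, 5, 3, 5, 2],
        "Q3": [2, 1, 4, 3, 5],
    }
    for (a, b), r in correlation_graph(responses, threshold=0.9).items():
        print(f"{a} -- {b}: r = {r:+.2f}")
```

Thresholding on |r| treats strong negative correlations as edges too; storing the signed r as the edge weight lets a later step separate the two cases or feed the weights to community detection.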