1. Introduction

Many countries, including the United Kingdom, have an ageing population, with an increase in the average age and proportion of older people [1]. In 2010, there were approximately 10 million people over the age of 65 in the United Kingdom, with this number projected to rise by over 50% by 2020 [2]. One consequence of the ageing population is an increase in life expectancy, implying greater healthcare needs. However, the relationship between age and dependency is complicated and not determined by age alone. Indeed, the risk factor profile of those born more recently is worse than that of previous generations [3]. This can be attributed, in part, to the link between economic development and increased risky behaviours [4]. Risk factors such as tobacco and alcohol use, inactivity, and poor diet choices are associated with chronic diseases including obesity, cardiovascular disease, and diabetes [4].

Recent advances in wearable technology, including microelectromechanical (MEM) devices, physiological sensors, low-power wireless communications, and energy harvesting, have set the stage for a significant change in health monitoring. Technology can be discreetly worn and used as a means to monitor health and potentially enable older adults to live safely and independently at home. Early detection of key health risk factors enables more effective interventions to reduce the impact of, or even avoid, serious or chronic illness. Inertial measurement devices, such as accelerometers, represent a range of sensors that can be used for healthcare monitoring and are being extensively investigated for the monitoring of human movement [5] and daily activity [6]. Another application for wearable systems is rehabilitation [7]. Many systems are also commercially available for the monitoring of sports and some aspects of health.

The richness of data available using wearable sensors presents challenges in the way that it is processed to provide accurate and relevant outputs. To fully exploit this data for the purposes of healthcare monitoring, data fusion techniques can be employed to make inferences and improve the accuracy of the output. Hall and Llinas [8] provide a detailed introduction to, and discussion of, multisensor data fusion. A review of data fusion techniques, including the different categories of technique, is provided by Castanedo [9]. With a focus on body sensor networks, Fortino et al. [10] discuss wearable multisensor fusion with an emphasis on collaborative computing.

This paper introduces wearable sensors for human monitoring in the context of health and well-being, including a snapshot of current commercial wearable sensor systems. An overview of data fusion techniques and algorithms is offered, including data fusion architecture, feature selection, and inference algorithms. These are put into the context of wearable technology for healthcare applications including activity recognition, fall detection, gait and ambulation, biomechanical modelling, and physiological sensing. Related challenges of data fusion for healthcare are presented and discussed.

2. Wearable sensors

Wearable sensors can be considered in three categories: motion, biometric, and environmental sensors. Sensors used to capture human motion include inertial sensors such as accelerometers, gyroscopes, and magnetometers. By combining a tri-axial accelerometer, gyroscope, and magnetometer, inertial measurement units can be made for nine-degree-of-freedom tracking; these are used for biomechanical modelling. Common biometric sensors are used to measure heart rate, muscle activation, respiration, oximetry, blood pressure, galvanic skin response, heat flux, perspiration, and hydration level. Electrocardiogram (ECG) and electromyography (EMG) detect the electrical activity produced by the heart and muscles, respectively, and are interpreted into heart rate and muscle activation.

For a wearable monitoring system to be practical it needs to meet several key criteria: to be non-invasive, intuitive to use, reliable, and provide relevant feedback to the wearer. The number of devices, their location, and the attachment method should be considered during design, and are usually application specific. Wearable sensor systems also have to take the target users’ needs, such as dexterity or cognitive ability, into account. Devices can be attached directly to the skin using some form of adhesive, attached mechanically using a clip, strap, or belt, or incorporated directly into clothing or shoes. Advanced fabrication techniques can now create ‘flexible/stretchable electronics’ for integrated circuits, electronics, and sensors [11]. Such systems can be applied directly to the skin, enabling discreet sensing possibilities, e.g. devices developed by MC10 Inc. [12].

It is essential that the system is reliable and measures with acceptable accuracy, providing the user with relevant feedback. In the research literature this is often presented as the accuracy of identifying specific events or health aspects, or in terms of sensitivity and specificity: the proportion of positive cases that are correctly identified and the proportion of negative cases that are correctly identified, respectively.
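As a minimal sketch of how the two proportions described above (commonly called sensitivity and specificity) are computed, the following example uses binary labels for a hypothetical event detector. The function name and the label values are illustrative assumptions and are not taken from any of the cited studies.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = event, 0 = no event)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # events correctly detected
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # events missed
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # non-events correctly rejected
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical ground truth and detector output for eight windows of data.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Reporting both values matters when events are rare: a detector that never raises an alarm has perfect specificity but zero sensitivity.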

The past decade has seen major advances in sensing technologies, including MEMs and physiological sensors. Wireless low power communications, such as BLE, enable sensing technology to be integrated into wearable devices, clothing, and in the future embedded about the person without the restrictions of wires or the need to download data. Low power sensing and communications also enable wearable energy harvesting to be a viable option for powering and recharging these systems.

Commercially, wearable sensor systems are available for human monitoring, and some of their output features are tabulated in Table 2. Much of the software developed for commercial devices is proprietary; however, some systems are able to provide raw data, or have been explicitly designed for the purposes of research. Table 3 describes wearable devices that are commercially available for activity, physiological, and biomechanical monitoring, including both consumer and research devices. As this is a wide and rapidly changing landscape, the table gives only a snapshot of commercial wearable devices, listing the features monitored and the sensors used for daily monitoring, together with a few examples for specific applications. Devices that only provide step count have not been included. A large proportion of these sensors target the health and fitness industry, and track the amount and intensity of activity performed, including measures such as estimates of energy expenditure and calories burned. For research purposes, however, a much broader range of outputs is being investigated; these, and the techniques used to achieve them, are described in greater detail in Section 5.

Table 1. Table of abbreviations.

| Abbreviation | Definition | Terminology |
|---|---|---|
| ADL | Activities of daily living | Medical |
| ANN | Artificial neural networks | Technical |
| BLE | Bluetooth low energy | Technical |
| COPD | Chronic obstructive pulmonary disease | Medical |
| DT | Decision tree | Technical |
| ECG | Electrocardiogram | Medical |
| EEG | Electroencephalogram | Medical |
| EMG | Electromyography | Medical |
| GMM | Gaussian mixture models | Technical |
| HR | Heart rate | Medical |
| HRV | Heart rate variability | Medical |
| KF | Kalman filter | Technical |
| k-NN | k-nearest neighbour | Technical |
| MEM | Microelectromechanical | Technical |
| PF | Particle filter | Technical |
| QoL | Quality of life | Medical |
| SpO2 | Capillary oxygen saturation | Medical |
| SVM | Support vector machines | Technical |

Table 2. Output features from commercial health monitoring systems.

| Activity features | | | Biometric features | | | |
|---|---|---|---|---|---|---|
| Steps | Activity | Sleep | Heart | Breath | Head | Other |
| Step count | Lying, sitting, standing, stepping, walking, running | Duration | Heart rate (HR) /sec or min | | | Blood pressure |
| Cadence | | Latency | HR (R-R intervals) | | Number of impacts to the head | Glucose level |
| Average steps/day | Intensity: low, moderate, high | REM sleep duration | HR variability | Respiratory rate | | Skin temperature |
| Number of steps at moderate/high intensity | Duration and percentage of time at each intensity level | Light sleep duration | HR zone | | Intensity of head impacts | Perspiration |
| | | Deep sleep duration | ECG | Blood oxygen level (SpO2) | | EEG (electroencephalography) |
| Distance | Total exercise time | Toss and turn count | 20 min cardiovascular score | | Head injury criteria | |
| Elevations | Energy expenditure: kcal / MET.hr | Efficiency | 60 min endurance score | | | EMG (electromyography); stress level |


2.1. Sensor placement

The placement of wearable sensors for health monitoring is motivated by three main driving forces: (1) what data is required or provided by the sensors; (2) where it is considered acceptable to wear the sensors; and (3) the number of sensors the user is willing to wear. For commercial systems the most common place to wear a sensor is on the wrist or arm, although many systems can be worn at multiple locations, such as on the chest using a clip or as a pendant, and on the thigh and ankle (Table 3). The waist and wrist are intuitive and unobtrusive places to wear sensors, as many people are already accustomed to wearing watches or belts. In a study conducted by van Hees et al. [13] to investigate the estimation of daily energy expenditure using a wrist-worn accelerometer, the acceptability of wearing the device on the hip or wrist was also examined. Both sensor placements were rated as highly acceptable; however, men on average preferred wearing the sensor on the wrist.

Systems with more niche applications need to be worn at more specific locations relevant to the information being acquired, e.g. the Reebok Checklight with MC10 helmet [14] that determines the number and severity of impacts to the head while participating in sports.

Sensor placement for activity recognition has been investigated in several studies. Atallah et al. [15] investigated the most relevant features and sensor locations for discriminating activity levels, demonstrating that the most suitable sensor location depends on the activities being monitored. Liu et al. [16] investigated different combinations of sensors and locations for physical activity assessment. The “best” results, i.e. the ones giving the highest activity recognition accuracy, were obtained using all the sensors, followed by a combination of the wrist and waist worn sensors. Patel et al. [17] also investigated different combinations of sensors for monitoring patients with chronic obstructive pulmonary disease (COPD) and again found the “best” results were obtained using all the sensors (in this case 10 accelerometers distributed about the body). The “best” single sensor location was found to be on the left or right thigh. Pärkkä et al. [18] conducted a study to determine which sensors are most information rich for activity classification and included both motion and physiological sensors. Accelerometers were found to be most informative for activity monitoring; however, the position of the sensors (on the wrists) did not enable the separation of sitting and standing. Interestingly, physiological sensors did not prove as useful for activity monitoring due to the delay in physiological reactions to activity changes, whereas accelerometers react immediately.

Sensor orientation can also affect classification accuracy. Thiemjarus et al. [19] compared the performance of the k-NN (k-nearest neighbour) classifier using accelerometry data of activities with the sensor orientated in different directions. By transforming the signal to eliminate the orientation of the sensor, an overall accuracy of 91% was achieved.

3. Data fusion

This section discusses data fusion models and the different levels of data fusion. The types of features that can be extracted to characterise the data, and techniques to select them, are also described.

3.1. Data fusion models

A useful data fusion model is the Joint Directors of Laboratories (JDL) model described by Hall and Llinas [8], which was developed to improve communications among military researchers and system developers. Luo and Kay [20] define a hierarchical model consisting of four levels of abstraction at which fusion can take place: signal level fusion, pixel level fusion (for image data), feature level fusion, and symbol level fusion. Dasarathy [21] expanded on the hierarchical data fusion models by defining five fusion processes characterised by each process's input-output mode, e.g. data in - feature out fusion. For the application of healthcare many models have been suggested. Lee et al. [22] proposed a hierarchical model for the application of pervasive healthcare to minimise the probability of unacceptable error. Fortino et al. [10] described a framework for collaborative body sensor networks, C-SPINE. Gong et al. [23] proposed a multi preference-driven data fusion model and demonstrated its application for a wireless sensor network healthcare monitoring system.

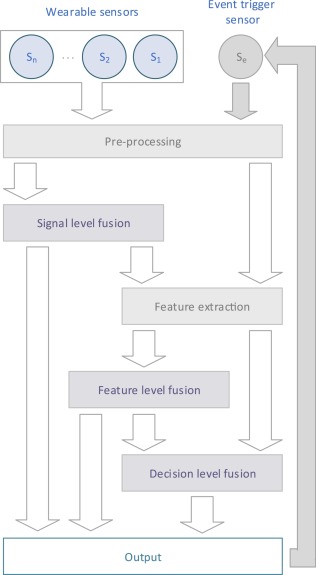

Fig. 1 describes a generic centralised hierarchical data fusion architecture for a wearable health monitoring system, drawing on three of the data fusion levels of abstraction (signal, feature, and decision) and elements from the previously described models. Data is sampled from the sensors (at a frequency appropriate to the sensor type and application) and transferred to the fusion centre, which may reside on a smart phone or a gateway. An obvious way to do this is by using wireless radio communications, such as Bluetooth low energy (BLE) or Zigbee. Alignment and cleaning of the data takes place at the pre-processing stage to take into account differences in sampling rates, timing offsets, and lost or corrupt data. Filtering would also take place at this stage. Data can then be processed at the appropriate level of fusion. Additionally, some sensors may be activated by an event trigger, which may be the result of the system's output. For example, in the case of a suspected fall detected using body worn accelerometry, a camera could be activated to gain additional context of the event.

Fig. 1. A data fusion architecture for wearable health monitoring systems incorporating concepts from [8] and [20].
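The alignment step of the pre-processing stage can be illustrated with a short sketch that resamples a slowly sampled signal onto a common timeline by linear interpolation, so that it can be combined sample-by-sample with a faster sensor. The function name, timestamps, and heart rate values are hypothetical, and linear interpolation is only one of several possible alignment choices.

```python
def resample_linear(timestamps, values, new_timestamps):
    """Linearly interpolate (timestamps, values) onto new_timestamps.

    Both timestamp lists must be sorted in increasing order. Requested
    times outside the measured range are clamped to the first/last value.
    """
    out = []
    j = 0  # index of the left-hand sample of the current interval
    for t in new_timestamps:
        if t <= timestamps[0]:
            out.append(values[0])
            continue
        if t >= timestamps[-1]:
            out.append(values[-1])
            continue
        # Advance until timestamps[j] < t <= timestamps[j + 1].
        while timestamps[j + 1] < t:
            j += 1
        t0, t1 = timestamps[j], timestamps[j + 1]
        w = (t - t0) / (t1 - t0)
        out.append(values[j] + w * (values[j + 1] - values[j]))
    return out


# Hypothetical heart rate samples at 1 Hz, aligned to a 2 Hz timeline.
hr_times = [0.0, 1.0, 2.0]
hr_values = [60, 62, 66]
common_times = [0.0, 0.5, 1.0, 1.5, 2.0]
aligned = resample_linear(hr_times, hr_values, common_times)
print(aligned)
```

In practice, timing offsets between devices and gaps caused by lost packets would also need to be handled before interpolation; those steps are omitted here.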

To interpret the sensor data, three main hierarchical levels at which data fusion takes place are commonly used: signal level data fusion (sometimes referred to as direct or raw data fusion), feature level fusion, and decision (symbolic or inference) level fusion [8]. Signal level fusion can be applied to combine commensurate data, i.e. data measuring the same property, directly. For example, to deduce kinematic parameters for biomechanical modelling, the Kalman filter (KF) can be used to estimate the state.

For data that is non-commensurate, fusion takes place at the feature level [8]. Features are extracted from the sensor data and used to form a feature vector that, after fusion, will result in a higher level representation of the data. If appropriate, output from the signal level fusion can be used as part of the feature vector. There is a wide range of parametric and non-parametric algorithms that can be used to classify the data into higher levels of abstraction; these are described in further detail in Section 4.

Decision level fusion is performed at the highest level of abstraction from sensor data and can be based on raw data, features extracted from the raw data, and symbols defined at the feature level fusion to make higher level deductions. Probabilistic methods are commonly used at the decision level due to the high levels of uncertainty; however, other methods that are tolerant of uncertainty can also be used, including artificial intelligence, fuzzy logic, and genetic algorithms.

3.2. Feature extraction and selection

To combine data for the classification or detection of an activity or event, characteristics, or features, are extracted from the sensor data as input for the data fusion algorithm. The features represent the information in the original signal and are usually calculated over fixed time windows that can range from 0.5 to 10 s long. Using a fixed window, an overlap in the data can be applied, with the effect of smoothing the output. Typically, a 1 s window is sufficient, with a 50% overlap with the previous window; however, this is application dependent and a longer or shorter window may be more appropriate. Features can be summarised into two main domains, time and frequency; however, some features incorporate both temporal and frequency elements, such as wavelets [24]. A summary of some of these features can be found in Table 4.

Table 4. Example features that can be extracted from sensor data.

| Domain | Type | Feature |
|---|---|---|
| Time | Signal characteristics | Absolute value |
| | | Range |
| | | Maximum/minimum |
| | | Zero crossings |
| | | Derivative |
| | | Integral |
| | | Jerk |
| | | Root mean square |
| | | Root-sum-of-squares (or signal magnitude vector) |
| | | Surface magnitude area |
| | Statistical characteristics | Mean |
| | | Median |
| | | Variance |
| | | Standard deviation |
| | | Skew |
| | | Kurtosis |
| | | Interquartile range |
| | | Percentiles |
| | | Pearson coefficients |
| | | Cumulative histograms |
| | | Cross correlation |
| | | Entropy |
| Frequency | | Fourier coefficients |
| | | Energy |
| | | Power |
| | | Wavelet features |
| | | Power spectral density |
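To make the windowing procedure concrete, the following sketch splits a single-axis signal into fixed windows with 50% overlap and computes a handful of the time domain features listed in Table 4. The sampling rate (50 Hz), the synthetic sine signal, and the function names are assumptions made for illustration; here "zero crossings" are counted about the window mean, which is one common convention.

```python
import math
import statistics


def sliding_windows(samples, window_size, overlap=0.5):
    """Yield fixed-length windows; consecutive windows share `overlap` of their samples."""
    step = max(1, int(window_size * (1 - overlap)))
    for start in range(0, len(samples) - window_size + 1, step):
        yield samples[start:start + window_size]


def window_features(window):
    """Compute a few time domain features for one window of samples."""
    mean = statistics.fmean(window)
    return {
        "mean": mean,
        "std": statistics.pstdev(window),
        "range": max(window) - min(window),
        "rms": math.sqrt(sum(x * x for x in window) / len(window)),
        # Sign changes of the mean-removed signal between adjacent samples.
        "zero_crossings": sum(
            1 for a, b in zip(window, window[1:]) if (a - mean) * (b - mean) < 0
        ),
    }


# Hypothetical 3 s accelerometer trace sampled at 50 Hz (a 2 Hz sine wave).
fs = 50
signal = [math.sin(2 * math.pi * 2 * n / fs) for n in range(3 * fs)]
features = [window_features(w) for w in sliding_windows(signal, window_size=fs)]
print(len(features), features[0]["rms"])
```

Each dictionary corresponds to one 1 s window; concatenating the values across sensors and axes gives the feature vector passed to the fusion algorithm.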

Feature selection describes the process by which features are chosen. This is sometimes based on empirical observation; however, search strategies can provide an objective means to select appropriate features. Search strategies fall broadly under two types: filter based, where the properties of the data are examined without knowledge of the inference algorithms to be used; and wrapper based, which use the performance of the target learning algorithm to inform the set of features [25]. An introduction to feature selection has been provided by Guyon and Elisseeff [26]. For wearable sensor applications, selecting the most appropriate features can make a great difference to the quality of the inference. Atallah et al. [15] compared feature sets for activity recognition compiled using several filter based feature selection algorithms, including Relief and Simba, which aim to maximise the margins between decision boundaries, and minimum redundancy maximum relevance.
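As a simple illustration of the filter based approach, the sketch below ranks features by a two-class Fisher score, i.e. the squared difference of the class means divided by the sum of the class variances. This criterion is chosen here only because it is compact; it is not one of the algorithms (Relief, Simba, minimum redundancy maximum relevance) compared in [15], and the feature values and labels are hypothetical.

```python
import statistics


def fisher_score(values, labels):
    """Two-class Fisher score of one feature: (mean0 - mean1)^2 / (var0 + var1)."""
    class0 = [v for v, y in zip(values, labels) if y == 0]
    class1 = [v for v, y in zip(values, labels) if y == 1]
    numerator = (statistics.fmean(class0) - statistics.fmean(class1)) ** 2
    denominator = statistics.pvariance(class0) + statistics.pvariance(class1)
    return numerator / denominator if denominator > 0 else float("inf")


def rank_features(feature_table, labels):
    """Return feature names ordered from most to least discriminative."""
    scores = {name: fisher_score(vals, labels) for name, vals in feature_table.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hypothetical per-window features; label 0 = static, 1 = dynamic.
feature_table = {
    "mean": [0.5, 0.4, 0.6, 0.5, 0.6, 0.4],
    "std": [0.1, 0.2, 0.1, 1.0, 1.2, 0.9],
}
labels = [0, 0, 0, 1, 1, 1]
ranking = rank_features(feature_table, labels)
print(ranking)
```

Because the score is computed from the data alone, without training a classifier, this is a filter method; a wrapper method would instead evaluate candidate feature subsets using the accuracy of the target classifier.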

A common problem for multi-sensory systems is a high dimensional feature space, which leads to increased computational costs and higher demands on memory. Algorithms such as independent component analysis and principal component analysis [24] can be used to reduce the dimensionality of the feature space. Deep learning offers an alternative approach, building features at multiple levels of a deep network. While deep learning has often been applied to static data, Längkvist et al. [27] provided a review of deep learning for time-series data. Plötz et al. [28] compared different types of features used to represent human activity data including: statistical metrics, fast Fourier transform coefficients, principal component analysis based features, and those derived using deep learning methods. A standard nearest neighbour classifier, which will be described later, was used to demonstrate the effectiveness of the features.

For systems reliant on wireless communications, including body worn systems, power consumption also requires consideration i.e. the trade-off between transmitting raw data to the fusion centre vs. extracting features for transmission on the sensing device.

4. Data fusion algorithm overview

In the following sections an overview of the different types of data fusion algorithms is presented, with examples from the research literature. For feature level data fusion, non-parametric algorithms (that do not make assumptions regarding the distribution of the data) and parametric algorithms are presented. At the decision level, algorithms including Bayesian approaches, fuzzy logic, and topic models are described.

4.1. Signal level algorithms

- Weighted averages - Averaging is a simple signal level fusion method for combining commensurate information by taking an average of all the sensor readings [20]. The contribution of the “worst” sensor’s error is reduced in the final estimate, although not eliminated completely. To reduce the impact of large erroneous sensor readings, weighted averages can be used [24]. For example, the weighted average of physiological temperature measurements could be taken from an array of body worn thermistors to find a single best estimate.

- The Kalman filter (KF) - The KF is a popular statistical state estimation method that can be used to fuse dynamic signal level data. The state estimates of the system are determined using a recursively applied prediction and update algorithm, which assumes that the state of a system at the current time is based on the state of the system at the previous time interval. One of the main advantages of the KF is that it is computationally efficient [29]. The KF is often used to fuse accelerometer and gyroscope information to provide better estimates, an example of which is the use of the KF to detect postural sway during quiet standing (standing in one spot without performing any other activity or leaning on anything) [30]. For non-linear filtering the extended KF or unscented KF can be used.

- Particle filtering (PF) - Particle filtering is a stochastic method to estimate moments of a target probability density when they cannot be computed analytically. The principle is to generate random samples, called particles, from an “importance” distribution that can be easily sampled. Each particle is then assigned a weight that corrects for the dissimilarity between the target and the importance probabilities. In the Bayesian context, particle filters are often used to estimate the mean of the posterior density. They have the benefit of estimating the full target distribution without any assumption, which makes them particularly useful for nonlinear/non-Gaussian systems. Djurić et al. [31] and Arulampalam [32] both provided a tutorial on PF theory. The PF can be used for biomechanical state estimation based on accelerometer and gyroscope data.
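The first two signal level methods above can be sketched in a few lines. The example below shows an inverse-variance weighted average of commensurate readings and a scalar Kalman filter with a random-walk state model. Both are minimal illustrations under stated assumptions: the thermistor readings, noise variances, and function names are hypothetical, inverse-variance weighting is one possible choice of weights, and the scalar filter is far simpler than the accelerometer and gyroscope fusion used in [30].

```python
def weighted_average(readings, variances):
    """Fuse commensurate readings, weighting each by the inverse of its noise variance."""
    weights = [1.0 / v for v in variances]
    return sum(w * r for w, r in zip(weights, readings)) / sum(weights)


def kalman_1d(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter assuming the state follows a random walk."""
    x, p = x0, p0  # state estimate and its variance
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but its uncertainty grows.
        p = p + process_var
        # Update: blend the prediction with the measurement using the Kalman gain.
        k = p / (p + meas_var)
        x = x + k * (z - x)
        p = (1 - k) * p
        estimates.append(x)
    return estimates


# Three hypothetical thermistors (degrees Celsius); the third is much noisier.
fused_temp = weighted_average([36.5, 36.7, 38.0], [0.01, 0.01, 1.0])

# Hypothetical constant signal of 5.0 observed 50 times, starting from x0 = 0.
estimates = kalman_1d([5.0] * 50, process_var=1e-3, meas_var=0.1)
print(round(fused_temp, 3), round(estimates[-1], 3))
```

The noisy third thermistor barely shifts the fused temperature, and the filter estimate moves from its initial guess towards the measured value as the gain settles.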

4.2. Feature level non-parametric algorithms

- k-Nearest Neighbour (k-NN) - One of the simplest classification algorithms, k-NN measures the distance between the unlabelled observations and the training samples to infer which class they belong to. The unlabelled observation is assigned the majority label of its nearest neighbours, where k is the number of training observations to be taken into account. Distance measures include the Euclidean and Manhattan distance. k-NN has been widely used and reported in the literature for activity classification applications [15], [16], [19], [33], [34], [35], [36], [37]. Bicocchi et al. [37], in particular, compared k-NN to several other instance based learning algorithms using a real-life activity set and achieved a precision of about 75% with k equal to 1.

- Decision Trees (DT) - DT or rule-based algorithms are a popular method used for classification. Rules are defined in the form of a “tree”, starting at the root, which is split into decision nodes that refine the class prediction with each level of the tree. Leaf nodes represent the predicted class of the unknown data [5]. DT can be constructed manually by empirically defining rules; however, algorithms such as ID3 and C4.5 are available to automatically generate trees based on the data. Other DT algorithms include CART, random tree, random forest, and J48. Examples of the use of DT for activity recognition include [17], [18], [34], [35], [38], [39], [40], [41].

- Support Vector Machines (SVM) - SVM have been extensively used for human activity classification [16], [17], [36], [39], [42], [43] and can be used for both linear and non-linear classification problems. SVM is a binary classifier that finds the separation between two classes. The data is mapped into a high dimensional space using a kernel function (such as a Gaussian, sigmoid, or radial basis function). A hyperplane is then found that maximises the margin between the examples of the two classes [44]. In a comparative study by Liu et al. [16] to determine the best sensor configuration to recognise activities, SVM performed better than the k-NN and Naive Bayes classifiers, with an accuracy ranging from 76% using a single hip worn accelerometer to 88% using a hip and a wrist worn accelerometer together with a ventilation sensor that measures features associated with breathing.

- Artificial Neural Network (ANN) and Deep Learning - An ANN is a biologically inspired computational model to describe functions, consisting of a network of simple computing elements, or nodes [45]. An ANN structure is composed of several layers of nodes connected by weighted links. Inputs into the ANN are propagated forward through the layers to compute the output of the network, as follows: for each node, the sum of the input values multiplied by their weights is found. The output for this node is then calculated by the activation function, such as the sigmoid function. To train the network, the internal connective weights are adjusted using techniques such as back propagation, which minimises the error between the network’s output and the target output [45]. ANN have been applied to the problem of human activity recognition; some examples include [18], [36], [46], [47]. Pärkkä et al. [18], Roy et al. [46], and Altun et al. [36] conducted studies to compare the performance of ANN to other algorithms. Yang et al. [47] implemented an activity recognition strategy based on two phase neural classification. During the first phase, activities are classified as either static or dynamic activities, then during the second phase more detailed activity recognition is performed. Recently, success with deep learning methods, based on neural networks, has attracted interest from many domains including image classification and natural language processing [48]. As mentioned previously, deep learning can be used to learn features for activity recognition [28], as well as to perform classification.
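Of the non-parametric classifiers above, k-NN is compact enough to sketch in full. The example below classifies a feature vector by majority vote among its k nearest training samples using the Euclidean distance. The two-element feature vectors (mean and standard deviation of acceleration magnitude) and the activity labels are hypothetical values chosen for illustration.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Return the majority label among the k training samples closest to x."""
    # Pair each training sample's Euclidean distance to x with its label.
    distances = sorted((math.dist(xi, x), yi) for xi, yi in zip(train_X, train_y))
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Hypothetical training set: (mean, standard deviation) of acceleration magnitude in g.
train_X = [
    (1.00, 0.02), (0.98, 0.03), (1.01, 0.01),  # sitting
    (1.10, 0.40), (1.05, 0.35), (1.20, 0.50),  # walking
]
train_y = ["sitting"] * 3 + ["walking"] * 3

print(knn_predict(train_X, train_y, (1.00, 0.05)))
print(knn_predict(train_X, train_y, (1.10, 0.45)))
```

Features with different units or ranges would normally be scaled before computing distances, otherwise the feature with the largest numerical range dominates the vote.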

4.3. Feature level parametric algorithms

- Gaussian mixture model (GMM) - GMM can be used as a parametric classifier by modelling the probability distribution of continuous measurements or features. A GMM consists of a weighted sum of Gaussian distributions that can be trained with example data using algorithms such as expectation-maximisation (EM) [38], [49]. A GMM is trained for each class, then the new data examples are classified by determining the GMM that provides the highest likelihood of producing the data. Allen et al. [38] used GMM to distinguish postures and movements for the monitoring of older patients based on accelerometer data, comparing it to the performance of a heuristic DT system. Wang et al. [49] classified five gait patterns using GMM.

- k-Means - k-means is an unsupervised iterative distance-based clustering algorithm. It aims to classify data based on the distance of a data point to the mean centroid of each cluster. The classifier is trained by defining k centroids, one for each cluster. These can be defined randomly or by defining the initial centroid based on all the training data and subsequent centroids using the data points furthest away from the initial centre [24]. An iterative process is then used to minimise the distance of the centroids from the data points. Each data point is assigned to the nearest centroid, after which the centroid is recalculated based on the clusters that are formed. This process is repeated until the stopping criteria have been met. After this process, data for classification is assigned to the closest centroid. Ghassemzadeh et al. [33] used k-means clustering to define motion primitives which, in combination, form transcripts that can be used for activity recognition. Machado et al. [50] applied k-means clustering to the problem of activity recognition using accelerometry, successfully predicting activities with an accuracy of 89% for the user independent case.
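The assign-and-recalculate loop of k-means described above can be sketched as follows. The initial centroids are passed in explicitly so that the example is deterministic; the two-dimensional points and the function names are hypothetical, and the stopping criterion used here (centroids no longer change, or a maximum number of iterations) is one common choice.

```python
import math


def nearest_centroid(point, centroids):
    """Return the index of the centroid closest to point (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, initial_centroids, max_iter=100):
    """Alternate assignment and centroid update until the centroids stop moving."""
    centroids = [tuple(c) for c in initial_centroids]
    clusters = [[] for _ in centroids]
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            clusters[nearest_centroid(p, centroids)].append(p)
        # Update step: each centroid becomes the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(dim) / len(c) for dim in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return centroids, clusters


# Hypothetical two-dimensional feature vectors forming two well separated groups.
points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]
centroids, clusters = kmeans(points, initial_centroids=[(0.0, 0.0), (10.0, 10.0)])
print(centroids)
print(nearest_centroid((9.0, 9.5), centroids))
```

Once the centroids are fixed, new data is labelled with `nearest_centroid`, which corresponds to the final classification step in the description.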

4.4. Decision level algorithms

- Bayesian inference - Approaches based on Bayes' theorem relate the posterior probability, i.e. the probability of the hypothesis occurring given the observations (or features), to the prior probability of the hypothesis and the likelihood, i.e. the probability of the observations given the hypothesis. Bayesian methods enable the inclusion of prior probabilities that can take into account known information and can be updated based on the observations. The Naive Bayes classifier is a popular method for inferring activity from sensor data. Despite the assumption of independence between features, which is often considered poor, it can perform well. Atallah et al. [51] used Bayesian classification for activity recognition from an ear worn accelerometer based device. One drawback of Bayesian inference is the requirement that competing hypotheses are mutually exclusive; this is not generally compatible with the way humans assign belief [24]. Dempster–Shafer theory, also known as belief function theory or evidential reasoning, provides a framework for reasoning with uncertainty by extending the Bayesian approach [24].

- Fuzzy logic - Fuzzy logic, or fuzzy set theory, is a fusion technique that can be applied at the decision level and has been used for the recognition of human activities using both wearable and ambient sensors [52], [53]. Fuzzy logic describes input data in terms of possibility, i.e. the possibility the input data describes some property [24]. Medjahed et al. [53] describe three main steps for the application of fuzzy logic. First, fuzzification takes place, converting the data into fuzzy sets. Secondly, a fuzzy inference system is applied, which consists of fuzzy rules that take the IF/THEN form and fuzzy set operators including the union, complement, and intersection [24]. Finally, defuzzification is applied to convert fuzzy variables generated by the process into real values.

- Topic models - Topic models are unsupervised machine learning algorithms originally designed to aid the understanding of large corpora of text. They allow hidden thematic patterns in a dataset to be discovered using latent Dirichlet allocation. Huynh et al. [54] showed that topic models could be used to discover routine behaviours (e.g. lunch) from other activities (e.g. queuing, eating). Seiter et al. [55] further investigated the robustness of topic models for daily routine discovery by varying the characteristics of simulated datasets based on the original data collected by Huynh et al., and identified optimal values of dataset properties required to achieve good performance stability.
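The relationship between prior, likelihood, and posterior used by the Naive Bayes classifier can be shown with a small worked example. The sketch below multiplies the prior of each hypothesis by the likelihood of each observed feature value (treating features as independent) and normalises the result. All probabilities, feature names, and activity labels are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def naive_bayes_posterior(priors, likelihoods, observations):
    """Return P(hypothesis | observations), assuming independent features.

    priors:       {hypothesis: P(hypothesis)}
    likelihoods:  {hypothesis: {feature: {value: P(value | hypothesis)}}}
    observations: {feature: observed value}
    """
    unnormalised = {}
    for hypothesis, prior in priors.items():
        p = prior
        for feature, value in observations.items():
            p *= likelihoods[hypothesis][feature][value]
        unnormalised[hypothesis] = p
    total = sum(unnormalised.values())
    return {h: p / total for h, p in unnormalised.items()}


# Hypothetical priors and likelihoods for two competing hypotheses.
priors = {"walking": 0.3, "sitting": 0.7}
likelihoods = {
    "walking": {"movement": {"high": 0.8, "low": 0.2},
                "posture": {"upright": 0.9, "reclined": 0.1}},
    "sitting": {"movement": {"high": 0.1, "low": 0.9},
                "posture": {"upright": 0.6, "reclined": 0.4}},
}
posterior = naive_bayes_posterior(
    priors, likelihoods, {"movement": "high", "posture": "upright"}
)
print(posterior)
```

Although sitting is the more probable activity a priori, the observed features shift most of the posterior probability to walking.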

5. Applications of data fusion for health monitoring

5.1. Activity recognition

Activity monitoring using wearable technology has received a vast amount of attention. A person’s level of functional mobility can directly reflect quality of life (QoL) and overall health. From information provided by wearable sensors, feature level data fusion techniques and inference methods can be used for activity recognition at different levels of detail: activity intensity levels, static and dynamic postures, and activities of daily living (ADL).

Static postures refer to activities which are globally still, such as lying and sitting, whereas dynamic postures refer to activities during which someone is actively moving, such as bipedal activities and transitions, e.g. moving from sitting to standing. Standing can be referred to as a dynamic activity, e.g. [19], or a static activity, e.g. [56], depending on the perspective and application. Standing is a globally stationary activity; however, to maintain a standing posture active work is required on the part of the person. Corrective movements are continuously made, which can be detected using a trunk worn accelerometer and have been used to investigate standing balance [57]. In contrast, no active work is required on the part of the person to maintain static postures such as sitting or lying. There are links between health and the amount of dynamic activity a person performs in the form of physical activity, such as walking; thus, even simple measures can provide insight into well-being [58]. Static and dynamic postural information can be used to determine the time spent in various positions and the amount of dynamic activity being carried out.

ADL describe in greater detail the essential tasks of daily living. The ability with which individuals can perform these tasks is commonly assessed using questionnaires [59]. The research literature reflects the interest in using body-worn sensors to identify these activities, which can be treated either as individual activities [37] or by dividing the ADL into the levels of physical intensity each activity requires [51].

It can be seen from the research literature that accelerometers are the most widely used sensors for these applications. Exceptions include Pawar et al. [60], who performed body movement classification using artifacts present in wearable ECG signals, and Roy et al. [46], who combined surface EMG with accelerometers for activity recognition. Gyroscopes are also used for activity recognition, although not as frequently. This is potentially due to their high power consumption, whereas accelerometers can operate at very low power, making them attractive for battery-powered systems. An in-depth review of the technology used in wearable systems for health applications is provided by Lowe and OLaighin [61].

It is worth noting that heuristic algorithms are often employed and used to great effect for activity recognition. These can be used alone or in conjunction with other data fusion techniques. For example, thresholds can be used to define the limits between one state and another, distinguish between periods of static and dynamic activity, and identify posture [19], [56], [62], [63], [64], [65]. Culhane et al. [64] used two bi-axial accelerometers attached to the thigh and sternum; by applying a threshold to the standard deviation of the sensor data, they could determine whether the wearer was static or dynamic. During static activities, posture was inferred from the accelerometers by measuring the tilt of the trunk and thigh. Dalton et al. [65] compared the mean of accelerometer data to thresholds that had been pre-defined to differentiate between activities.
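The threshold heuristics described above can be sketched in a few lines. This is a minimal illustration rather than the implementation of any cited study: the threshold values, the 45 degree upright limit, and the function names are all hypothetical, and a real system would tune them to the sensor and its placement.

```python
import math
import statistics

# Hypothetical threshold on the standard deviation (in g); not taken from any cited study.
STD_THRESHOLD_G = 0.05


def is_dynamic(window, threshold=STD_THRESHOLD_G):
    """Return True if the spread of a window of acceleration samples exceeds the threshold."""
    return statistics.pstdev(window) > threshold


def tilt_degrees(a_long, a_perp):
    """Tilt of a body segment from vertical, given the gravity components (in g)
    along the segment's long axis (a_long) and perpendicular to it (a_perp)."""
    return math.degrees(math.atan2(a_perp, a_long))


def classify_posture(trunk_tilt, thigh_tilt, upright_limit=45.0):
    """Infer a static posture from trunk and thigh tilt (degrees).
    The 45 degree limit is an assumed value for illustration."""
    trunk_upright = abs(trunk_tilt) < upright_limit
    thigh_upright = abs(thigh_tilt) < upright_limit
    if trunk_upright and thigh_upright:
        return "standing"
    if trunk_upright:
        return "sitting"
    return "lying"
```

In use, a window would first be tested with `is_dynamic`; only when it is static would the mean trunk and thigh accelerations be converted to tilt angles and passed to `classify_posture`.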

A wide range of approaches is used for general activity recognition; however, some studies are more disease-specific. Tsipouras et al. [66] developed a method for the automatic assessment of levodopa-induced dyskinesia for patients living with Parkinson’s disease. Using data from body-worn accelerometers and gyroscopes, levodopa-induced dyskinesia could be detected and its severity assessed. Salarian et al. [67] and Rodriguez-Martin et al. [43] also investigated the use of activity classification for Parkinson’s disease using fuzzy classification and SVM, respectively. Other participant cohorts that were the focus of different studies include: those who had recently been in hospital [62], rehabilitation [64], stroke [46], and COPD [17], [68].

5.2. Fall detection and prediction

Fall detection, often performed in conjunction with activity recognition [63], [69], [70], is another widely researched application for wearable sensing technology. The incidence of falls and the risk of injury due to a fall increase as people age, affecting QoL and confidence. After a fall, it may not be possible to call for help or attract attention, which could result in a sustained period of time without assistance. During this time, dehydration, hunger, and injuries sustained during the fall can lead to prolonged hospital stays and potentially prove fatal.

Heuristics are often employed for fall detection, including work by Bourke et al. [71], who investigated fall detection using two tri-axial accelerometers worn on the trunk and thigh. The resultant was calculated for both accelerometers and upper fall thresholds were applied, which were capable of distinguishing 100% of falls from normal activities. In subsequent work, Bourke et al. [72] applied thresholds to the resultant of the angular velocity from a trunk-mounted gyroscope. Karantonis et al. [63] used a single waist-worn accelerometer and thresholds to determine activity, rest, posture and falls. Benocci et al. [73] also conducted fall detection using an accelerometer attached to the sacrum and simulated falls from standing, walking, out of bed, and sliding down a wall. Wang et al. [74] described a three-fold threshold system that combines a trunk-worn accelerometer and cardiotachometer to detect falls. The thresholds test for high accelerometer values, the angle of the trunk, and heart rate to detect a fall.
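A threshold on the resultant acceleration, as used in several of the studies above, can be sketched as follows. The threshold value and the function names are assumptions made for illustration only; the cited studies derived their thresholds from recorded fall and activity data.

```python
import math

# Hypothetical upper fall threshold (in g); not a value reported by the cited studies.
UPPER_FALL_THRESHOLD_G = 3.0


def resultant(ax, ay, az):
    """Magnitude of a tri-axial acceleration sample."""
    return math.sqrt(ax * ax + ay * ay + az * az)


def detect_fall(samples, threshold=UPPER_FALL_THRESHOLD_G):
    """Return the index of the first sample whose resultant exceeds the
    threshold, or None if no sample does."""
    for i, (ax, ay, az) in enumerate(samples):
        if resultant(ax, ay, az) > threshold:
            return i
    return None
```

A multi-threshold scheme such as that of Wang et al. [74] would add further checks (for example trunk angle and heart rate) after the impact test, so that a high resultant alone does not raise an alarm.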

One of the greatest predictors of a fall is having fallen previously; it is therefore equally important to be able to predict a fall so that preventative measures can be put in place. Beyond the detection of falls, Giansanti et al. [75] used wearable sensors to determine the risk of falls using 60 s balance tests. An accelerometer and gyroscope were worn on the trunk and a four-layer ANN was used to classify participants into fall risk levels.

5.3. Gait and ambulatory monitoring

Gait analysis can provide insight into functional mobility, ranging from the ability to perform various bipedal activities to a detailed account of the gait cycle. Gait analysis and biomechanical modelling are traditionally performed in laboratory environments using optical motion capture to track body segment motion. More recently body worn inertial devices have been investigated as an alternative, eliminating the need to collect data in specialised laboratories. Biomechanical modelling of the lower body could be used to build unique gait models such that deviations from the norm could indicate the need for treatment or intervention.

Moe-Nilssen and Helbostad [76] used a low-back-mounted accelerometer to monitor gait variability in the anterior-posterior and mediolateral planes, and to estimate cadence, step length, and stride length over a known distance; the approach was used to differentiate between fit and frail older adults. Xu et al. [77] examined the walking parameters of those recovering from stroke with a hemiparetic gait for rehabilitation purposes. A hierarchical approach using Naïve Bayes and dynamic time warping methods was used to classify walking, after which gait parameters were computed, including walking speed, cadence, stride length, and distance travelled.
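As an illustration of how a gait parameter such as cadence might be derived from a single trunk-worn accelerometer, the sketch below estimates the dominant period of a signal from its autocorrelation. Autocorrelation is chosen here for illustration and is not presented as the method of the studies cited above; the function names, the lag search range, and the assumption that the dominant period corresponds to one step are all hypothetical.

```python
import math


def autocorr(signal, lag):
    """Unbiased autocorrelation of a mean-removed signal at a given lag."""
    n = len(signal)
    mean = sum(signal) / n
    total = sum((signal[i] - mean) * (signal[i + lag] - mean) for i in range(n - lag))
    return total / (n - lag)


def dominant_period(signal, min_lag, max_lag):
    """Lag (in samples) with the highest autocorrelation in [min_lag, max_lag]."""
    return max(range(min_lag, max_lag + 1), key=lambda lag: autocorr(signal, lag))


def cadence_steps_per_min(signal, fs, min_lag, max_lag):
    """Cadence, assuming the dominant period corresponds to one step."""
    return 60.0 * fs / dominant_period(signal, min_lag, max_lag)


# Synthetic example: a 2 Hz oscillation sampled at 50 Hz for 10 s.
fs = 50
synthetic = [math.sin(2 * math.pi * 2.0 * i / fs) for i in range(500)]
cadence = cadence_steps_per_min(synthetic, fs, min_lag=10, max_lag=40)
```

The lag range bounds the plausible step durations (here 0.2 s to 0.8 s) and keeps the search away from the trivial peak at lag zero.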

In the clinical environment, gait has been used to predict the risk of falling using tools such as the Tinetti gait and balance assessment [78]. Body-worn sensors could be used as an alternative or complementary assessment. Caby et al. [79] collected accelerometry data from 10 sensors during a walking test and the Timed Up-and-Go for the objective classification of fallers and non-fallers. Accelerometry and force sensitive resistors have also been used to distinguish between normal and abnormal gait [80]. Ishigaki et al. [81] determined pelvic movement from an accelerometer and gyroscope mounted on the sacrum during 10 m of free walking to find correlations with stability in older adults. Less pelvic motion was found for those classed as unstable based on a single leg balance test.

The differences in bipedal locomotion styles imposed by environmental conditions, such as flat or sloped surfaces and stairs, are subtle. The ability to negotiate these conditions can be an indication of physical well-being and can be used to monitor those with limited mobility. To this end, Wang et al. [82] decomposed the acceleration data from a single waist-mounted sensor into frequency features using wavelets to classify the different walking patterns using a multilayer perceptron neural network. In further work, Wang et al. [83] included walking up and down two different gradients and used GMM for classification. Lau et al. [84] focused on walking conditions for those with unilateral drop foot and deployed two accelerometers and a single gyroscope on the affected side to distinguish the aforementioned conditions and compare classification results from several data fusion methods. Muscillo et al. [85] adopted an adaptive Kalman-based Bayes estimation method to differentiate between locomotor conditions for both young and older adults.

By analysing gait events, such as heel contact, heel-off, and toe-off, body-worn sensors can be used to characterise gait for applications such as drop foot stimulation [86]. Kotiadis et al. [87] investigated gait phase detection for drop foot, exploring trigger timings for a stimulator. For those suffering from Parkinson’s disease and multiple sclerosis, gait disturbances, such as freezing of gait, can be an indication of a higher risk of a fall. Tripoliti et al. [88] used body-worn accelerometers and gyroscopes for the automatic detection of freezing of gait. Accelerometers can also be used to recognise an individual’s gait [89], which in a multi-resident home or a scenario where sensors are shared could aid identification of the wearer.

5.4. Biomechanical modelling

Parametric state estimation algorithms, such as the KF and PF, can be used to measure biomechanical motions by combining accelerometer and gyroscope data to estimate the kinematic parameters. These algorithms come under the banner of signal level fusion methods as they combine commensurate data to achieve the best estimation of a parameter. Musić et al. [90] used an extended KF to fuse inertial sensor data for the reconstruction of body segment trajectories in the sagittal plane of sit-to-stand motions.
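The idea of fusing gyroscope and accelerometer data in a KF can be illustrated with a deliberately simplified, single-state linear filter that tracks one segment angle. This is a sketch under stated assumptions, not the extended KF of Musić et al. [90]: the class name, the noise variances `q` and `r`, and the use of an accelerometer-derived angle as the measurement are hypothetical choices.

```python
class TiltKalman:
    """Scalar Kalman filter for one segment angle (radians).

    Prediction integrates the gyroscope rate; the update corrects the
    estimate with an angle derived from the accelerometer.
    """

    def __init__(self, q=1e-4, r=1e-2, angle=0.0, p=1.0):
        self.q = q          # assumed process noise variance (gyroscope drift)
        self.r = r          # assumed measurement noise variance (accelerometer)
        self.angle = angle  # current angle estimate
        self.p = p          # variance of the estimate

    def step(self, gyro_rate, accel_angle, dt):
        # Predict: propagate the angle using the angular rate.
        self.angle += gyro_rate * dt
        self.p += self.q
        # Update: blend in the accelerometer angle using the Kalman gain.
        k = self.p / (self.p + self.r)
        self.angle += k * (accel_angle - self.angle)
        self.p *= (1.0 - k)
        return self.angle


# Example: a stationary segment held at 0.5 rad, sampled at 100 Hz.
kf = TiltKalman()
for _ in range(50):
    estimate = kf.step(gyro_rate=0.0, accel_angle=0.5, dt=0.01)
```

The gain `k` weights the two sources: a large `r` relative to `q` makes the filter trust the integrated gyroscope signal more, while a small `r` pulls the estimate towards the accelerometer angle.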

Takeda et al. [91] presented a method for gait analysis by calculating the 3-dimensional position of each lower body segment using 7 tri-axial accelerometers and gyroscopes, joint range of motion, the contribution of gravity to the accelerometer signals, and frequency features that describe the cyclic nature of walking.

Due to the high power consumption of gyroscopes, other methods using multiple accelerometers are being developed, such as the double-sensor difference algorithm presented by Liu et al. [92] for the measurement of rotational angles of human segments. Djurić-Jovičić et al. [93] used pairs of tri-axial accelerometers for the estimation of leg segment angles and trajectories in the sagittal plane through the removal of sensor drift.